Use Palantir-provided language models within transforms

To use Palantir-provided language models, AIP must first be enabled on your enrollment.

Palantir provides a set of language and embedding models which can be used within Python transforms. The models can be used through the palantir_models library. This library provides a set of FoundryInputParams that can be used with the transforms.api.transform decorator.

Repository setup

To add language model support to your transforms, open the library search panel on the left side of your Code Repository. Search for palantir_models and choose Add and install library within the Library tab. Repeat this process with language-model-service-api to add that library as well.

Your Code Repository will then resolve all dependencies and run checks again. Checks may take a moment to complete, after which you will be able to start using the library in your transforms.

Transform setup

The palantir_model classes can only be used with the transforms.api.transform decorator.

In this example, we will use the palantir_models.transforms.OpenAiGptChatLanguageModelInput. First, import OpenAiGptChatLanguageModelInput into your Python file. This class can now be used to create our transform. Then, follow the prompts to specify and import the model and dataset that you wish to use as input.

Copied!1 2 3 4 5 6 7 8 9 10 11from transforms.api import transform, Input, Output from palantir_models.transforms import OpenAiGptChatLanguageModelInput from palantir_models.models import OpenAiGptChatLanguageModel @transform( source_df=Input("/path/to/input/dataset") model=OpenAiGptChatLanguageModelInput("ri.language-model-service..language-model.gpt-4_azure"), output=Output("/path/to/output/dataset"), ) def compute_generic(ctx, source_df, model: OpenAiGptChatLanguageModel, output): ...

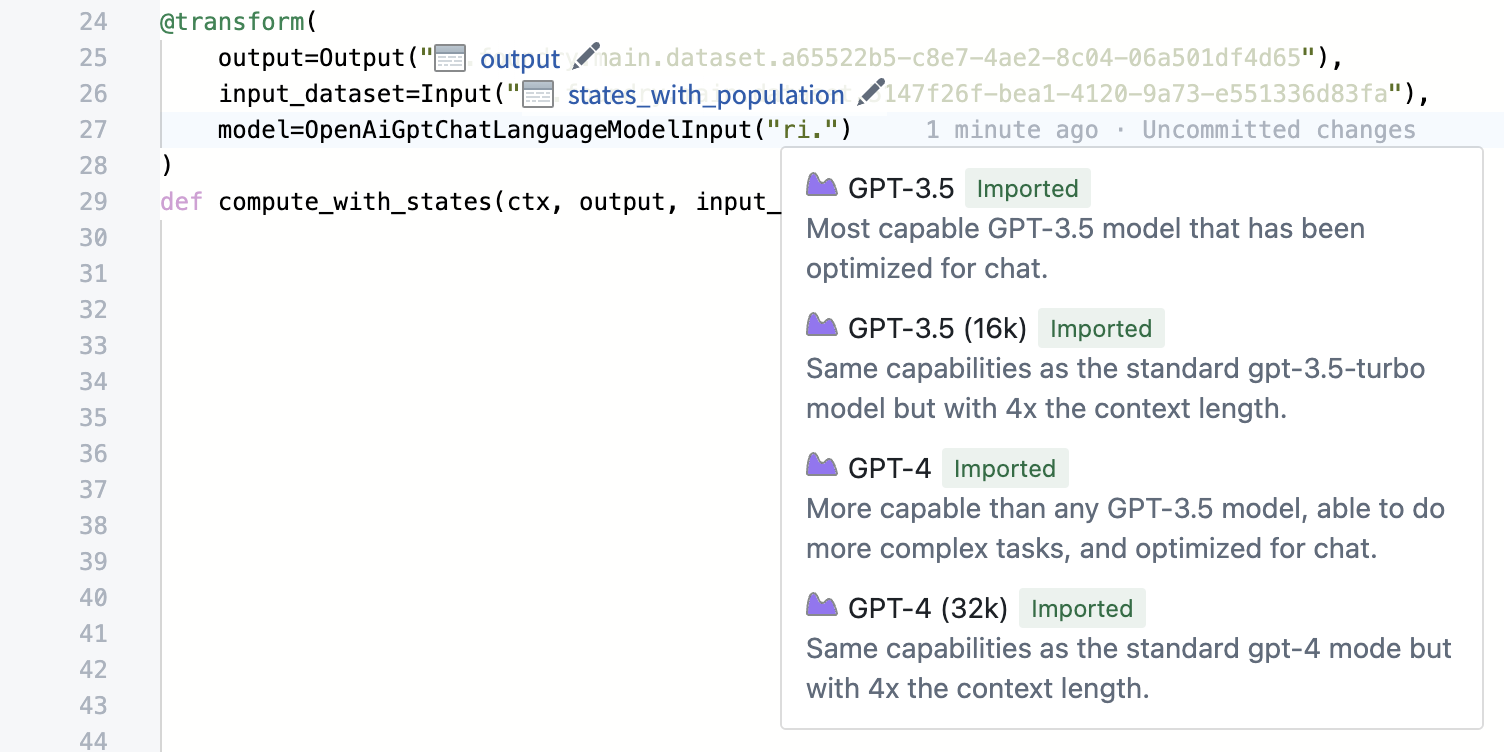

As you begin typing the resource identifier, a dropdown menu will automatically appear to indicate models available for use. You may choose your desired option from the dropdown.

Use language models to generate completions

For this example, we will be using the language model to determine the sentiment for each review in the input dataset. The OpenAiGptChatLanguageModelInput provides an OpenAiGptChatLanguageModel to the transform at runtime which can then be used to generate completions for reviews.

Copied!1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25from transforms.api import transform, Input, Output from palantir_models.transforms import OpenAiGptChatLanguageModelInput from palantir_models.models import OpenAiGptChatLanguageModel from language_model_service_api.languagemodelservice_api_completion_v3 import GptChatCompletionRequest from language_model_service_api.languagemodelservice_api import ChatMessage, ChatMessageRole @transform( reviews=Input("/path/to/reviews/dataset"), model=OpenAiGptChatLanguageModelInput("ri.language-model-service..language-model.gpt-4_azure"), output=Output("/output/path"), ) def compute_sentiment(ctx, reviews, model: OpenAiGptChatLanguageModel, output): def get_completions(review_content: str) -> str: system_prompt = "Take the following review determine the sentiment of the review" request = GptChatCompletionRequest( [ChatMessage(ChatMessageRole.SYSTEM, system_prompt), ChatMessage(ChatMessageRole.USER, review_content)] ) resp = model.create_chat_completion(request) return resp.choices[0].message.content reviews_df = reviews.pandas() reviews_df['sentiment'] = reviews_df['review_content'].apply(get_completions) out_df = ctx.spark_session.createDataFrame(reviews_df) return output.write_dataframe(out_df)

Embeddings

Along with generative language models, Palantir also provides an embedding model. The following example shows how we can use the palantir_models.transforms.GenericEmbeddingModelInput to calculate embeddings on the same reviews dataset. The GenericEmbeddingModelInput provides a GenericEmbeddingModel to the transform at runtime which can be used to calculate embeddings for each review. The embeddings are explicitly cast to floats because the ontology vector property requires this.

Copied!1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22from transforms.api import transform, Input, Output from language_model_service_api.languagemodelservice_api_embeddings_v3 import GenericEmbeddingsRequest from palantir_models.models import GenericEmbeddingModel from palantir_models.transforms import GenericEmbeddingModelInput from pyspark.sql.types import ArrayType, FloatType @transform( reviews=Input("/path/to/reviews/dataset"), embedding_model=GenericEmbeddingModelInput("ri.language-model-service..language-model.text-embedding-ada-002_azure"), output=Output("/path/to/embedding/output") ) def compute_embeddings(ctx, reviews, embedding_model: GenericEmbeddingModel, output): def internal_create_embeddings(val: str): return embedding_model.create_embeddings(GenericEmbeddingsRequest(inputs=[val])).embeddings[0] reviews_df = reviews.pandas() reviews_df['embedding'] = reviews_df['review_content'].apply(internal_create_embeddings) spark_df = ctx.spark_session.createDataFrame(reviews_df) out_df = spark_df.withColumn('embedding', spark_df['embedding'].cast(ArrayType(FloatType()))) return output.write_dataframe(out_df)

Use vision language models to extract PDF document content

For PDF document extraction workflows using vision language models, we recommend using AIP Document Intelligence. AIP Document Intelligence provides an intuitive interface to test different extraction strategies on your documents, compare results, and evaluate extraction quality before deploying to production.

After you deploy a configuration, a Python transform code repository is created and automatically configured with your selected extraction method, model, and any custom prompts. You do not need to manually write transform code for document extraction. Learn more about deploying to Python transforms.