Using Palantir-provided models to create a semantic search workflow

To use Palantir-provided language models, AIP must first be enabled on your enrollment. You also must have permissions to use AIP developer capabilities. Using a custom model? Review Using custom models to create a semantic search workflow instead.

This page illustrates the process of building a notional end-to-end semantic search workflow using a Palantir-provided embedding model.

Instructions

To begin, you need to generate embeddings and store them in an object type with a vector type. Then, you can set up a semantic search workflow in Workshop, build an AIP Agent Workshop widget solution, or create a custom semantic search function for use in Workshop and AIP Logic.

Prerequisite:

- Generate embeddings and create object type

Options:

- Create a simple semantic search workflow within Workshop using a KNN object set (no-code)

- Enable an AIP Agent to semantically search for objects (no-code)

- Create a function to semantically search across objects for use in Workshop and/or AIP Logic

Generate embeddings and create object type

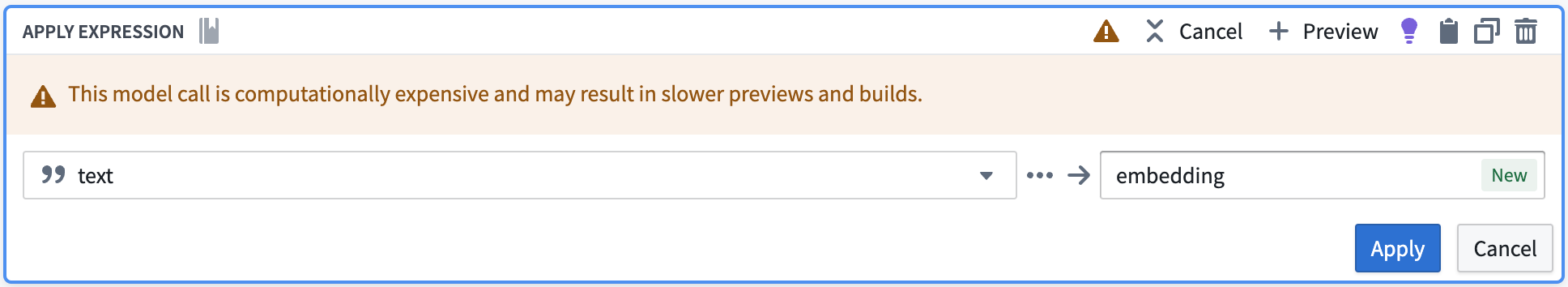

We will use Pipeline Builder to embed text in the dataset as vectors with the Text to Embeddings expression. The expression takes a string and converts it to a vector using one of the Palantir-provided models; in our case, this is the text-embedding-ada-002 embedding model.

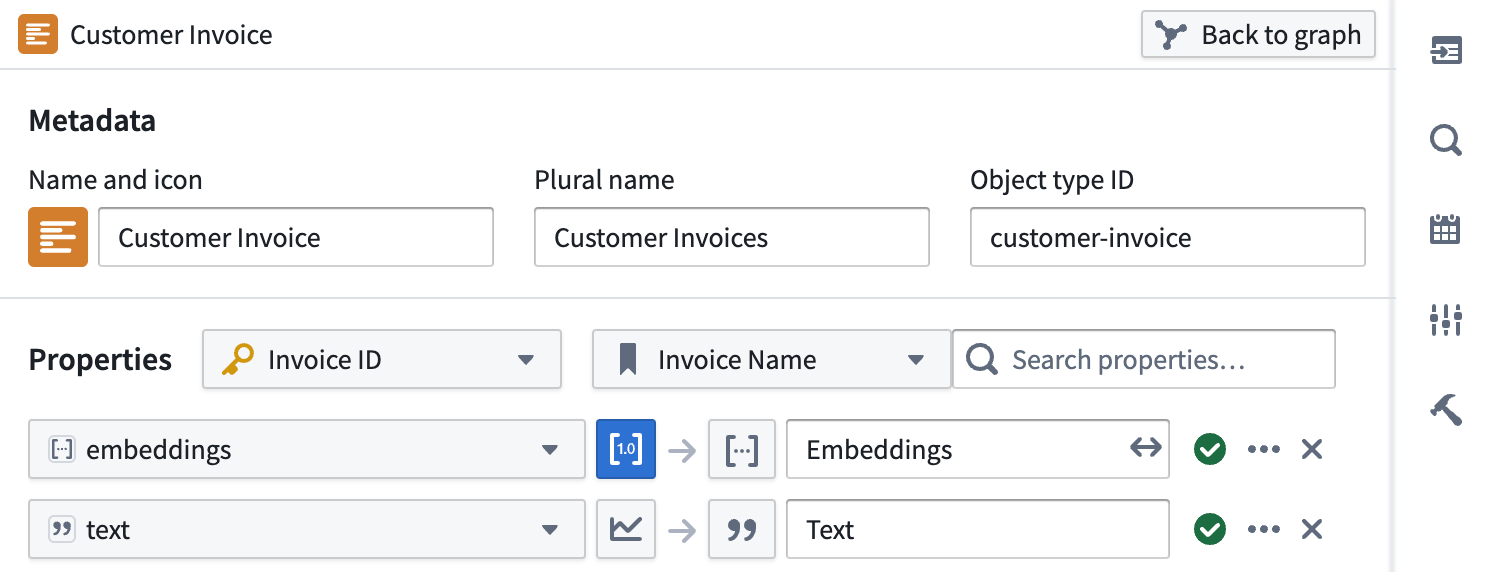

These embeddings can then be added to the Ontology as a vector property.

If you would like more control around the generation of embeddings using Palantir-provided models, see Language models within Python Transforms.
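Because the query vector and the stored vectors need to come from the same model to be comparable, it can help to sanity-check embedding rows before adding them to the Ontology. The sketch below is illustrative only: `EmbeddedRow`, `findInvalidRows`, and the sample rows are hypothetical names and data, not part of Pipeline Builder or any Foundry API. The dimension of 1536 is the output size of text-embedding-ada-002.

```typescript
// Hypothetical row shape: one text field plus its embedding vector.
interface EmbeddedRow {
    id: string;
    text: string;
    embedding: number[];
}

// text-embedding-ada-002 returns 1536-dimensional vectors.
const ADA_002_DIMENSIONS = 1536;

// Returns the ids of rows whose embedding has the wrong length
// or contains a non-finite value (NaN or Infinity).
function findInvalidRows(
    rows: EmbeddedRow[],
    dimensions: number = ADA_002_DIMENSIONS,
): string[] {
    return rows
        .filter(row =>
            row.embedding.length !== dimensions ||
            row.embedding.some(value => !Number.isFinite(value)))
        .map(row => row.id);
}

// Usage with made-up data: row "b" is truncated and row "c" contains NaN.
const sampleRows: EmbeddedRow[] = [
    { id: "a", text: "pump maintenance report", embedding: new Array<number>(1536).fill(0.01) },
    { id: "b", text: "valve inspection", embedding: [0.1, 0.2] },
    { id: "c", text: "sensor log", embedding: new Array<number>(1536).fill(NaN) },
];
const invalidIds = findInvalidRows(sampleRows);
console.log(invalidIds);
```

A check like this could run on a small sample of the dataset; it does not replace the validation that the Ontology performs on vector properties.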

Create a simple semantic search workflow within Workshop using a KNN object set (no-code)

The KNN object set cannot be sorted by relevancy. If you need ordered results, use the function approach.

Configuring a KNN object set within Workshop is an easy no-code way to build a semantic search workflow.

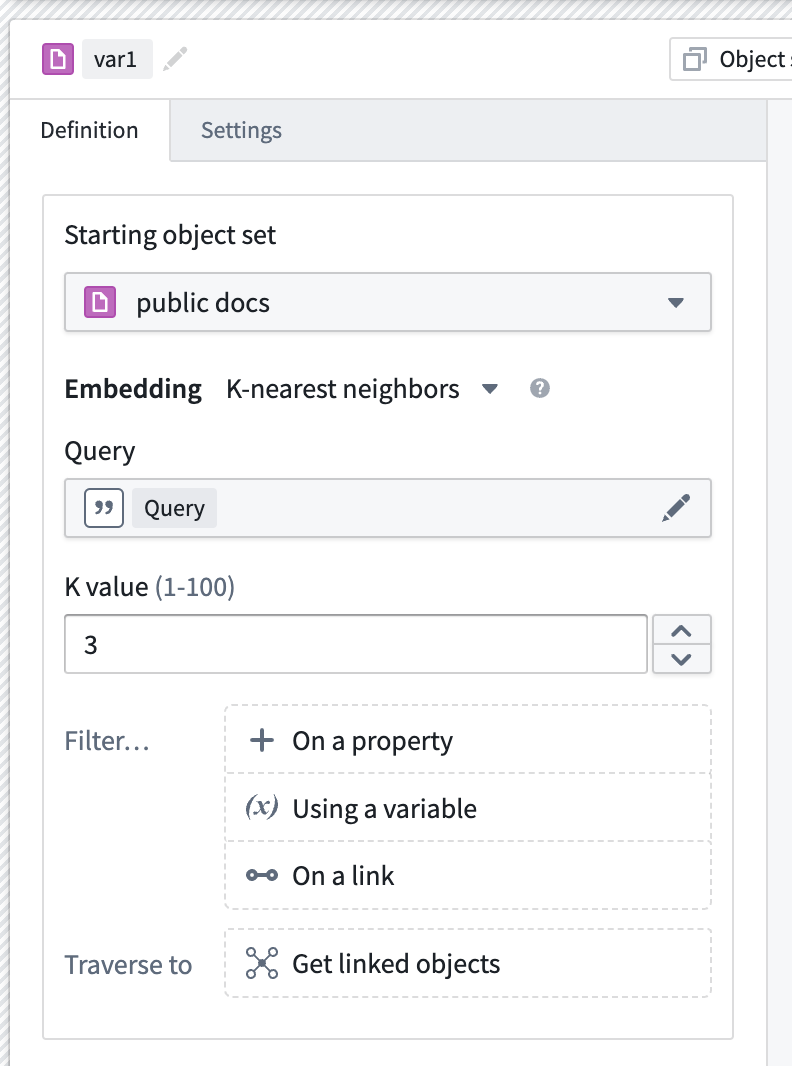

- Create an object set variable and select the object type that contains an embedding property.

- Select the filter + On a property option, then from the list of properties in the menu, select your embedding property.

- Once selected, the K-nearest-neighbors configuration should appear. If this configuration does not appear, verify that the property you selected is an embedding property.

Within this panel, you can configure:

- K-value: A number between 1 and 100 that sets how many objects the semantic search returns.

- Query: The string variable to use as a query when performing the semantic search.

- Next, create a string selector widget and add its output variable to the KNN query option seen above.

- Lastly, add an object table widget and configure its input variable to be the newly created KNN object set.

For more customized semantic search logic, see the section on functions.
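Conceptually, a K-nearest-neighbors search compares the query vector with each stored vector and keeps the K closest objects. The sketch below illustrates that idea in memory, assuming cosine similarity as the closeness measure. It is not how the Ontology executes the search, and `EmbeddedObject`, `cosineSimilarity`, and `nearestNeighbors` are hypothetical names. The range check mirrors the K-value limit described above.

```typescript
// Hypothetical in-memory stand-in for an object with an embedding property.
interface EmbeddedObject {
    id: string;
    embedding: number[];
}

// Cosine similarity: dot product divided by the product of the vector norms.
function cosineSimilarity(a: number[], b: number[]): number {
    if (a.length !== b.length) {
        throw new Error("Vectors must have the same number of dimensions");
    }
    let dot = 0;
    let normA = 0;
    let normB = 0;
    for (let i = 0; i < a.length; i++) {
        dot += a[i] * b[i];
        normA += a[i] * a[i];
        normB += b[i] * b[i];
    }
    if (normA === 0 || normB === 0) {
        return 0; // Treat a zero vector as unrelated to everything.
    }
    return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Brute-force KNN: score every object, sort by descending similarity, keep k.
function nearestNeighbors(
    candidates: EmbeddedObject[],
    query: number[],
    k: number,
): EmbeddedObject[] {
    if (!Number.isInteger(k) || k < 1 || k > 100) {
        throw new Error("k must be an integer between 1 and 100");
    }
    return candidates
        .map(candidate => ({ candidate, score: cosineSimilarity(candidate.embedding, query) }))
        .sort((left, right) => right.score - left.score)
        .slice(0, k)
        .map(scored => scored.candidate);
}

// Usage with tiny made-up 2-dimensional vectors.
const sampleObjects: EmbeddedObject[] = [
    { id: "north", embedding: [0, 1] },
    { id: "east", embedding: [1, 0] },
    { id: "north-east", embedding: [1, 1] },
];
const closestObjects = nearestNeighbors(sampleObjects, [0.9, 0.1], 2);
console.log(closestObjects.map(found => found.id)); // [ 'east', 'north-east' ]
```

Unlike the KNN object set, this sketch returns results in relevance order, which is the behavior the function approach provides.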

Use AIP Agent (no-code)

AIP Agents created in AIP Agent Studio are a good starting point for semantic search across your objects because they do not require any code. Learn more about incorporating semantic search with more control over the functionality.

Follow the instructions on the getting started guide to create an AIP Agent and either add Ontology context or an Ontology semantic search tool. This initial setup will enable you to ask the AIP Agent to semantically search the objects.

Create a function to semantically search across objects for use in Workshop and/or AIP Logic

We can create a TypeScript repository and write a function to query our object type. The overall goal is to take some user input, generate a vector using the same Palantir-provided model used earlier, and then run a KNN search over our object type. For more information on how to import Palantir-provided models, review Language models within Functions.

In the code snippet below, replace every instance of ObjectApiName with the API name of your object type. Note that the identifier may sometimes appear as objectApiName with the first letter in lowercase.

Before proceeding, ensure that the entry "enableVectorProperties": true is present in the functions.json file in your Functions code repository. If this entry is not present, add it to functions.json and commit the change to proceed. Contact your Palantir representative if you need further assistance.

functions-typescript/src/index.ts

```typescript
import { Function, Integer } from "@foundry/functions-api";
import { Objects, ObjectApiName } from "@foundry/ontology-api";
import { TextEmbeddingAda_002 } from "@foundry/models-api/language-models";

export class MyFunctions {
    @Function()
    public async findRelevantObjects(
        query: string,
        kValue: Integer,
    ): Promise<ObjectApiName[]> {
        // Nothing to search for if the query is empty.
        if (query.length < 1) {
            return [];
        }
        // Embed the query with the same model used to embed the objects.
        const embedding = await TextEmbeddingAda_002.createEmbeddings({inputs: [query]}).then(r => r.embeddings[0]);

        // Run the KNN search on the embedding property and order by relevance.
        return Objects.search()
            .objectApiName()
            .nearestNeighbors(obj => obj.embeddings.near(embedding, {kValue: kValue}))
            .orderByRelevance()
            .take(kValue);
    }
}
```

At this point, we have a function that can run semantic search to query objects with natural language. Remember to publish the function so the function can be used anywhere within Foundry.
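The control flow of a function like this (guard against an empty query, embed the query, then search) can be exercised outside Foundry by passing the embedding and search steps in as parameters. The sketch below is a hypothetical test harness, not a Foundry pattern: `Embedder`, `VectorSearch`, and `findRelevant` are illustrative names, and the stubs stand in for the Palantir-provided model and the Ontology search without reproducing their behavior.

```typescript
// Hypothetical stand-ins for the embedding model and the Ontology KNN search.
type Embedder = (text: string) => Promise<number[]>;
type VectorSearch<T> = (vector: number[], kValue: number) => Promise<T[]>;

// Same flow as the function above: skip empty queries, embed, then search.
async function findRelevant<T>(
    query: string,
    kValue: number,
    embed: Embedder,
    search: VectorSearch<T>,
): Promise<T[]> {
    if (query.length < 1) {
        return [];
    }
    const vector = await embed(query);
    return search(vector, kValue);
}

// Stub embedder: returns a made-up vector instead of calling a model.
const stubEmbed: Embedder = async text => [text.length, 0];

// Stub search: ignores the vector and returns the first kValue fixed ids.
const stubSearch: VectorSearch<string> = async (_vector, kValue) =>
    ["doc-1", "doc-2", "doc-3"].slice(0, kValue);

async function demo(): Promise<void> {
    const empty = await findRelevant("", 2, stubEmbed, stubSearch);
    const hits = await findRelevant("pump failure", 2, stubEmbed, stubSearch);
    console.log(empty, hits);
}
demo();
```

Separating the flow from the Foundry-specific calls in this way is a design choice for local testing only; the published function still needs the real model and Ontology imports.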

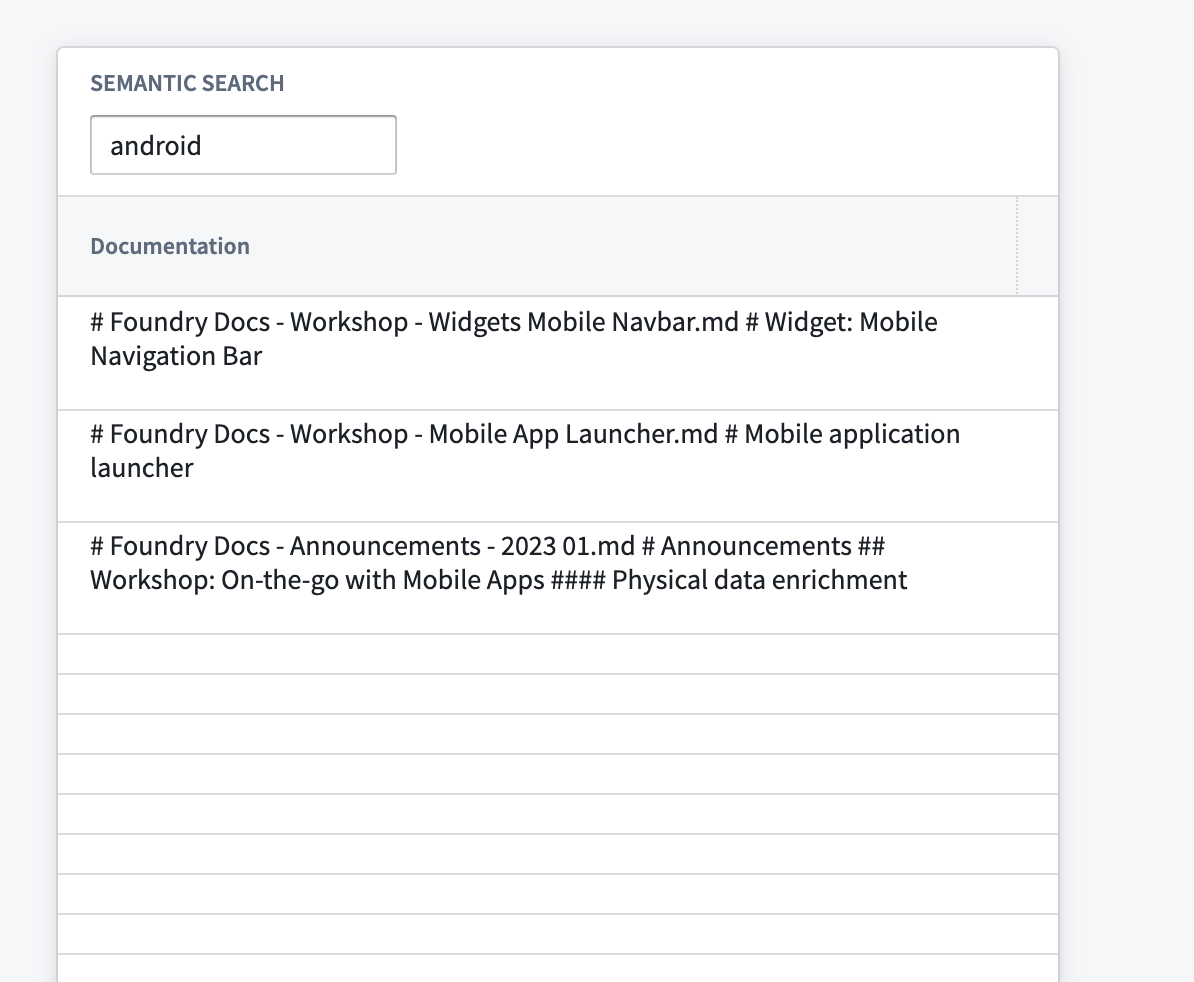

Use semantic search functions in Workshop

- Start by creating a Workshop application.

- Add a text input widget, which will supply the query to the published semantic search function.

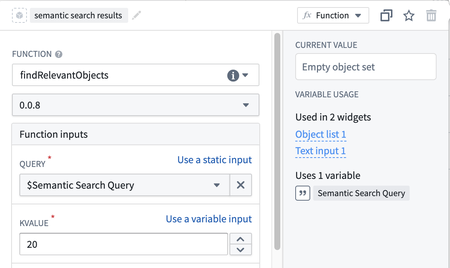

- Add an object list widget with an input object set generated from the function and the selected inputs as shown below:

- Set the kValue to however many results you want returned, subject to the specified limits.

Use semantic search functions in AIP Logic

Add the published function as a tool within AIP Logic. Instruct the language model to use the tool with a prompt similar to this:

Use the findRelevantObjects tool with a kValue of 5 to find the most related objects. Remember to add quotes around the query when using the tool.