Announcements

REMINDER: You can now sign up for the Foundry Newsletter to receive a summary of new products, features, and improvements across the platform directly to your inbox. For more information on how to subscribe, see the Foundry Newsletter and Product Feedback channels announcement.

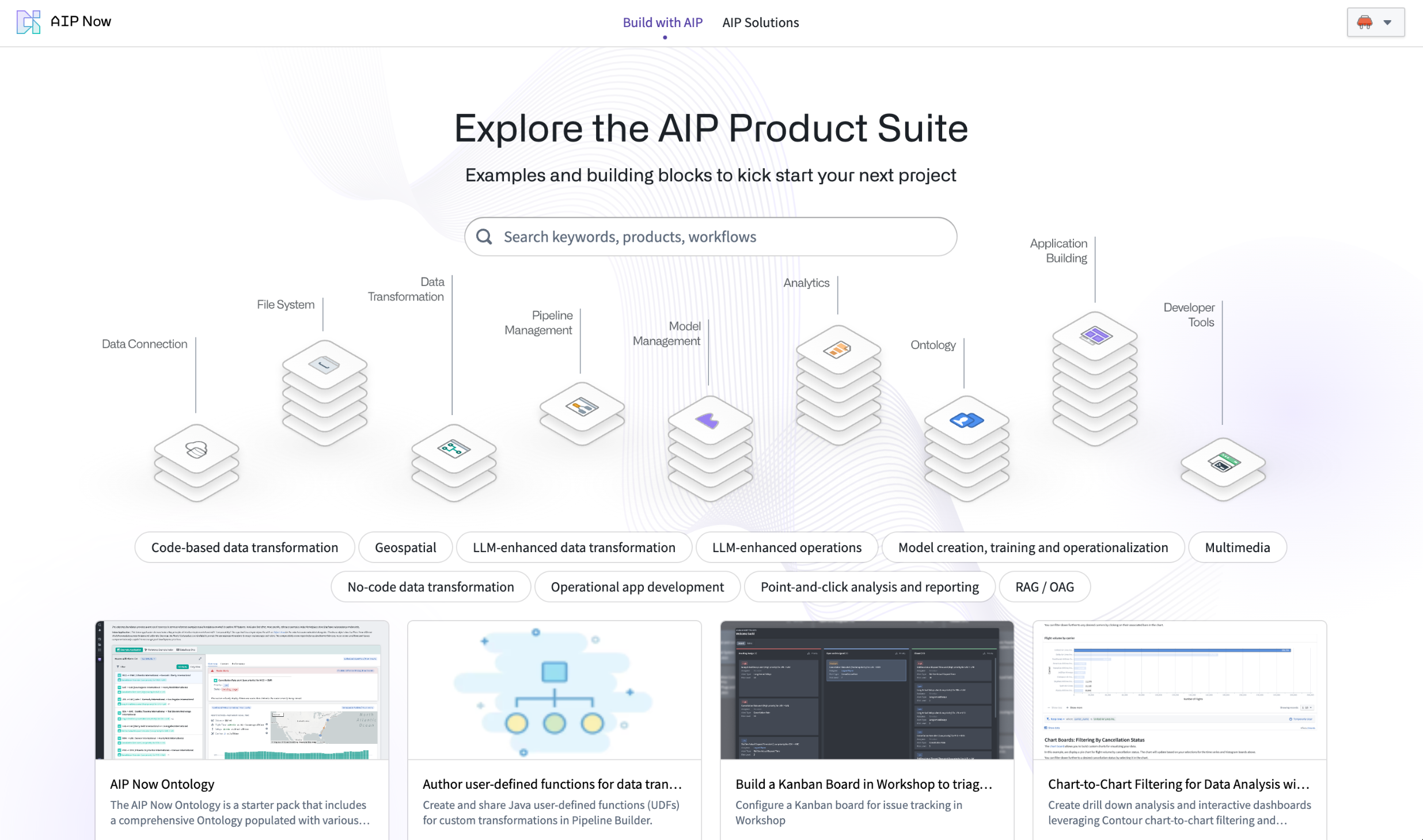

Introducing Build with AIP [Beta], coming early May

Date published: 2024-04-30

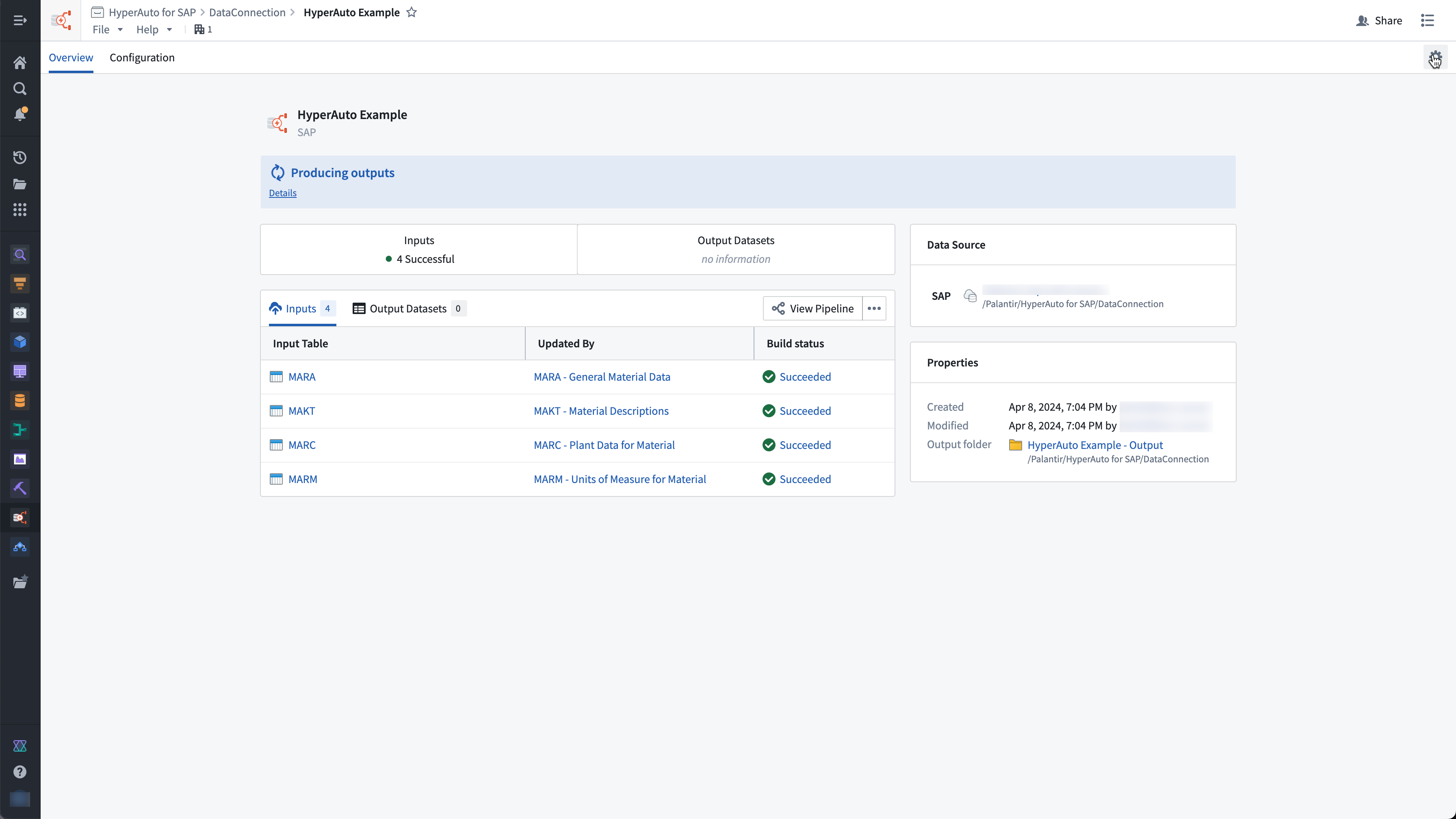

We are excited to announce that Build with AIP will become available in a beta state in early May. The Build with AIP application provides a suite of pre-built products that can be used as reference examples, tutorials, or builder starter packs to adapt to your needs.

From the application, simply search for keywords, use cases, or capabilities to find a product that can accelerate your workflow building. Some of the workflows covered by products include:

- End-to-end semantic search implementation

- Authoring a user-defined function (UDF) for custom boards in Pipeline Builder

- Creating guided forms for user data entry in Workshop

- Code samples to optimize the performance of PySpark transformations

- Using LLMs to classify unstructured data into structured categories

- Rules to automate business processes, such as notifying users upon an event

And more! New AIP products are under active development and will be released as soon as they become available.

Explore the Build with AIP application.

To learn more about a product, select the product to see a detailed explanation of the value, implementation design, and a breakdown of the individual resources contained within. Users can install any product to a destination Project of their choice and monitor the progress of the installation. Previously installed products can always be accessed from within Build with AIP.

Inside the AIP product showcase.

Additional products will be added continuously based on recommended and requested implementation patterns.

The Build with AIP application is on an accelerated release track and will soon be available for all existing customers. Platform administrators opting to restrict access to this feature can do so via Control Panel through Application Access settings.

All products are backed by Foundry Marketplace. For more details, review the documentation.

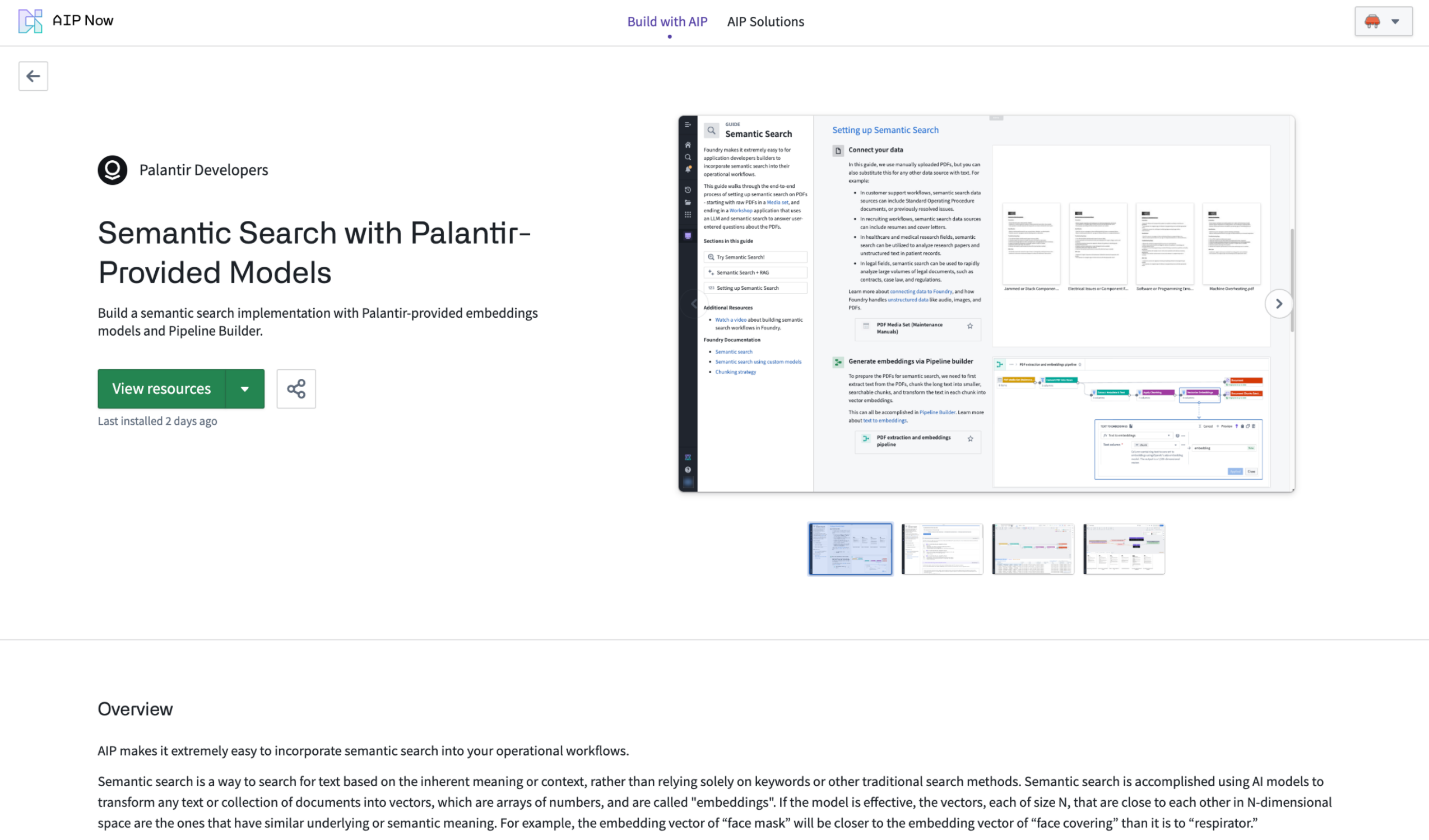

HyperAuto V2 is now available [GA]

Date published: 2024-04-30

HyperAuto is Palantir Foundry’s automation suite for the integration of ERP and CRM systems, providing a point-and-click workflow to dynamically generate valuable, usable outputs (datasets or Ontologies) from the source’s raw data. This feature is now available across all enrollments as of this week.

This new major version introduces a significantly updated user experience as well as increased performance and stability for automated SAP-related ingestion and data transformation workflows.

To create your first HyperAuto pipeline, see Getting Started.

Overview of a live HyperAuto pipeline.

Dynamic integration and management of SAP data

Following the previous beta announcement, the following capabilities are now generally available:

- Ease of configuration: HyperAuto offers a user-friendly, point-and-click wizard, opinionated defaults for quick setup, and customizable settings for advanced users, automating tasks like data renaming, cleaning, de-normalization and de-duplication.

- Pipeline Builder integration: HyperAuto dynamically generates Pipeline Builder pipelines, providing a transparent UI and a change management workflow for updating and deploying pipeline configuration changes.

- Real-time SAP data processing: HyperAuto supports real-time data streaming from SAP sources (requires SLT Replication Server), allowing data to flow from source to Ontology in seconds.

- Support for static cuts of SAP data: With this new release, HyperAuto supports working on top of uploaded datasets, in cases where a live connection to an SAP source is not possible.

For more information on HyperAuto V2, review the documentation.

Website hosting for static applications in Developer Console is now GA

Date published: 2024-04-30

Developer Console now provides the ability to host static websites. This addition allows developers to employ Foundry for hosting and serving their websites without the need for external web hosting infrastructure. Static websites consist of a fixed set of files (HTML, CSS, JavaScript) that are downloaded to the user's browser and displayed on the client side. Numerous web application development frameworks, such as React, can be used to create static websites.

Static website support is available for enrollments on domains managed by Palantir (example: enrollment.palantirfoundry.com or geographical equivalent). Feature support for enrollments on customer-managed domains is currently in active development.

Hosting your site on Foundry

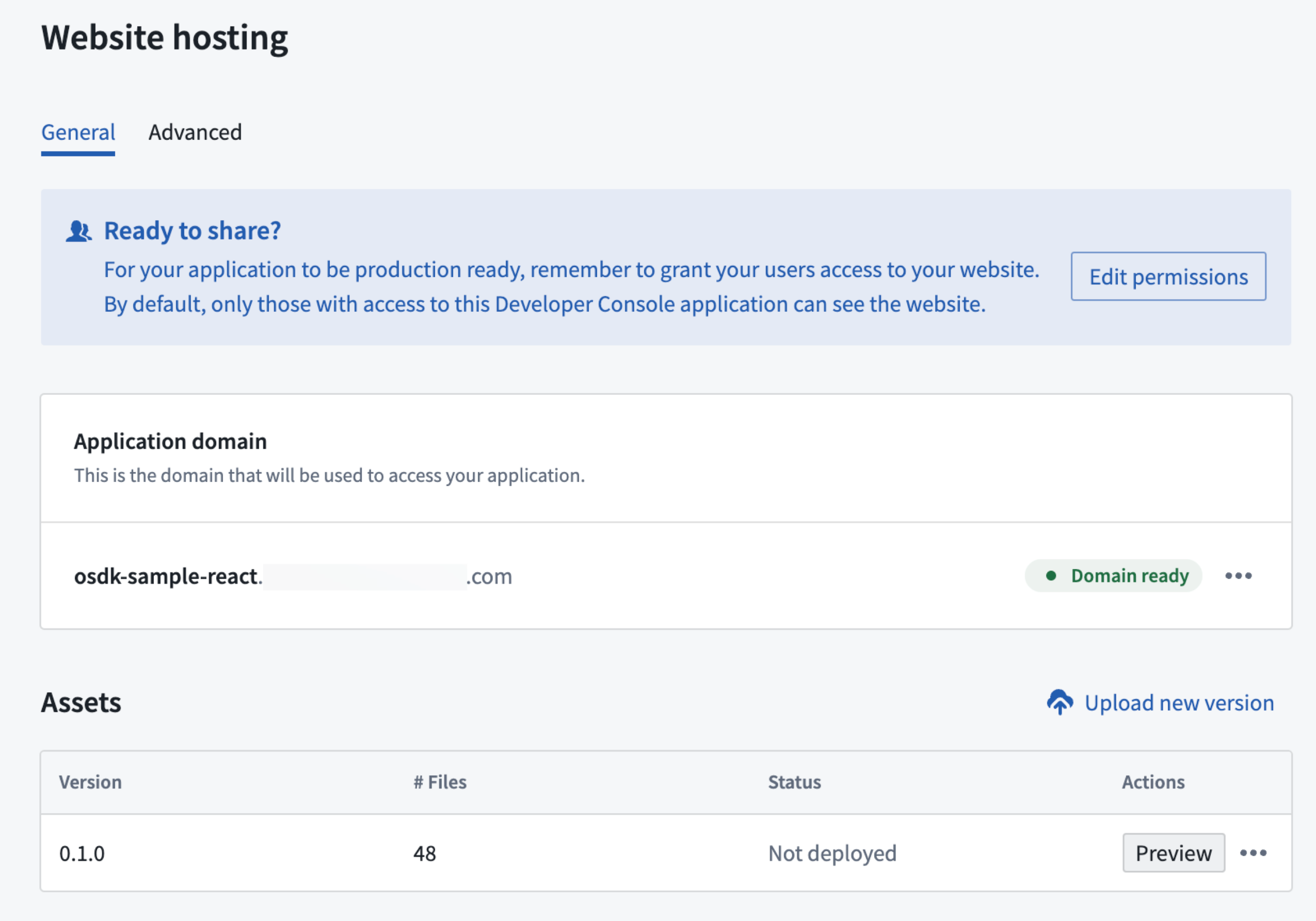

Website hosting menu.

To set up and configure website hosting, you will need to:

- Set an application subdomain for the site, determining the URL your users will use to access the site.

- Upload the asset containing the site to Foundry, preview the results, and publish the site.

You can also configure access to the website and set advanced Content Security Policy (CSP) settings for your site.

Who can configure website hosting?

Web hosting can be configured by any user with the Developer Console edit or owner role. Setting up web hosting includes creating a subdomain, which needs to be approved by the enrollment information officer.

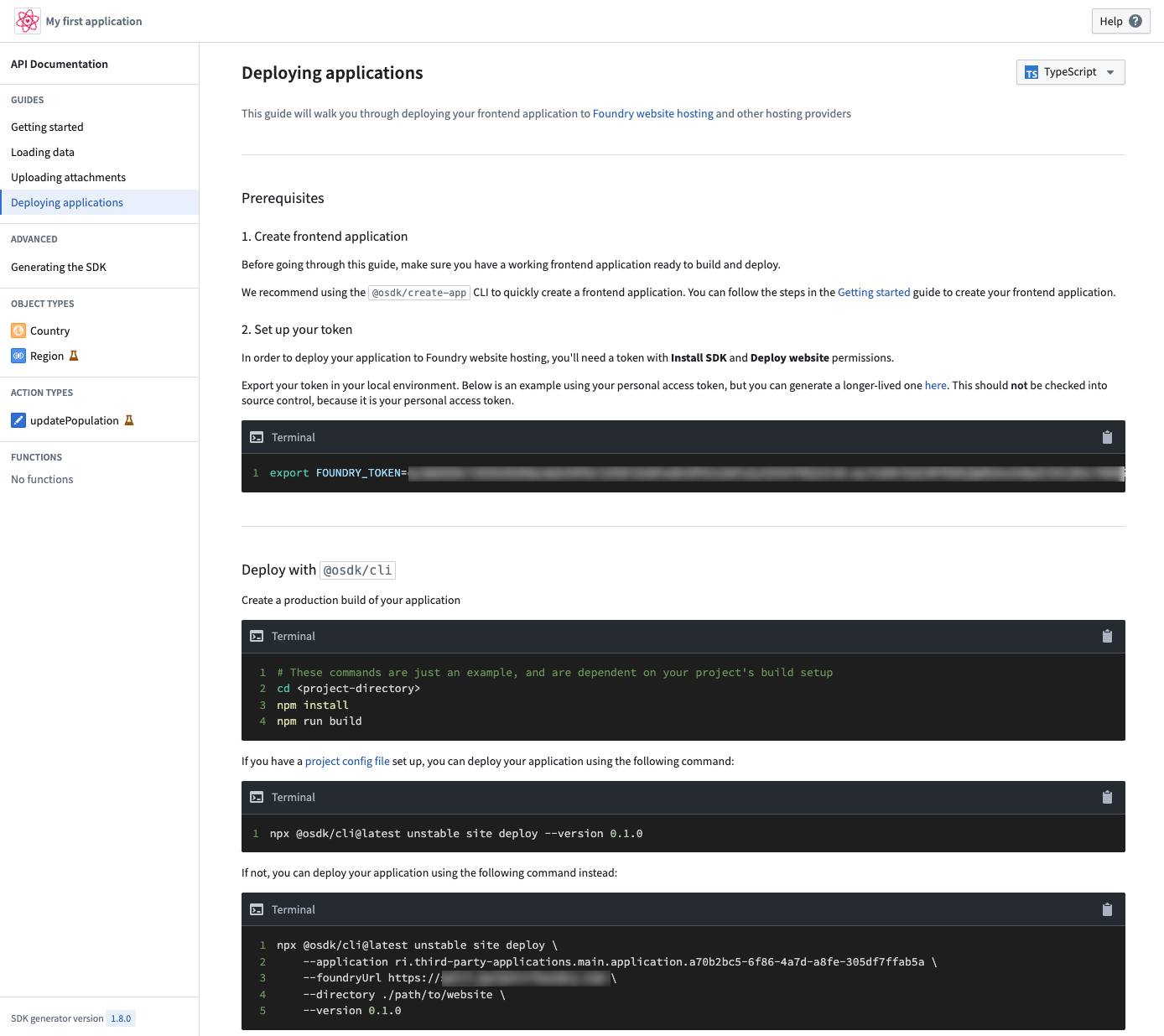

Website hosting command line tool

Automate the static website deployment process using a command line tool or by integrating with existing CI/CD tools. Review the API documentation within your OSDK application for more information.

Deploying applications tutorial within the OSDK application documentation.

What is coming next?

We are actively developing support for website hosting on customer-owned domains, as well as adding support for hosting your code on Foundry and running CI/CD directly from within Palantir Foundry.

To learn more about website hosting configuration, review the documentation.

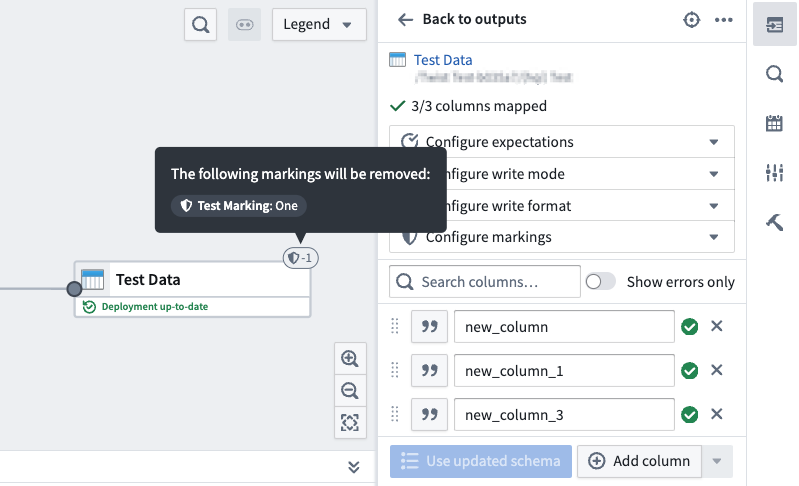

Remove Markings on outputs in Pipeline Builder

Date published: 2024-04-30

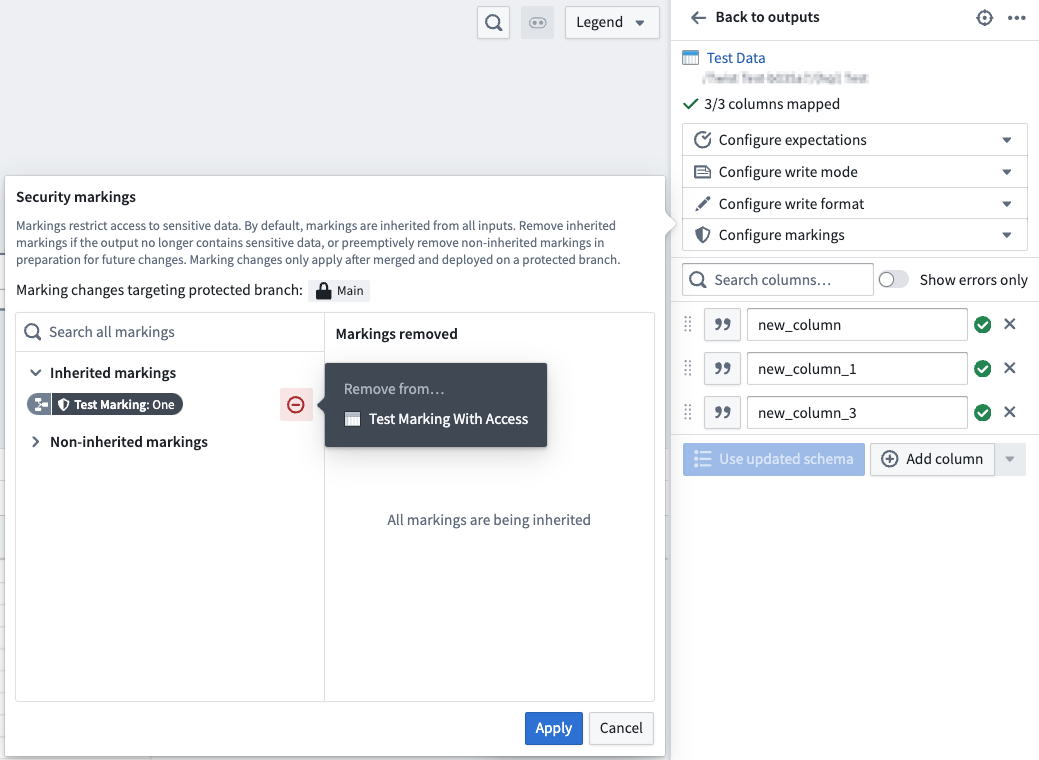

We are excited to announce that you can now remove Markings on outputs directly in Pipeline Builder on all stacks. From within Pipeline Builder, it is now possible to:

- Remove one or multiple inherited markings from your output.

- Proactively remove non-inherited markings that could appear on your dataset in the future.

- Undo any marking removal that was previously applied on your output within the specified pipeline.

To use the remove Markings functionality, ensure you meet the following prerequisites:

- Have the Remove marking permission for the specific Marking(s)

- Branch protection must be enabled on your pipeline

- Code approvals are required on your pipeline

To remove a marking, navigate to the output you want to remove the marking from and select Configure Markings.

Markings option panel with cursor hovering over the remove from icon.

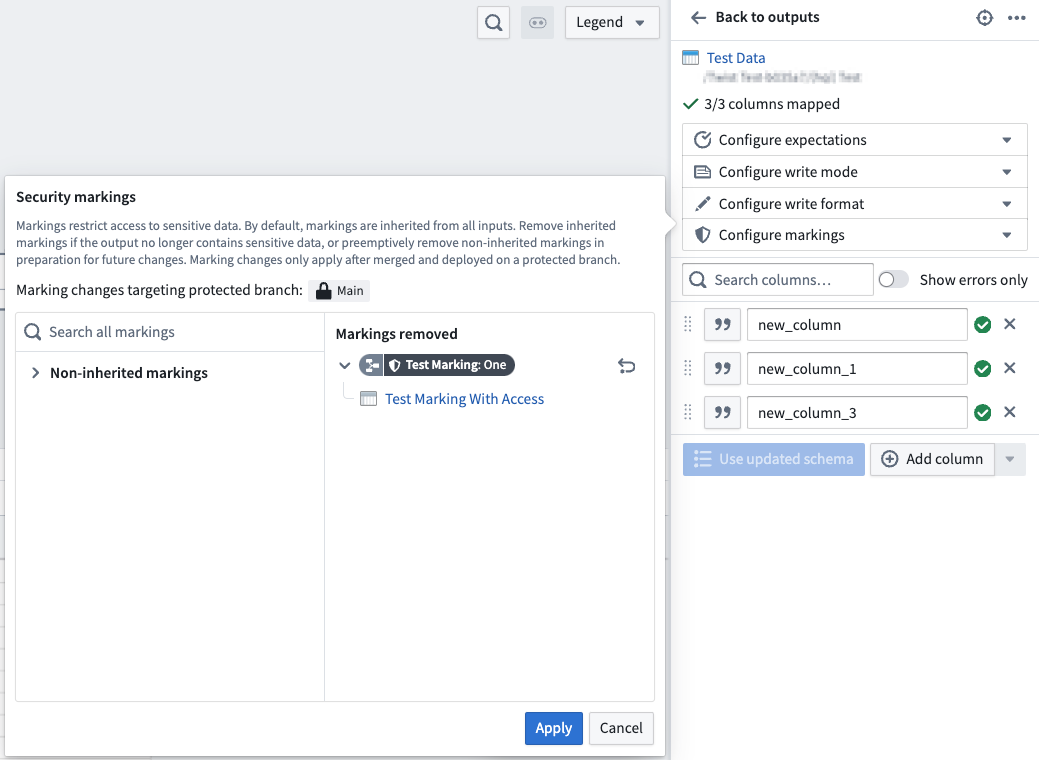

Once you have selected the marking(s) you wish to remove, these markings will be listed under the Markings removed section.

After the removals are applied, the marking will no longer appear on the output once your branch is merged and built on master.

Review the documentation to learn more about removing Markings directly in Pipeline Builder.

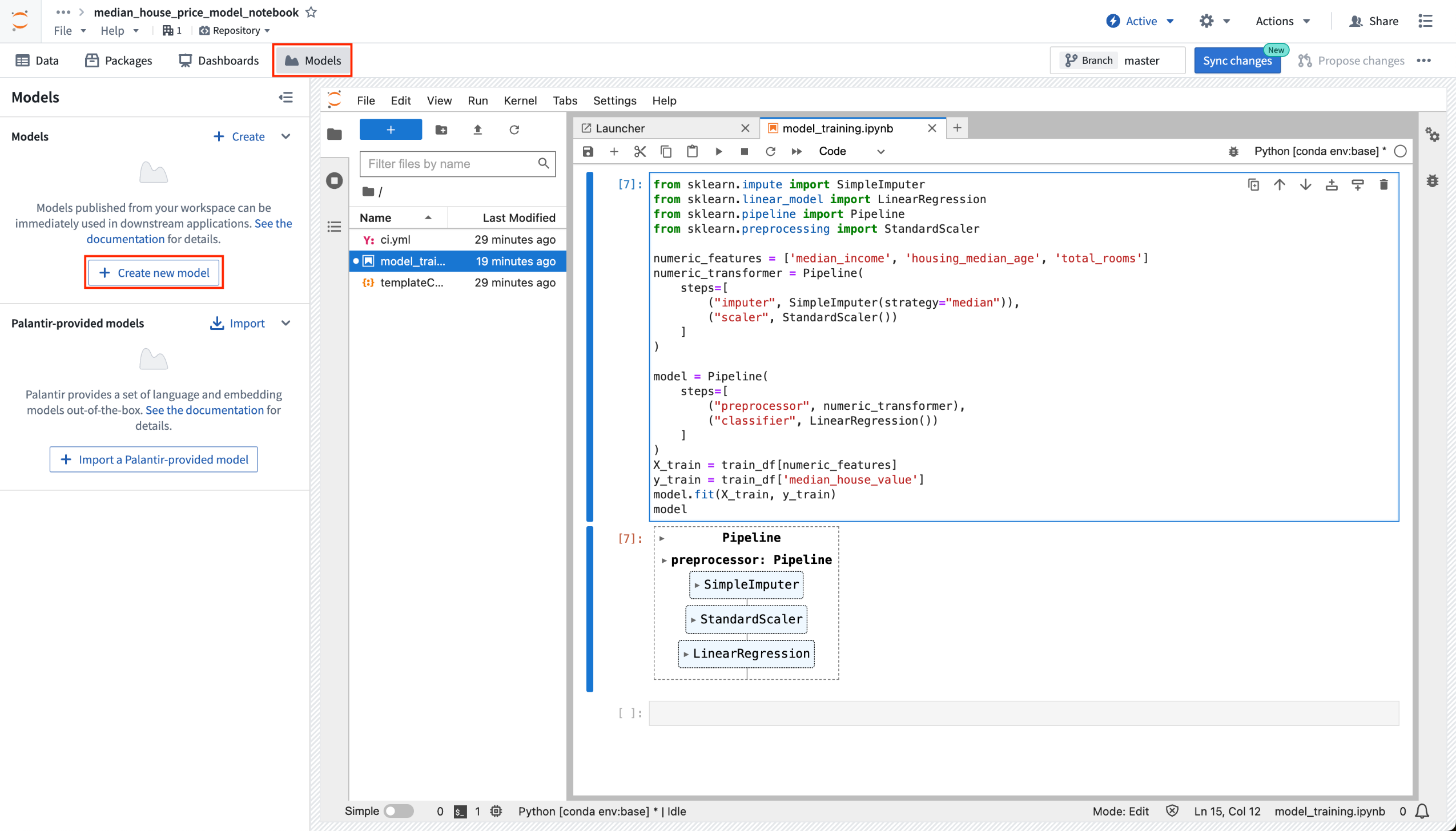

Model development in Jupyter® notebooks now generally available

Date published: 2024-04-30

Beginning today, users can take full advantage of the existing Code Workspaces JupyterLab® integration to train, test, and publish models for consumption across the platform. Code Workspaces provides an alternative to the existing model training flow in Code Repositories by offering a more familiar, interactive environment for data scientists.

Models published through Code Workspaces are immediately available for consumption in downstream applications like Workshop, Slate, Python transforms, and more.

Create models from within Code Workspaces

Models can now be published directly from within Code Workspaces. Just navigate to the Models tab, and select Create a model. Once you have selected your model's location, you will be asked to define a model alias, which is a human readable identifier that maps to the resource identifier used by Palantir Foundry to identify your model.

Create new model option from within Code Workspaces.

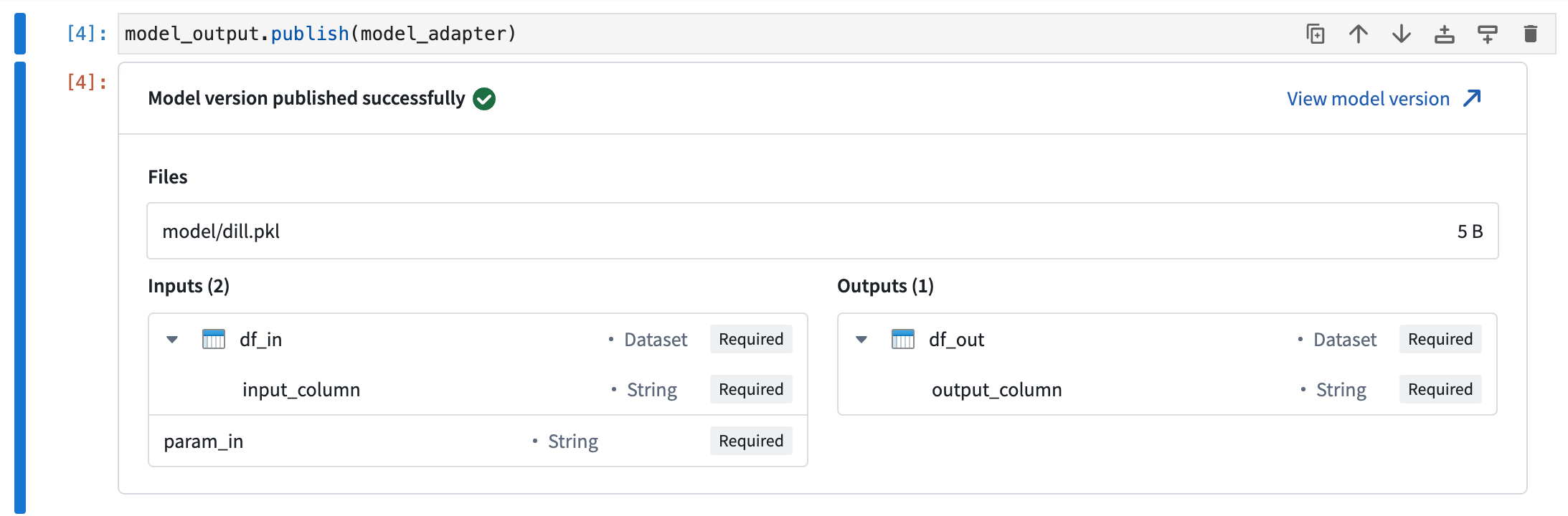

Publish your models directly to Foundry

After defining your model's alias, follow the step-by-step guide located on the left of the view to publish your model to Foundry. Review details on installing the required packages, defining your model adapter, and finally, publishing your model.

When a model is first created, a skeleton adapter file will be generated with the name [MODEL_ALIAS]_adapter.py. Review the Model adapter serialization documentation and Model adapter API documentation for more information on how to define a model adapter.

Once you have authored your adapter, you can use the provided model publishing snippet to publish your model to Foundry. Select the snippet to copy it, then paste it into a notebook cell, and execute it.

Models that publish successfully will display a preview output indicating that publishing was a success, and will display information about the model like the model's API and the saved model files.

Model version successful publish notice.

Learn more

To learn more about developing and publishing models from Code Workspaces, review the Code Workspaces model training documentation. You can also review our full tutorial that walks you through setting up your project, building your model, and deploying your model for consumption in downstream applications.

Jupyter®, JupyterLab®, and the Jupyter® logos are trademarks or registered trademarks of NumFOCUS. All third-party trademarks (including logos and icons) referenced remain the property of their respective owners. No affiliation or endorsement is implied.

Sunsetting Preparation (Blacksmith)

Date published: 2024-04-30

Preparation (Blacksmith) is being sunset and replaced by Pipeline Builder, the de facto tool for no-code pipelining and data cleaning. Preparation has been in maintenance mode for several years and will not support Marketplace integrations. While new enrollments will no longer be granted access, this change will not affect any existing installations of Preparation for now.

Introducing Model Catalog: Available End of April 2024 [GA]

Date published: 2024-04-24

Model Catalog will be generally available at the end of April 2024, enabling users to view all Palantir-provided models and discover new models in AIP.

Model Catalog enables builders to:

- View the models that are available in AIP and discover new models.

- Select the right model for your use case.

- Get started with a workflow using both basic templates and entire use case templates in Marketplace.

- Test different models in a sandbox/playground.

Model Catalog has two main components: the Model Catalog home page and the Model entity page.

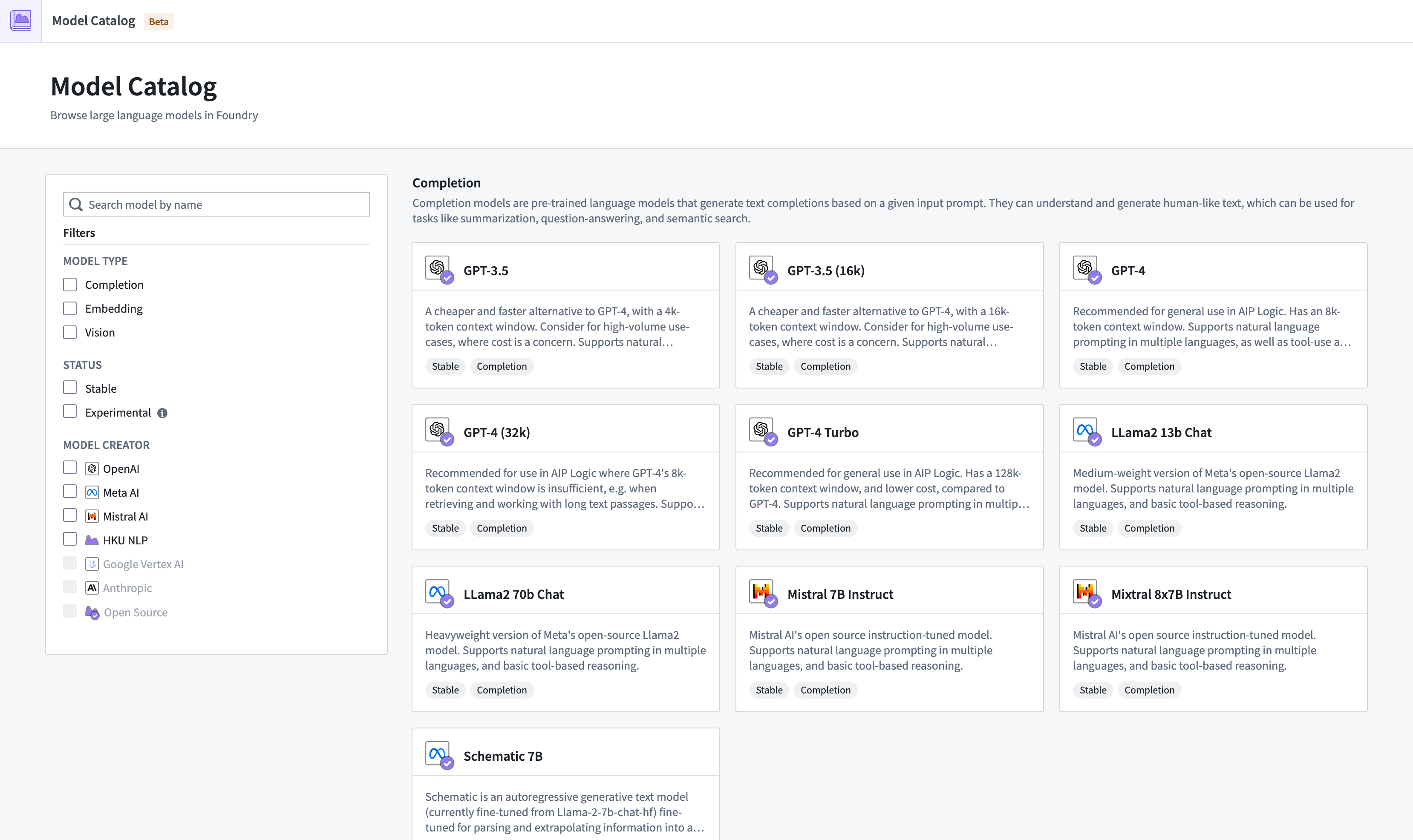

Model Catalog home page

The Model Catalog homepage.

The Model Catalog homepage is a discovery and navigation interface, displaying all large language models available on your Foundry enrollment.

There are a few ways to filter models in the home page:

- Model Type: View all available completion models, embedding models, or vision models.

- Status: View models based on their lifecycle status, either stable or experimental.

- Model Creator: View models based on the organization responsible for creating, developing, and maintaining a specific LLM.

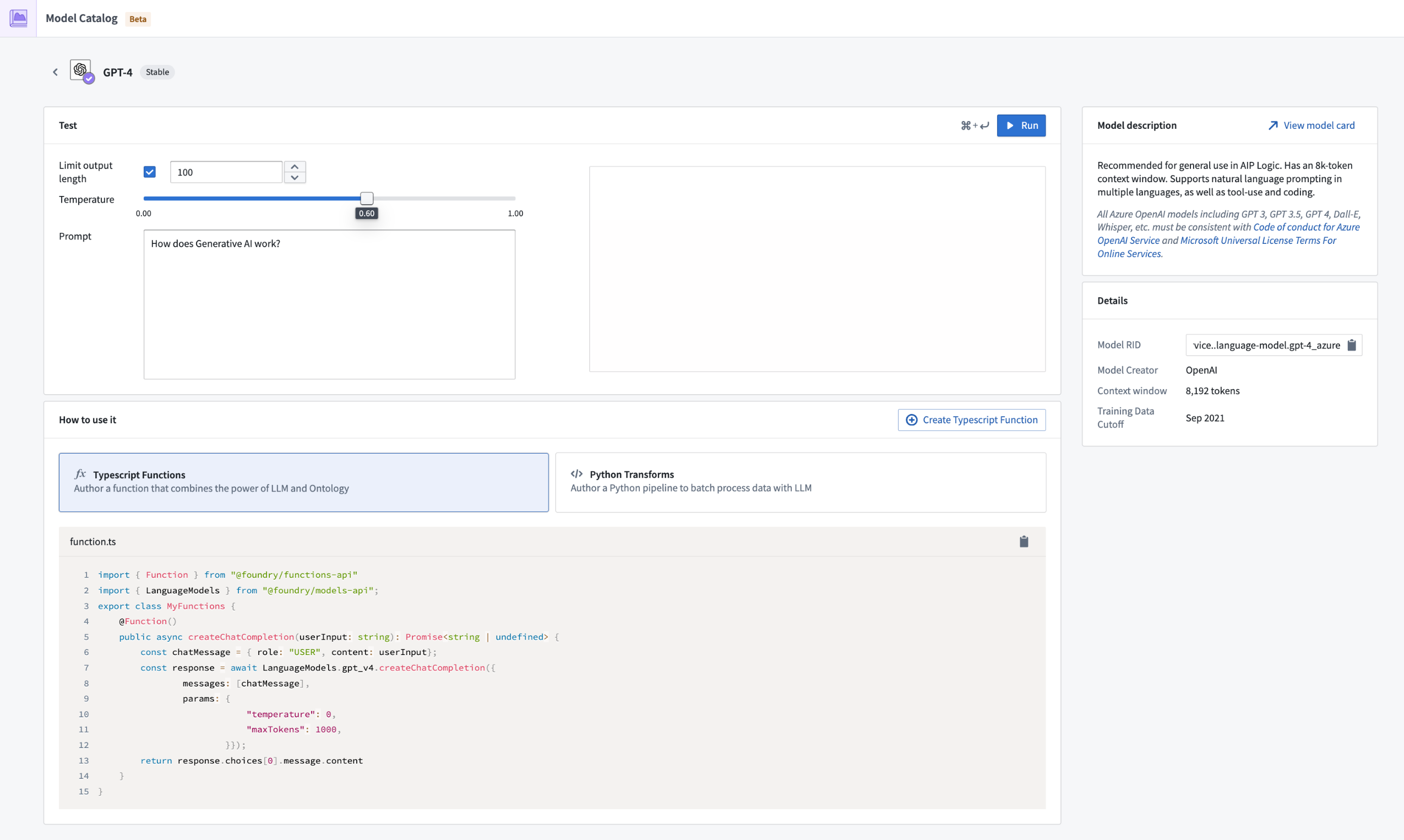

Model entity page

Each model has an entity page with three main sections:

- Test: An interface for builders to try out the different models.

- How to use it: Get started by creating a resource, already populated with the content required to start building your workflow. Model Catalog currently supports Functions and Transforms.

- Model description: View a basic description, legal disclaimer, and details of the model such as its context window (token limit), training data cutoff, and more.

The Model entity page.

What's next on the development roadmap?

- Provide more tools and benchmarks for comparing models and making decisions.

- Improve the testing sandbox to support different features and prompt types.

- Expand the How to use it functionality to all AIP applications.

For more information on Model Catalog, review the documentation.

Announcing Ontology SDK TypeScript 1.1

Date published: 2024-04-24

In preparation for a major version upgrade (TypeScript 2.0), we are releasing minor version 1.1. Migrating your code to this new version will help you get ready for TypeScript 2.0.

Why are we developing TypeScript 2.0?

We refactored the OSDK TypeScript semantics to support new Ontology primitives, such as Ontology interfaces and real-time subscriptions, and to provide a more efficient way to work with data. This change will be labeled as major release v2.0, to be released before the end of 2024.

Prior to the release of version 2.0, we are aiming to minimize the impact for customers by first providing version 1.1 and 1.2 as a bridge to the upcoming upgrade.

To clarify, the next two upcoming versions are detailed below:

- Version 1.1: This version maintains the current language syntax but will deprecate a few methods and properties that will no longer be supported.

- Version 1.2: Released later this year, version 1.2 will mainly provide the same functionality as the 1.x version but will introduce the new language syntax.

What will change in OSDK TypeScript 1.1?

The following table is a summary of deprecated features and their replacements, with more detailed info on the changes below:

| Deprecated | Replacement |
|---|---|
| page | fetchPage, fetchPageWithErrors |
| __apiName | $apiName |
| __primaryKey | $primaryKey |
| __rid | $rid |
| all() | asyncIter() |
| bulkActions | batchActions |
| searchAround{linkApiName} | pivotTo(linkApiName) |
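One way to locate code affected by the table above is to scan source text for the deprecated names. The following is a minimal sketch under stated assumptions: the `rules` list mirrors the table, while `findDeprecatedUsages` and its regular expressions are hypothetical helpers written for this illustration and are not part of the OSDK. Plain pattern matching can produce false positives (for example, `Promise.all(` matches the `all()` rule), so treat the output as a starting point for manual review.

```typescript
// Each rule pairs a pattern for a deprecated name with the replacement from the table.
// The patterns are assumptions about how these names usually appear in code.
interface Rule {
  pattern: RegExp;
  deprecated: string;
  replacement: string;
}

const rules: Rule[] = [
  { pattern: /\.page\(/, deprecated: "page", replacement: "fetchPage, fetchPageWithErrors" },
  { pattern: /\b__apiName\b/, deprecated: "__apiName", replacement: "$apiName" },
  { pattern: /\b__primaryKey\b/, deprecated: "__primaryKey", replacement: "$primaryKey" },
  { pattern: /\b__rid\b/, deprecated: "__rid", replacement: "$rid" },
  { pattern: /\.all\(/, deprecated: "all()", replacement: "asyncIter()" },
  { pattern: /\bbulkActions\b/, deprecated: "bulkActions", replacement: "batchActions" },
  { pattern: /\.searchAround[A-Z]\w*\(/, deprecated: "searchAround{linkApiName}", replacement: "pivotTo(linkApiName)" },
];

interface DeprecatedUsage {
  line: number; // 1-based line number within the scanned text
  deprecated: string;
  replacement: string;
}

// Reports every line of `source` that matches one of the rules above.
function findDeprecatedUsages(source: string): DeprecatedUsage[] {
  const usages: DeprecatedUsage[] = [];
  source.split("\n").forEach((text, index) => {
    for (const rule of rules) {
      if (rule.pattern.test(text)) {
        usages.push({ line: index + 1, deprecated: rule.deprecated, replacement: rule.replacement });
      }
    }
  });
  return usages;
}

const sample = [
  "const r = await client.ontology.objects.Employee.page();",
  "console.log(employee.__primaryKey);",
  "await client.ontology.objects.Employee.fetchPage();",
].join("\n");

for (const usage of findDeprecatedUsages(sample)) {
  console.log(`line ${usage.line}: ${usage.deprecated} -> ${usage.replacement}`);
}
```

The patterns deliberately omit the global flag so that repeated `test` calls do not carry state between lines.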

Page()

We are deprecating the page function and adding the following two methods for convenience:

- fetchPageWithErrors: This functions exactly like page did, and was renamed for consistency with the API naming conventions of the upcoming V2.0.

- Before

```typescript
const result: Result<Employee[], ListObjectsError> = await client.ontology
    .objects.Employee.page();

if (isOk(result)) {
    const employees = result.value.data;
}
```

- After

```typescript
const result: Result<Employee[], ListObjectsError> = await client.ontology
    .objects.Employee.fetchPageWithErrors();

if (isOk(result)) {
    const employees = result.value.data;
}
```

- fetchPage: This returns a page that does not have a result wrapper (no Result<Value,Error>). This was added for users who prefer the try/catch pattern, as it will throw errors where relevant.

- Before

```typescript
const result: Result<Employee[], ListObjectsError> = await client.ontology
    .objects.Employee.page();

if (isOk(result)) {
    const employees = result.value.data;
}
```

- After

```typescript
try {
    // fetchPage throws on failure instead of returning a Result wrapper
    const result: Page<Employee> = await client.ontology
        .objects.Employee.fetchPage();
    const employees = result.data;
} catch (e) {
    console.error(e);
}
```
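The difference between the two replacements comes down to how errors reach the caller. The following self-contained sketch uses simplified stand-in types to illustrate converting a wrapped result into the throwing style. `Result`, `Page`, `fetchPageWithErrorsStub`, and `unwrapOrThrow` are all hypothetical here and only approximate the shapes described above; the real OSDK definitions may differ.

```typescript
// Simplified stand-ins for the OSDK types; the real definitions may differ.
type Result<V, E> = { type: "ok"; value: V } | { type: "error"; error: E };

interface Page<T> {
  data: T[];
}

interface Employee {
  name: string;
}

// Hypothetical stub that mimics a call returning a wrapped result.
async function fetchPageWithErrorsStub(fail: boolean): Promise<Result<Page<Employee>, Error>> {
  return fail
    ? { type: "error", error: new Error("request failed") }
    : { type: "ok", value: { data: [{ name: "Ada" }] } };
}

// Converts the wrapped style into the throwing style used with try/catch.
function unwrapOrThrow<V, E>(result: Result<V, E>): V {
  if (result.type === "ok") {
    return result.value;
  }
  throw result.error;
}

// Caller written in the try/catch style: failures arrive in the catch block.
async function listEmployeeNames(fail: boolean): Promise<string[]> {
  try {
    const page = unwrapOrThrow(await fetchPageWithErrorsStub(fail));
    return page.data.map((employee) => employee.name);
  } catch (e) {
    console.error(e);
    return [];
  }
}
```

With the wrapped style, the compiler forces the caller to check for success before touching the value. With the throwing style, the happy path reads more directly, but a forgotten try/catch lets the error propagate.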

apiName, primaryKey, rid

We are deprecating __apiName, __primaryKey and __rid properties and replacing them with $apiName, $primaryKey and $rid properties.
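During migration, shared utility code may encounter objects that expose only the old property names, only the new ones, or both. The sketch below is a hypothetical helper for that situation: `OntologyObjectLike` and `getPrimaryKey` are illustrative names, not part of the OSDK, and the property types are assumptions. It prefers `$primaryKey` and falls back to the deprecated `__primaryKey`.

```typescript
// Illustrative shape only; generated OSDK object types are richer than this.
interface OntologyObjectLike {
  $primaryKey?: string | number;
  __primaryKey?: string | number; // deprecated name
}

// Prefer the new property name and fall back to the deprecated one.
function getPrimaryKey(obj: OntologyObjectLike): string | number | undefined {
  return obj.$primaryKey ?? obj.__primaryKey;
}

console.log(getPrimaryKey({ $primaryKey: 7, __primaryKey: 3 }));
console.log(getPrimaryKey({ __primaryKey: "emp-1" }));
```

Once all call sites read `$primaryKey` directly, a helper like this can be removed.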

All()

We are deprecating the all() method and adding the following method instead for convenience:

- asyncIter(): This returns an async iterator that allows you to iterate through all results of your object set.

- Before

```typescript
const result: Result<Employee[], ListObjectsError> = await client.ontology
    .objects.Employee.all();

if (isOk(result)) {
    const employees = result.value.data;
}
```

- After

```typescript
const employees: Employee[] = await Array.fromAsync(await client.ontology.objects.Employee.asyncIter());
```
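`Array.fromAsync` is a relatively recent addition to JavaScript and may not exist in every runtime. As an alternative, an async iterator can be consumed with a `for await` loop. The sketch below uses a hypothetical stand-in generator, `employeeIter`, in place of `asyncIter()` so that it runs on its own; `collect` is likewise an illustrative helper rather than an OSDK function.

```typescript
interface Employee {
  name: string;
}

// Hypothetical stand-in for an object set's asyncIter(); yields a fixed list.
async function* employeeIter(): AsyncGenerator<Employee> {
  yield { name: "Ada" };
  yield { name: "Grace" };
}

// Collects every item from an async iterable into an array.
async function collect<T>(iterable: AsyncIterable<T>): Promise<T[]> {
  const items: T[] = [];
  for await (const item of iterable) {
    items.push(item);
  }
  return items;
}

collect(employeeIter()).then((employees) => {
  console.log(employees.map((employee) => employee.name).join(", "));
});
```

A `for await` loop also makes it possible to process results one at a time, or stop early, instead of holding the full object set in memory.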

BulkActions

We are deprecating bulkActions and renaming it to batchActions. This was renamed to match the REST API name.

- Before

```typescript
await client.ontology.bulkActions.doAction([...])
```

- After

```typescript
await client.ontology.batchActions.doAction([...])
```

SearchArounds

We are deprecating searchAround{linkApiName} and replacing it with:

- pivotTo(linkApiName): This functions exactly the same as search around, but the name better reflects that the result is of the type of the object you pivoted to.

- Before

```typescript
await client.ontology.objects.testObject.where(...).searchAroundLinkedObject().page()
```

- After

```typescript
await client.ontology.objects.testObject.where(...).pivotTo("linkedObject").fetchPage()
```

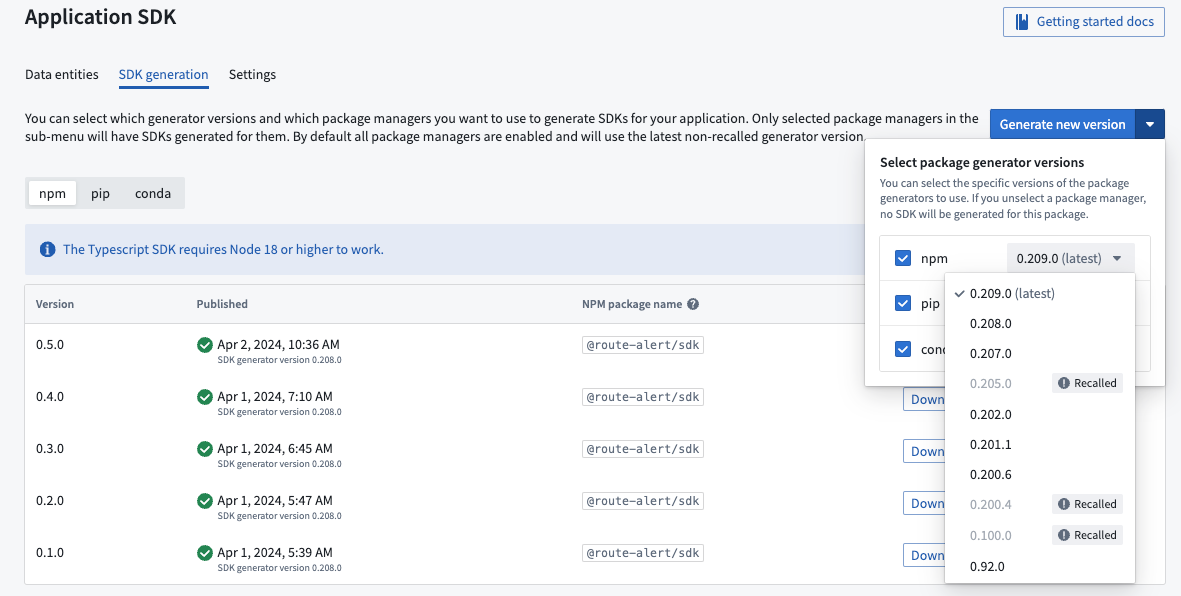

How can I try this out?

After the updated generator version is released, any new SDK version you create for your application using Developer Console will contain these features. Once you update the dependencies in your application, deprecated methods and properties such as searchAroundLinkedObject() will appear with a strikethrough, but the code will still function as before. Version 2.0 will drop support for the deprecated methods.
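TypeScript-aware editors typically cross out a name when its declaration carries the standard `@deprecated` JSDoc tag, which is presumably how the generated SDK marks these members. The sketch below shows the general pattern with a hypothetical class; `ObjectSetSketch` is illustrative and is not the generated OSDK code. The deprecated method keeps working by delegating to its replacement.

```typescript
// Hypothetical class for illustration; not the generated OSDK code.
class ObjectSetSketch {
  constructor(private readonly path: string[] = []) {}

  // Replacement method: records the link that was followed.
  pivotTo(linkApiName: string): ObjectSetSketch {
    return new ObjectSetSketch([...this.path, linkApiName]);
  }

  /** @deprecated Use pivotTo("linkedObject") instead. */
  searchAroundLinkedObject(): ObjectSetSketch {
    return this.pivotTo("linkedObject");
  }

  // Describes the chain of links followed so far.
  describe(): string {
    return this.path.join(" -> ");
  }
}

const viaOld = new ObjectSetSketch().searchAroundLinkedObject().describe();
const viaNew = new ObjectSetSketch().pivotTo("linkedObject").describe();
console.log(viaOld === viaNew); // prints true
```

Because the old name still compiles and behaves the same, call sites can be migrated gradually before the deprecated members are removed.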

You can also choose to use an older version of the generator by selecting it from the dropdown list in the Generate new version option.

What’s coming next?

As a summary of the above, the OSDK TypeScript versions to come are as follows:

Version 1.1: This version maintains the current language syntax but will deprecate a few methods and properties which will no longer be supported.

Version 1.2: With release marked for later this year, version 1.2 will introduce the new language syntax.

Version 2.0: Marks the new language syntax as generally available (GA), drops support for the deprecated methods and properties, and introduces new capabilities that are not yet available.

Time-based group memberships for data access are now generally available

Date published: 2024-04-11

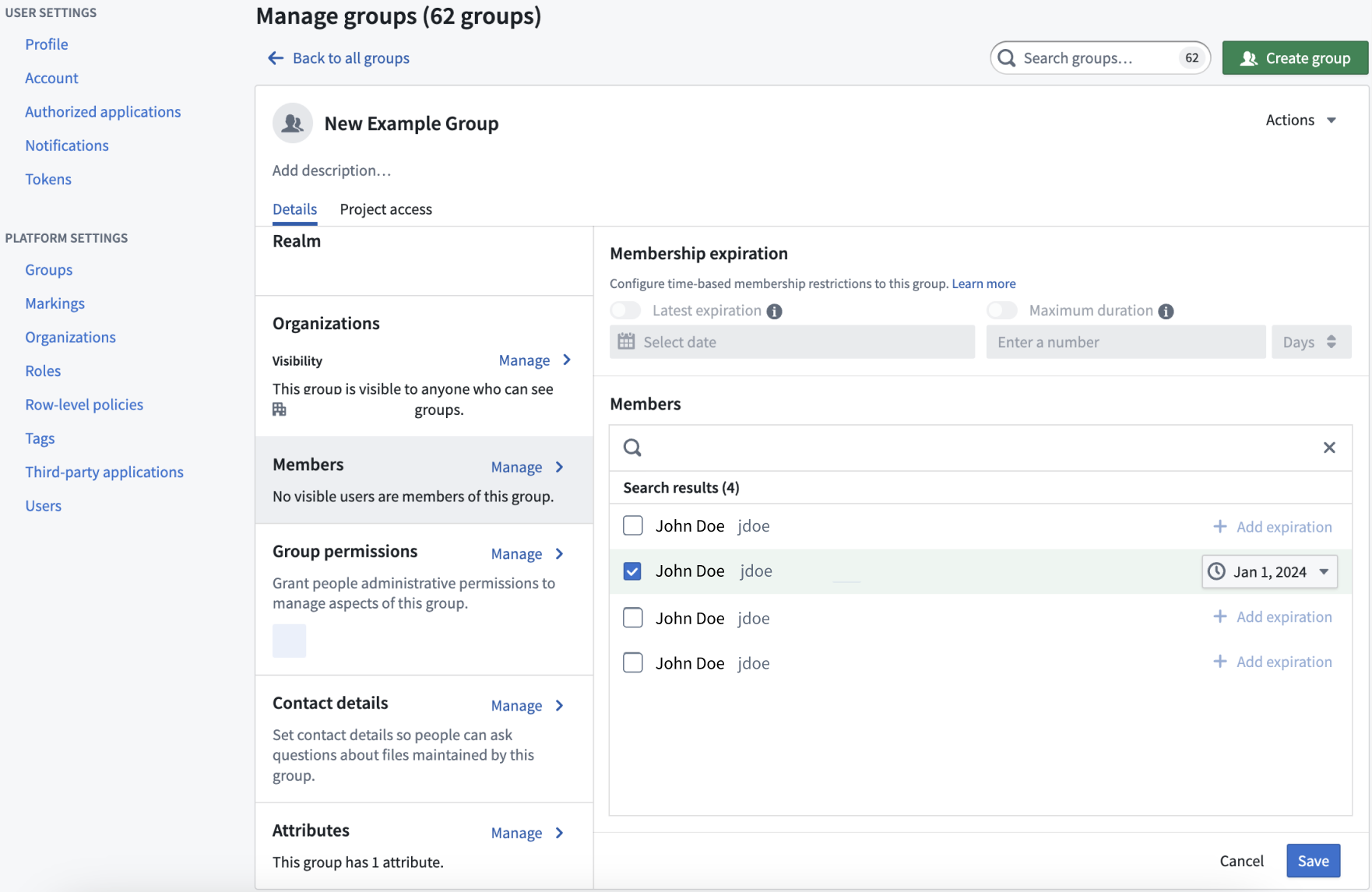

Time-based group memberships are now available as a security feature in the platform. This feature can support data protection principles such as use limitation and purpose specification by limiting user access to data.

Users with Manage membership permissions can now grant group memberships for a given amount of time, allowing temporary access to data based on the set configuration. You can choose to configure access based on latest expiration or maximum duration:

- Latest expiration: All new memberships must have an expiration date that is earlier than this date.

- Maximum duration: All new memberships must expire within the specified duration.

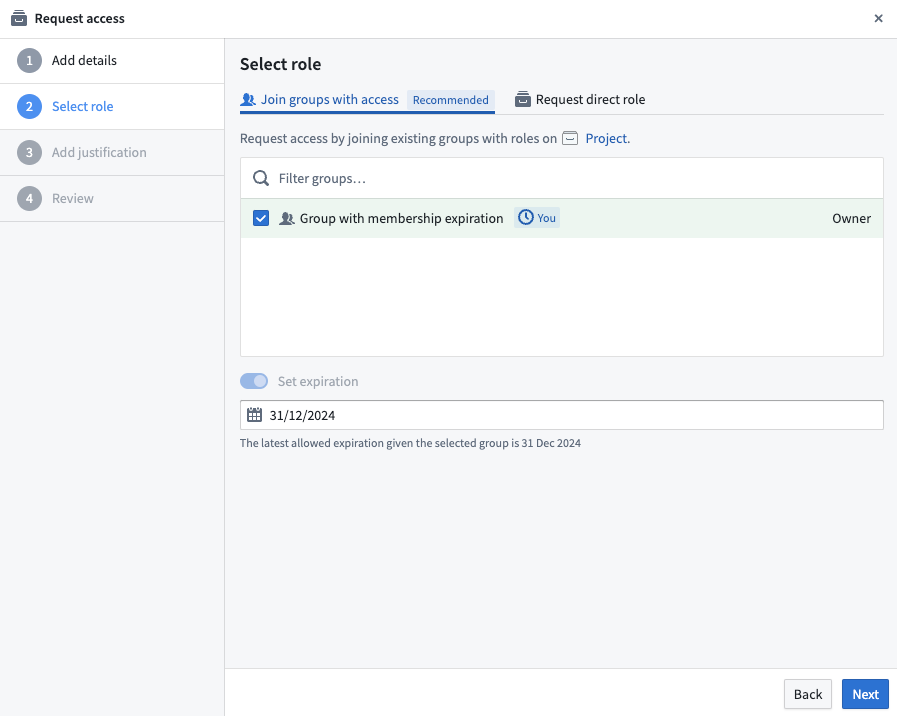

The group settings page with time constraint settings.

Any membership access requests to groups with time constraints applied to them will be bounded by those constraints.

A group membership request with the latest expiration constraint of 31/12/2024.
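The two constraint types can be read as simple checks on a proposed expiration date. The sketch below is a minimal illustration, not the platform's implementation: the `TimeConstraints` shape, its field names, and `isExpirationAllowed` are hypothetical, and it assumes that "earlier than" is a strict comparison and that the maximum duration is measured from the moment the membership is granted.

```typescript
// Hypothetical constraint shape; the field names are illustrative only.
interface TimeConstraints {
  latestExpiration?: Date; // memberships must expire before this date
  maximumDurationMs?: number; // memberships must expire within this long of being granted
}

// Returns true when a proposed expiration satisfies every configured constraint.
function isExpirationAllowed(expiresAt: Date, grantedAt: Date, constraints: TimeConstraints): boolean {
  if (expiresAt.getTime() <= grantedAt.getTime()) {
    return false; // an expiration at or before the grant time gives no access
  }
  if (constraints.latestExpiration !== undefined &&
      expiresAt.getTime() >= constraints.latestExpiration.getTime()) {
    return false; // violates the latest expiration constraint
  }
  if (constraints.maximumDurationMs !== undefined &&
      expiresAt.getTime() - grantedAt.getTime() > constraints.maximumDurationMs) {
    return false; // violates the maximum duration constraint
  }
  return true;
}

const grantedAt = new Date("2024-04-11T00:00:00Z");
const latest: TimeConstraints = { latestExpiration: new Date("2024-12-31T00:00:00Z") };

console.log(isExpirationAllowed(new Date("2024-06-01T00:00:00Z"), grantedAt, latest));
console.log(isExpirationAllowed(new Date("2025-01-15T00:00:00Z"), grantedAt, latest));
```

In this sketch, the first request falls before the latest expiration and is accepted, while the second extends past it and is rejected.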

For more information, review our documentation on how to manage groups.

Introducing an improved Foundry resource sidebar

Date published: 2024-04-11

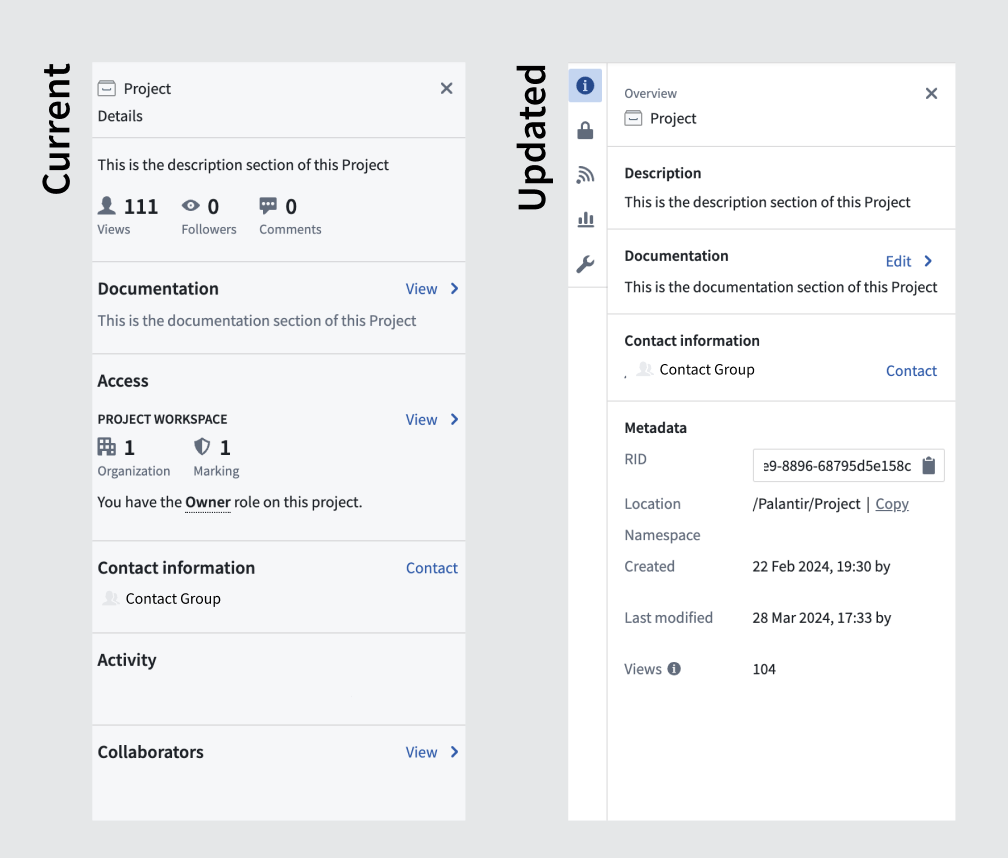

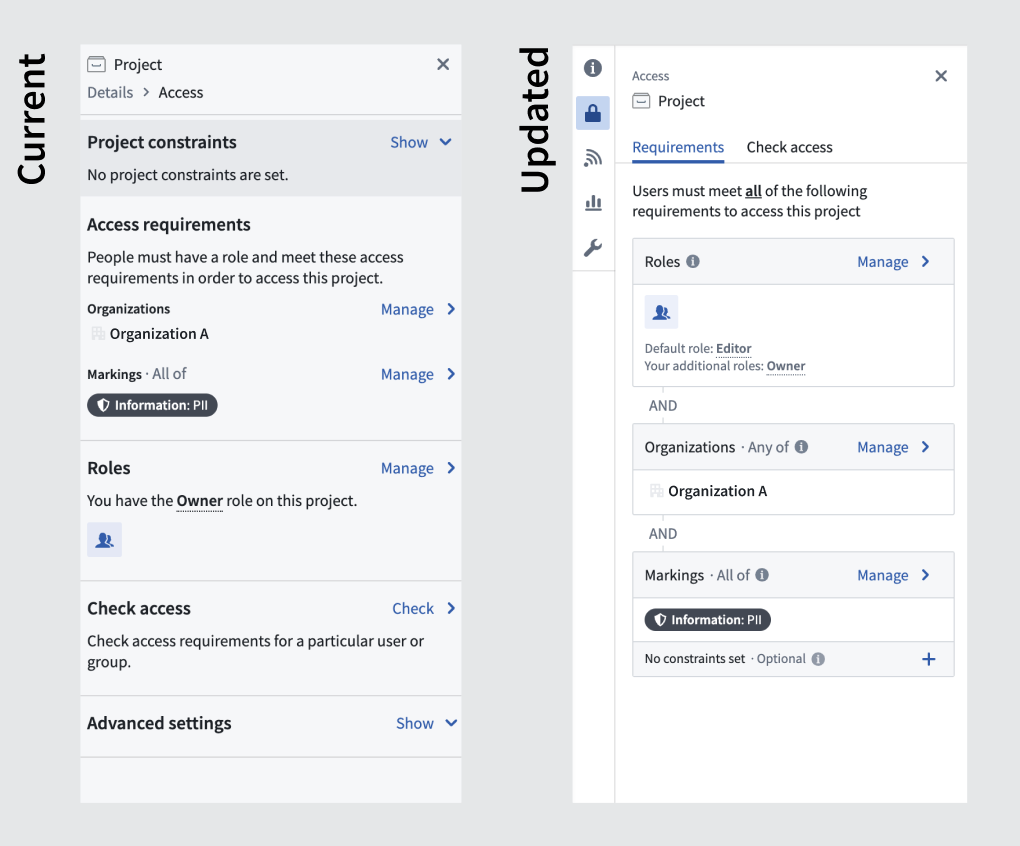

We are happy to announce the launch of our newly redesigned resource sidebar, targeted at streamlining administrative workflows and making them more easily discoverable. You can experience the new visual refresh and organization on the Overview, Access, Activity, Usage, and Settings right-side panels of the resource sidebar in Palantir Foundry.

What is changing?

- Improved navigation: The levels of nesting have been minimized so that all workflows are accessible with fewer clicks, with the aim of boosting productivity.

- Enhanced security model legibility: Security governance features, such as Access requirements, have been revamped to make them more user-friendly and easier to interpret.

- A fresh visual update: Enjoy a more streamlined visual appearance.

An updated interface for the Project overview pane.

An updated appearance of the Project access panel.

The updated resource sidebar retains all existing functionality and continues to support all your current workflows without any disruption.

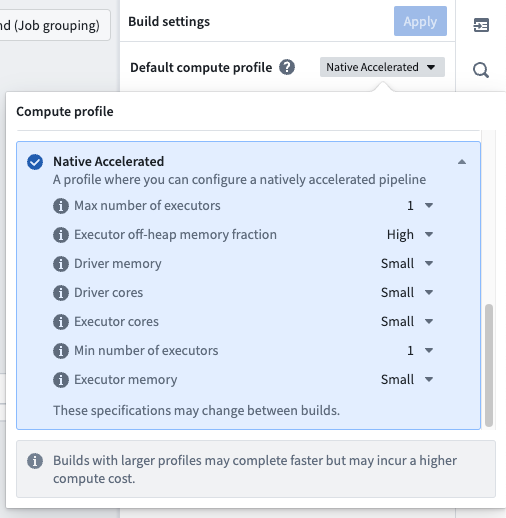

Natively Accelerated Spark in Python Code Repositories

Date published: 2024-04-11

We are excited to introduce Natively Accelerated Spark in Pipeline Builder and Python code repositories, a feature designed to enhance performance and reduce the cost of batch jobs. This feature allows you to leverage low-level hardware optimizations to increase the efficiency of your data pipelines.

Use native acceleration for the following benefits:

- Faster performance: Native acceleration optimizes general SQL functions like select, filter, and partition, significantly improving the speed of your batch jobs.

- Cost reduction: By boosting performance, native acceleration also helps reduce costs associated with batch job execution, making it ideal for those with big data needs or high throughput requirements.

- Detailed implementation: Guidance is available to help you set up and integrate this feature into your workflows.

Usage and Limitations

You can enable native acceleration within Pipeline Builder and Python transforms. Note that repositories that use user-defined functions (UDFs) and resilient distributed dataset (RDD) operations may marginally benefit from this feature as well.

Native acceleration is particularly beneficial for data scientists with big data or latency-critical workflows. If you are running frequent builds (every 5 minutes) or operating in a cost-sensitive environment, native acceleration offers a powerful solution.

To test the benefits of native acceleration, simply run your pipeline once with the feature enabled. If you notice an improvement in performance, keep the feature enabled for the pipeline.

How to enable native acceleration

To leverage the full potential of native acceleration, review the documentation for Natively Accelerated Spark in Pipeline Builder and Python code repositories.

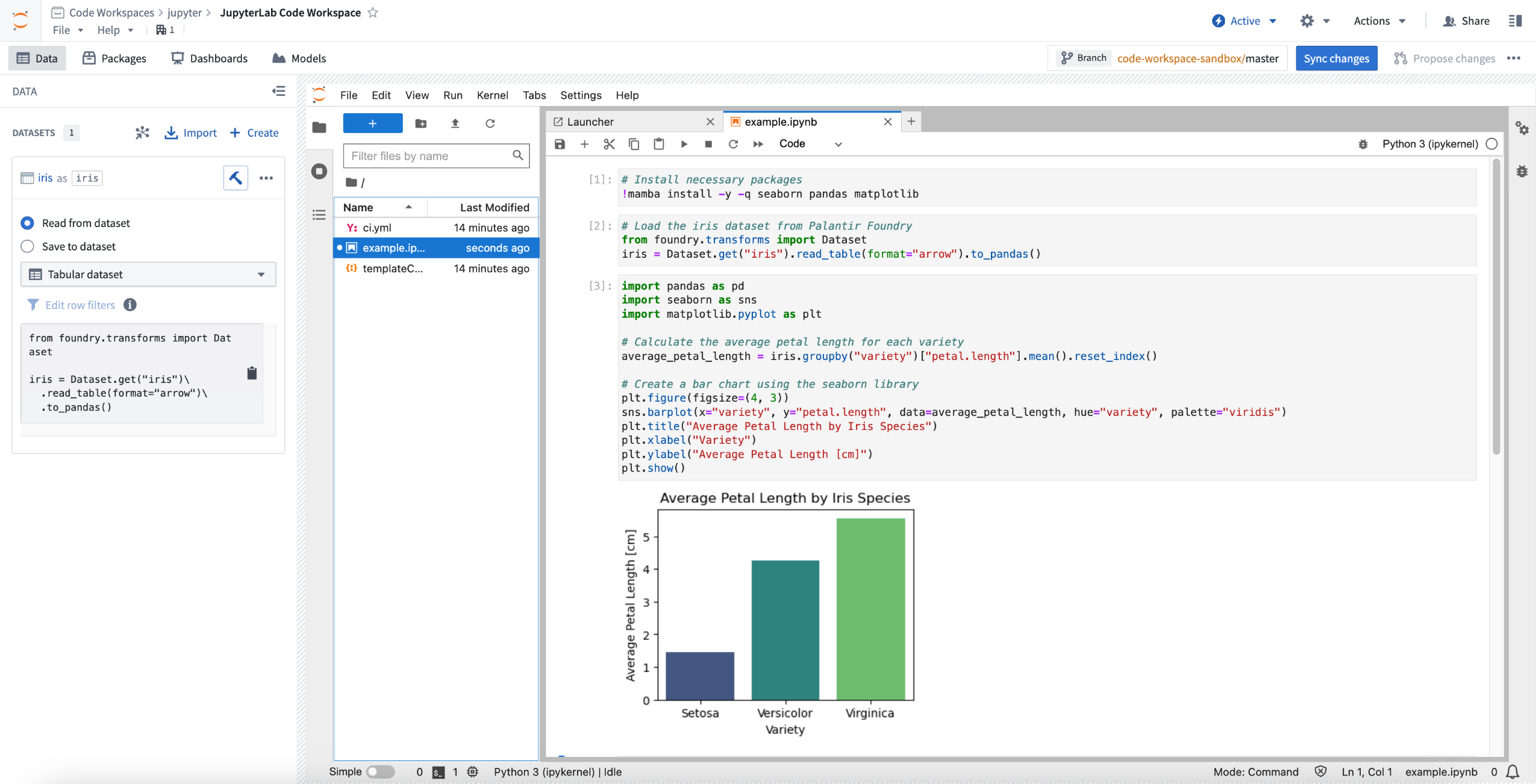

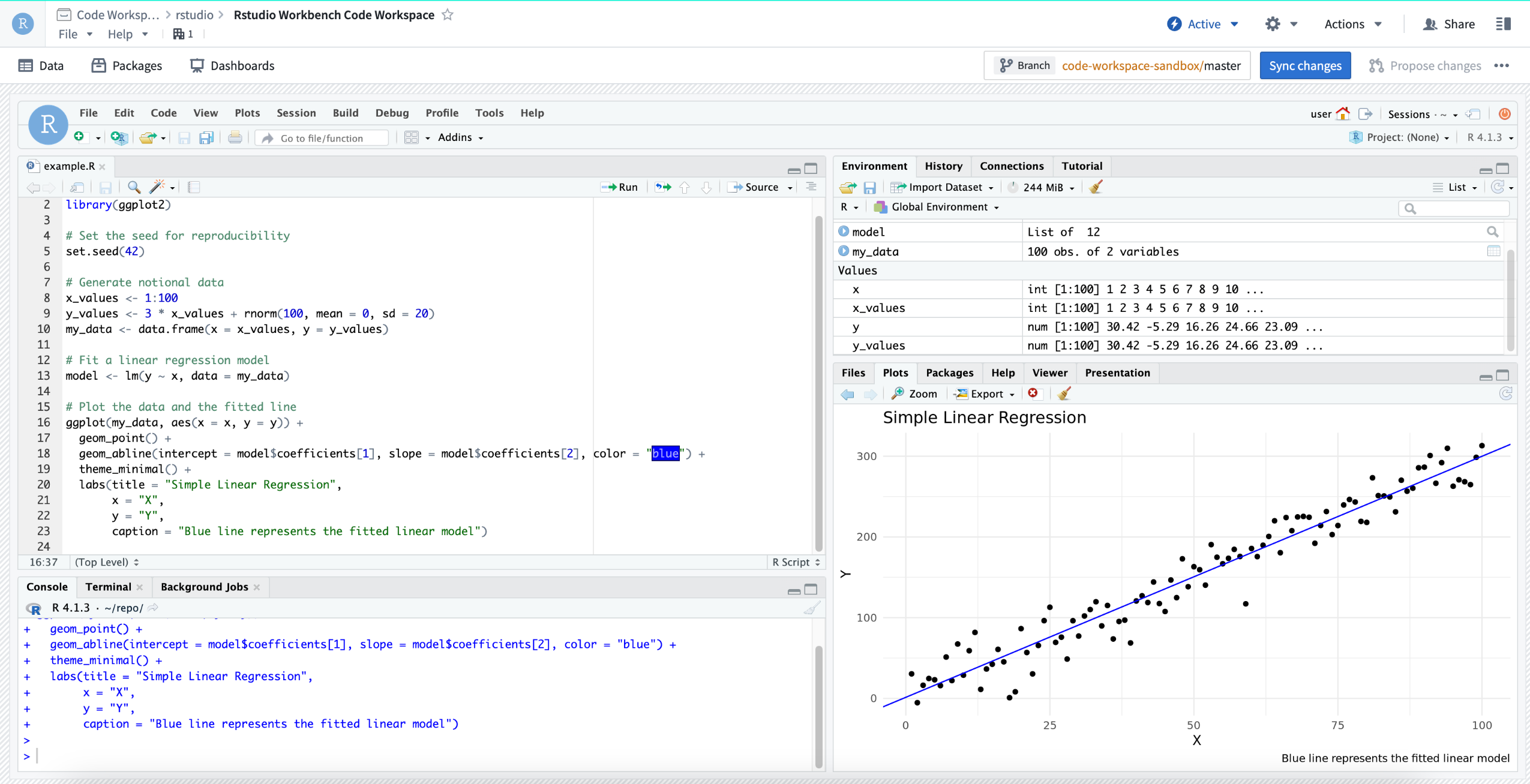

Code Workspaces [GA]: JupyterLab® and RStudio® are now generally available

Date published: 2024-04-09

We created Code Workspaces so users can conduct effective exploratory analyses using widely-known tools while seamlessly leveraging the power of Palantir Foundry. Now generally available, Code Workspaces brings the JupyterLab® and RStudio® third-party IDEs to Foundry, enabling users to boost productivity and accelerate data science and statistics workflows. Code Workspaces serves as the definitive hub within Foundry for conducting comprehensive exploratory analysis by delivering unparalleled speed and flexibility.

Jupyter® Code Workspace used for data science.

Flexible and efficient exploratory analyses

Code Workspaces integrates widely-used tools such as JupyterLab® Notebooks and RStudio® Workbench with the power of the Palantir ecosystem, establishing itself as the go-to place in Foundry for efficient exploratory analysis.

You can now benefit from the following capabilities:

- Use widely adopted data science and machine learning tools and seamlessly integrate RStudio® and Jupyter® on top of your organization's data.

- Load data from any dataset while benefiting from Foundry’s world-class primitives of security, provenance, governance, and data lineage.

- Develop code interactively with write-back, instant feedback loops, previews with cell-by-cell analyses, and REPL terminal commands.

- Transform or generate data, visualization, reports, and insights that can be consumed by the rest of the Palantir platform.

- Efficiently train, optimize, and implement machine learning models before using them in production.

- Fully customize multiple Python and R environments with an interactive, integrated package manager solution supporting Conda, pip, and CRAN.

- Use native Git versioning and collaborative functionality provided by Code Repositories.

- Create interactive Dash, Streamlit®, and Shiny® dashboards to share analyses with peers and drive decision-making.

- Fully customize your compute settings to cater to the scale of data to be processed.

RStudio® Code Workspace used for machine learning training.

When should I use Code Workspaces?

Code Workspaces provides a comprehensive set of tools for performing quick and efficient iterative analysis on your data, seamlessly integrating the best of third-party tools with Palantir's extensive suite of built-in data analytics capabilities. Use it for data visualization, prototyping, report building, and iterative data transformations. Code Repositories and Pipeline Builder can be employed to convert these data transformations into robust production pipelines.

For most workflows, Code Workspaces and other Foundry tools complement each other, with the outputs of any pipeline being accepted as inputs to Code Workspaces analyses, and vice versa. Both Code Repositories and Code Workspaces rely on the same Git version control system. Large R pipelines, model training, or extensive visualization workflows can also be exported to Code Workbook to leverage its distributed Spark architecture.

Real-world use cases that drive impact

The following examples are some of the many real-world use cases that leverage Code Workspaces:

- Large insurance retailers employing Code Workspaces for the fine-tuning of their insurance pricing models.

- A health board using Streamlit dashboards in Code Workspaces to make spatial analysis on multiplex immunofluorescence data accessible to even computationally-novice biologists.

- A retail company using Jupyter® Code Workspaces to conduct exploratory analysis of their sales data and develop forecasting models.

- A pharmaceutical company using RStudio® Code Workspaces to generate completely reproducible lab reports detailing the effectiveness of drug trials.

To learn more about what you can do in Code Workspaces, review the relevant documentation.

What's on the development roadmap?

The following features are currently under active development:

- Code Workspaces transforms: Take the contents of a JupyterLab® Notebook or an RStudio® R script and persist it as a reproducible pipeline, leveraging Palantir’s robust build infrastructure.

- Integration with Palantir-managed models: Load and use models developed elsewhere in Foundry.

- AIP Integration: Call out to any language model supported by AIP directly from Code Workspaces.

- Ontology support: Fully leverage the power of the Ontology, and load objects into Code Workspaces to perform exploratory analysis.

- First-class reporting solution: Turn data analyses into reports that can be shared to inform decision-making across your entire organization.

Jupyter®, JupyterLab®, and the Jupyter® logos are trademarks or registered trademarks of NumFOCUS. RStudio® and Shiny® are trademarks of Posit™. Streamlit® is a trademark of Snowflake Inc.

All third-party referenced trademarks (including logos and icons) remain the property of their respective owners. No affiliation or endorsement is implied.

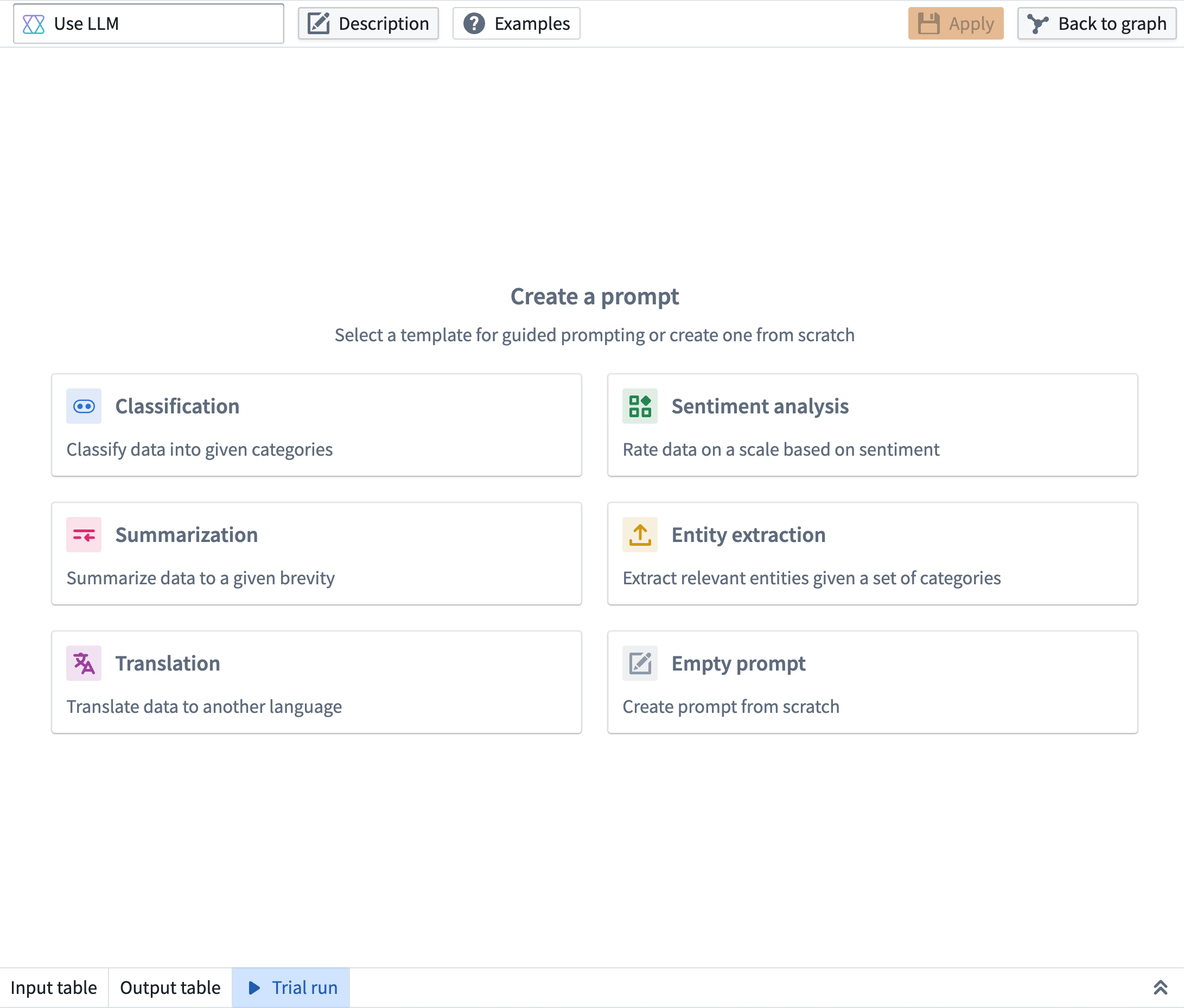

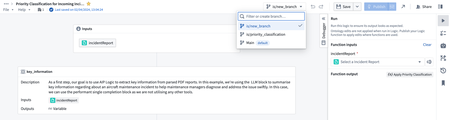

Pipeline Builder now supports running LLMs in your pipeline at scale

Date published: 2024-04-04

We're excited to launch a new cutting-edge feature in Pipeline Builder: the Use LLM node. This feature allows you to effortlessly combine the power of Large Language Models (LLMs) with your production pipelines in a few simple clicks.

Integrating LLM capabilities with production pipelines has traditionally been a complex challenge, often leaving users in search of seamless, scalable solutions for their data processing requirements. Our solution addresses these challenges by providing the following features:

- Production guarantees for LLM processing: Many LLM use cases are based only on foundational data and don't require iterative user input. Save time by running your LLM on your data directly in Pipeline Builder, leveraging the production pipelining features that Pipeline Builder offers, such as branching and build monitoring.

- Preview before deploy on multiple rows: Users can run trials on a few rows of their input data to iterate on their prompt before running a full build. Preview computations are completed in just seconds, greatly accelerating the feedback loop.

- Pre-engineered prompt templates: Users can benefit from prompt engineering expertise provided by our experienced team. These templates provide a warm start, enabling users to quickly initiate their projects.

Five beginner-friendly prompt templates to jumpstart your projects

Pipeline Builder interface showing the addition of five guided prompts.

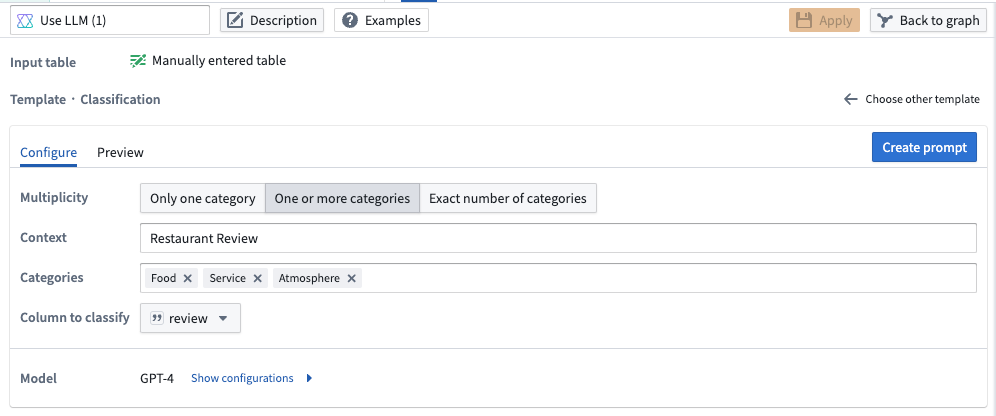

- Classification: Use LLMs to classify your data into given categories. In the example below we are classifying restaurant reviews into three different categories: food, service, and atmosphere. Our model will categorize each review into one or more of those categories.

Pipeline Builder interface showing a prompt to classify restaurant reviews into three different categories.

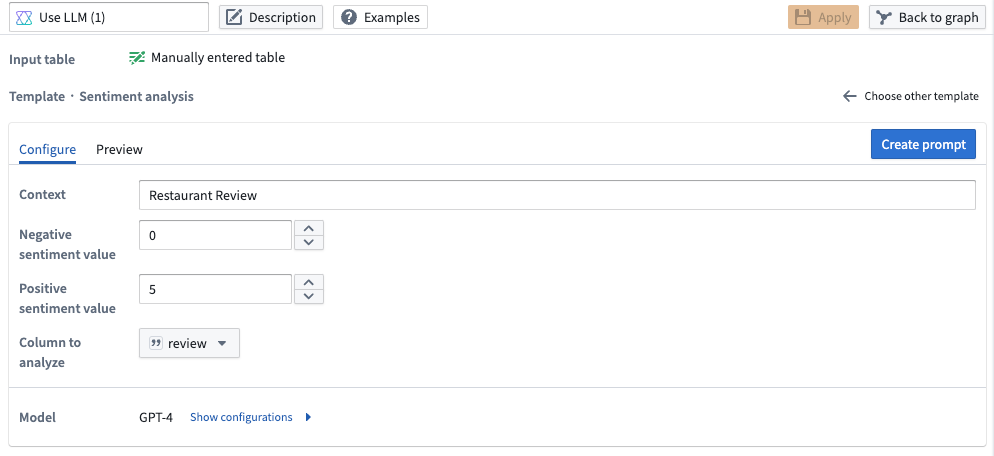

- Sentiment Analysis: Use LLMs to rate your data on a scale based on positive or negative sentiment. In the example below, we rate the positive or negative sentiment of restaurant reviews on a scale of 0 (most negative) to 5 (most positive).

Pipeline Builder interface showing a prompt to rate positive or negative sentiment of restaurant reviews.

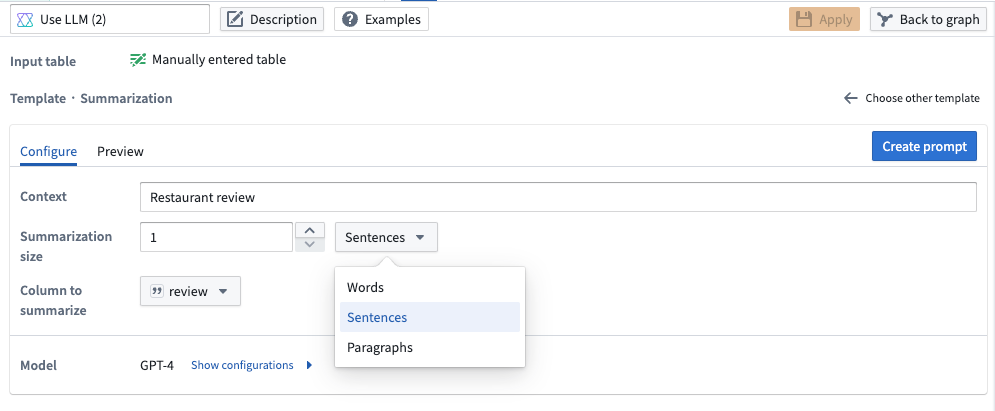

- Summarization: Use LLMs to summarize your data to a given length. In the example below, we are summarizing the restaurant reviews into one sentence.

Pipeline Builder interface showing a prompt to summarize restaurant reviews by length.

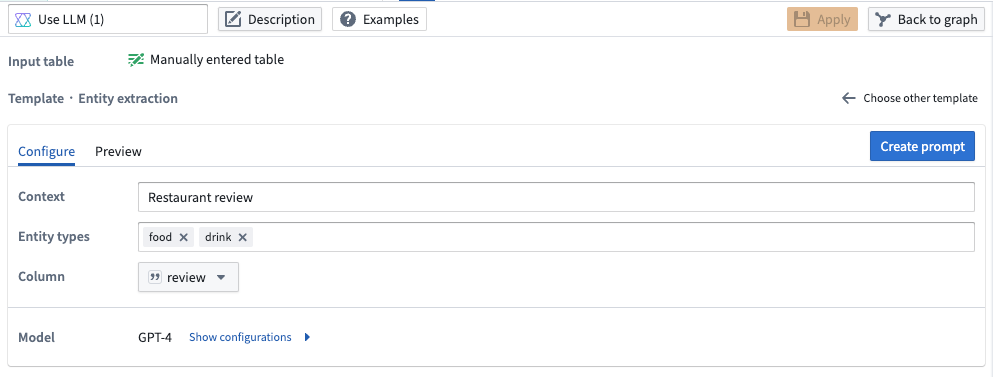

- Entity Extraction: Use LLMs to extract relevant elements given a set of categories. In the example below, we want to extract any food or drink elements in each restaurant review.

Pipeline Builder interface showing a prompt to extract any food or drink elements from each restaurant review.

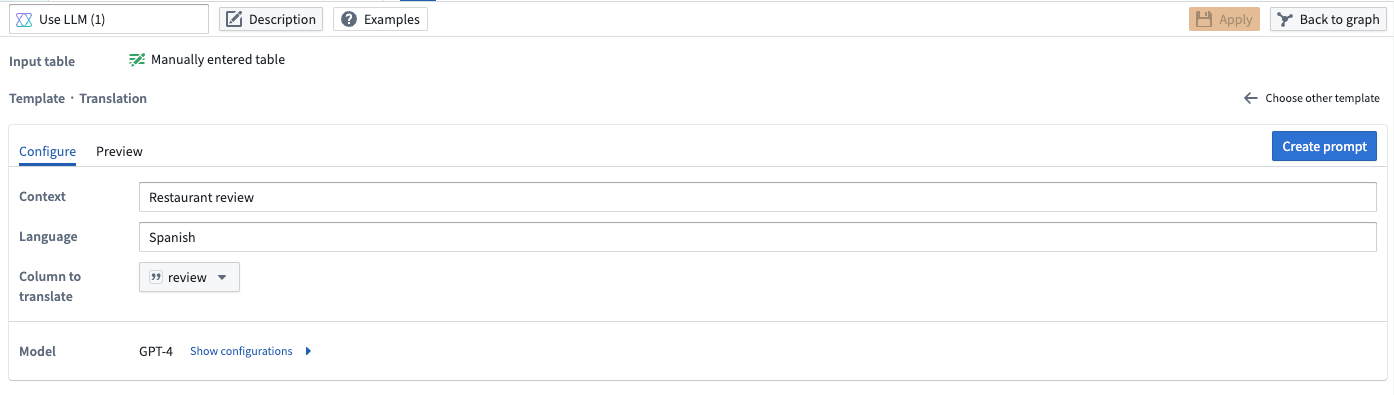

- Translation: Use LLMs to translate your data into another language. In the example below, we are translating the restaurant reviews to Spanish.

Pipeline Builder interface showing a prompt to translate restaurant reviews to Spanish.

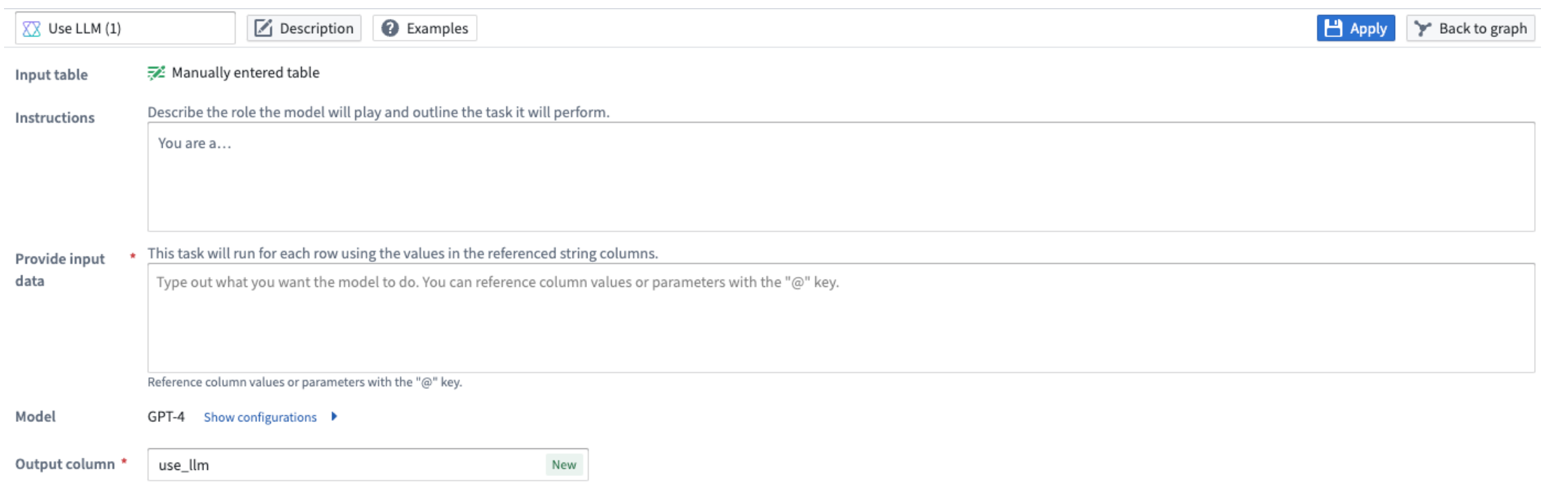
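The templates above are configured in the Pipeline Builder interface without code. For readers who want a concrete picture of what a classification prompt involves, the following sketch builds a prompt for the restaurant review example and validates a model's reply against the allowed categories. It is a conceptual illustration only: `buildClassificationPrompt`, `parseCategories`, the prompt wording, and the comma-separated response format are all assumptions made for this example, and no model is called.

```typescript
// Categories from the restaurant review example above.
const categories = ["food", "service", "atmosphere"] as const;
type Category = (typeof categories)[number];

// Builds a prompt asking for one or more of the allowed categories.
function buildClassificationPrompt(review: string): string {
  return [
    `Classify the restaurant review into one or more of these categories: ${categories.join(", ")}.`,
    "Respond with a comma-separated list of categories only.",
    `Review: ${review}`,
  ].join("\n");
}

// Keeps only recognized categories from a raw model response, without duplicates.
function parseCategories(response: string): Category[] {
  return response
    .split(",")
    .map((part) => part.trim().toLowerCase())
    .filter((part): part is Category => (categories as readonly string[]).indexOf(part) !== -1)
    .filter((category, index, all) => all.indexOf(category) === index);
}

console.log(buildClassificationPrompt("Great pasta, but the waiter was slow."));
console.log(parseCategories("Food, service, parking, food"));
```

Filtering the reply against a fixed list is one way to keep unexpected values, such as "parking" in this example, from reaching downstream structured columns.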

Customize your own LLM prompt

Tailor the model to your specific requirements by creating your own LLM prompt.

Pipeline Builder interface showing a custom prompt.

See our documentation for more on using the new LLM node in Pipeline Builder.

Introducing the Workshop module interface

Date published: 2024-04-02

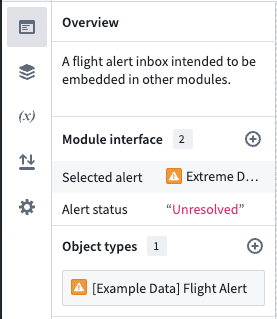

Workshop has rebranded "promoted variables" as "module interface." The module interface is the set of variables that are able to be mapped to variables from a parent module when embedded, and initialized from the URL. You can think of the module interface as the API for a Workshop module.

To add a variable to the module interface, navigate to the Settings panel for a variable, add an external ID, and make sure the toggle for module interface is enabled. Optionally, you can give a module interface variable a display name and description, which will be shown when the module is embedded or used in an Open Workshop module event.

The transition from promoted variables to the module interface entails minimal functionality changes, which are detailed below.

Preview module interface values

The Workshop module Overview tab now provides a comprehensive overview of the module interface, along with a new ability to set a static override preview value for interface variables.

New module interface overview section in the overview panel of the Workshop editor.

Variable settings panel

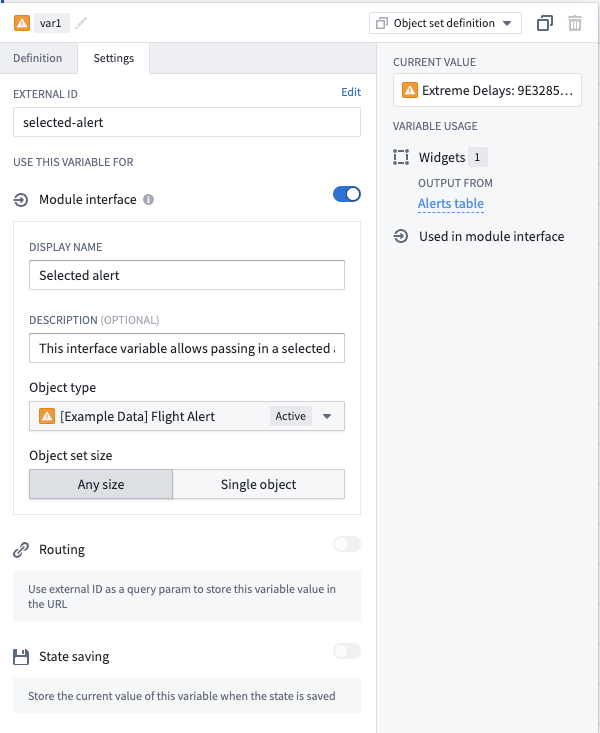

Variables can be added to the module interface using the newly added variable settings panel. The settings panel provides the ability to set a display name and description for module interface variables, offering an improved experience when configuring variables to pass through the module interface from another module, such as when embedding a module or configuring an open Workshop module event. Moreover, the state saving and routing features can now be independently enabled, offering greater flexibility and control over the module interface.

The variable settings panel, featuring an object set variable configured for use with the module interface.

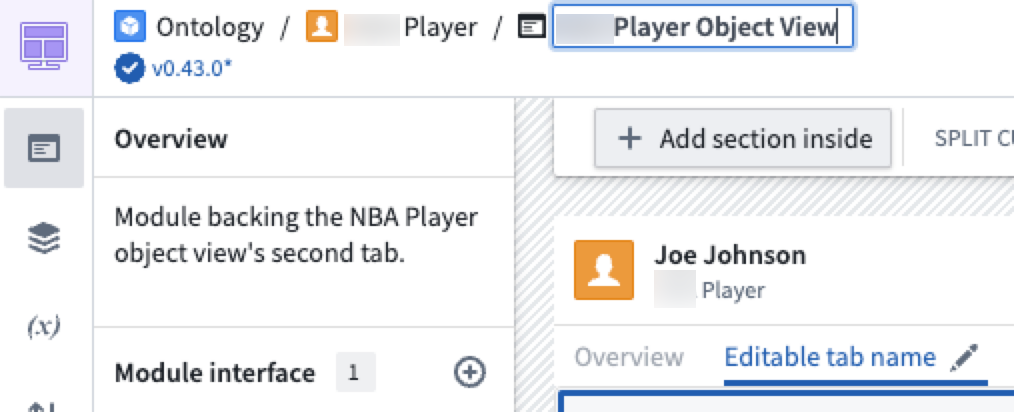

New object view tabs are now built solely in Workshop

Date published: 2024-04-02

Workshop is now the sole application builder for content in new tabs in object views. While existing Object View tabs built using the legacy application builder are still supported, you can no longer create new tabs of this type.

Building with Workshop means more flexible layout options, more powerful widgets and visualizations, and configurable data edits via actions. Creating a new tab will now automatically generate a new Workshop module to back the tab. When a new object type is created, Workshop also backs a cleaner default object view which contains a single tab displaying the object's properties and links.

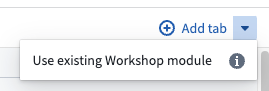

You can also embed existing modules as object views by using the ▼ dropdown menu next to Add tab.

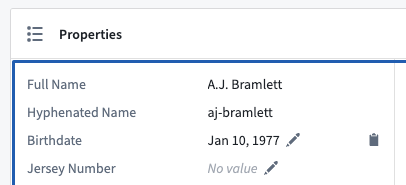

Recent improvements to the Workshop object view building experience include the following:

- Improvements to the Property List widget, including inline editing support, one-click value copying, time-dependent property support, and value wrapping.

Inline editing and one-click value copying.

Time-dependent properties.

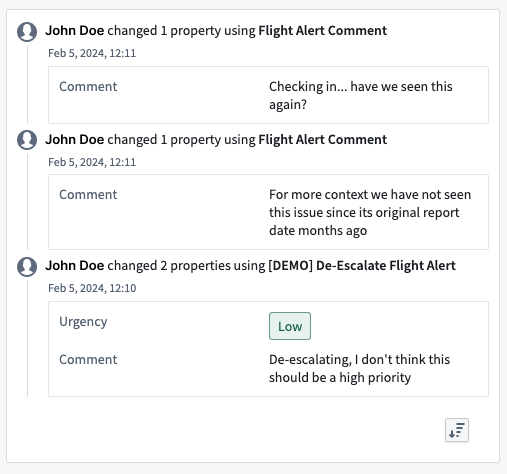

- A new Object Edits History widget for object types using Object Storage V1.

Object edits history at a glance.

- The ability to rename object view modules and publish the object views directly in Workshop.

Editable module name, and editable object view tab name from within Workshop.

Review the Configure tabs documentation to learn more about editing object views.

Additional highlights

Administration | Upgrade Assistant

Listing Maintenance Operator contact information in Upgrade Assistant and related resources | We are working to give maintenance operators and end users a seamless, efficient experience when performing required upgrades on Palantir Foundry. As a first step, the maintenance operator role will be more visible across the platform. Users will be able to find their Organization's maintenance operator listed in Upgrade Assistant, in notifications related to upgrades, and in other relevant areas. This makes it easy to identify the maintenance operator when an upgrade requires action, and to contact the operator promptly when clarification or guidance is needed.

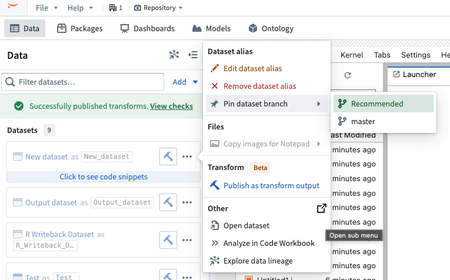

Analytics | Code Workspaces

Branch pinning for dataset aliases | Dataset alias mappings in Code Workspaces can now be pinned to a specific branch, allowing you to use data from the exact branch of a dataset. As part of this change, it is now also possible to import the same dataset more than once so that you can use data from multiple branches of the same dataset. To use this feature, open the context menu for an imported dataset and select Pin dataset branch. Select a specific branch to pin, or select Recommended to restore the default behavior.

Analytics | Notepad

Seamless Media Set Link Transformation in Notepad | Embedding a media set link into Notepad now intuitively transforms it into a resource link, enhancing the ease of connecting to media sets.

Analytics | Quiver

Autocomplete match conditions for object-derived datasets in the Materialization join editor | The Materialization join editor now provides autocomplete functionality for datasets derived from object sets. If both the left and right sides of the join are converted object sets, the editor will attempt to autocomplete the join condition with the primary and foreign key columns. The autocomplete logic runs whenever either side is changed; if either side is not an object set, the configuration is left unchanged. If both sides are object sets but their object types have no link, the match condition will be undefined. Note that the inputs must be directly converted object sets; an object set that has previously been converted to a materialization will not be detected.
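The decision rules described above can be sketched in a few lines of Python. This is only an illustration of the stated behavior, not Quiver's implementation: `JoinInput`, `LINKS`, `UNCHANGED`, and `autocomplete_match` are hypothetical names, and the restaurant and review object types are borrowed from the examples earlier in these notes.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple, Union

@dataclass(frozen=True)
class JoinInput:
    """Hypothetical stand-in for one side of a Materialization join."""
    name: str
    # None means the input is not a directly converted object set
    # (for example, a plain dataset or an earlier materialization).
    object_type: Optional[str] = None

# Hypothetical link registry:
# (left object type, right object type) -> (left key column, right key column)
LINKS: Dict[Tuple[str, str], Tuple[str, str]] = {
    ("Restaurant", "Review"): ("restaurant_id", "restaurant_id"),
}

UNCHANGED = "unchanged"  # sentinel: leave the existing configuration alone

def autocomplete_match(
    left: JoinInput,
    right: JoinInput,
    links: Dict[Tuple[str, str], Tuple[str, str]] = LINKS,
) -> Union[str, None, Tuple[str, str]]:
    """Return the suggested key columns, None (undefined), or UNCHANGED."""
    if left.object_type is None or right.object_type is None:
        # At least one side is not an object set: do not touch the configuration.
        return UNCHANGED
    # Both sides are object sets: use the link if one exists, else undefined.
    return links.get((left.object_type, right.object_type))
```

In this sketch, a previously materialized object set is modeled the same way as a plain dataset (`object_type=None`), which mirrors the note that such inputs are not detected.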

App Building | Ontology SDK

Refined Ontology SDK Resource Handling | The Ontology SDK now offers improved mechanisms for managing application resources, ensuring dependencies are automatically accounted for when resources are added or removed. A new alert feature cautions developers about potential issues when excluding resources, providing an option to preserve them and prevent application disruptions. This enhancement promotes a seamless transition across SDK updates and simplifies the removal of outdated resources through the OAuth & Scopes interface.

App Building | Workshop

Gantt Chart widgets now support more timing options | The Gantt Chart widget has been upgraded to support custom timings backed by functions on objects (FoO) to provide more flexibility in visualizing Gantt charts. Previously, Gantt Chart widgets only supported properties for determining event timing.

Expanded Workshop Conversion Capabilities | Workshop now supports additional data type conversions in Variable Transforms, including Date to Timestamp, Timestamp to Date, and String to Number, enabling more flexibility in data manipulation.
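For readers unfamiliar with these conversions, the following Python sketch shows what each one means conceptually. It does not reflect how Workshop implements Variable Transforms; in particular, the choice of midnight UTC for Date to Timestamp and the parsing rules for String to Number are assumptions made for illustration.

```python
from datetime import date, datetime, time, timezone

def date_to_timestamp(d: date) -> datetime:
    """Date -> Timestamp. Assumption: the date maps to midnight UTC."""
    return datetime.combine(d, time.min, tzinfo=timezone.utc)

def timestamp_to_date(ts: datetime) -> date:
    """Timestamp -> Date. Assumption: the calendar date is taken in UTC.

    The input is expected to be timezone-aware.
    """
    return ts.astimezone(timezone.utc).date()

def string_to_number(s: str) -> float:
    """String -> Number. Assumption: plain decimal text; raises ValueError otherwise."""
    return float(s.strip())
```

Time zone handling is the main design question for the two date conversions: a timestamp near midnight can fall on different calendar dates depending on the zone used.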

Enable column renames on export button events | Workshop now offers the ability to personalize property column names when setting up exports to CSV or clipboard formats. This update introduces a new level of customization for organizing and labeling exported data.

Data Integration | Code Repositories

Data Integration | Pipeline Builder

Accelerated Batch Processing in Pipeline Builder | Pipeline Builder now supports natively accelerated pipelines, offering significant performance improvements for batch processing. Refer to the updated documentation for details on managing native acceleration.

Improved Dataset and Time Series Labeling in Pipeline Builder | In Pipeline Builder, users can now rename dataset inputs and constructed dataset outputs directly from the graph's context menu. Constructed time series synchronizations can be renamed from the same context menu.

Dev Ops | Marketplace

Customizable Thumbnails for Marketplace Listings | The Marketplace has been enhanced to support customizable thumbnails for each product version within the DevOps application, ensuring a more visually appealing presentation on the Marketplace Discovery page. Should a thumbnail not be provided, a default image corresponding to the product category will be displayed instead.

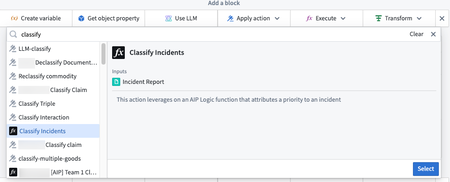

Model Integration | AIP Logic

Introducing Branching in AIP Logic | AIP Logic now supports branching, which is a more efficient way to collaboratively manage edits. Users can now create a new branch, save changes, and merge those changes into the main version when they are done iterating.

Precise Parameter Control with New Apply Action Block | The new Apply Action block in AIP Logic enables users to invoke actions with exact parameter configurations without going through an LLM block. This block gives you precise control over how parameters are filled out and speeds up execution.

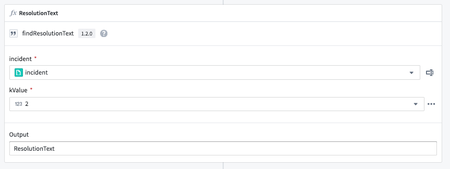

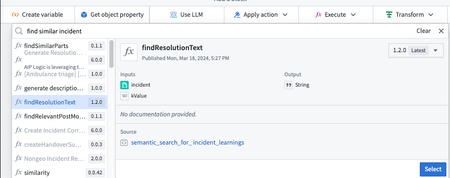

Streamlined Function Execution in AIP Logic | Users can now deterministically use functions within AIP Logic through the Execute block. This enhancement allows for precise data loading, which can be passed to an LLM block or used alongside the Transforms block. For example, users can leverage the output from a semantic search function through the Execute block to facilitate the retrieval of resolution text from similar incidents.

Model Integration | Modeling

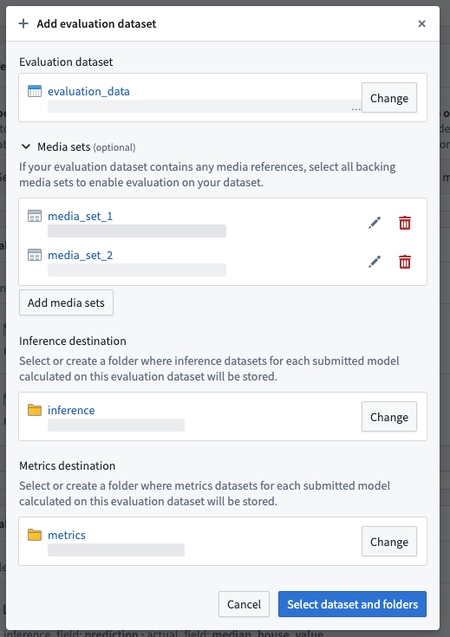

Streamlined Evaluation Setup with Direct Media Set Integration | Users can now directly integrate media sets into the evaluation configuration workflow in Modeling. The new media set integration feature automatically presents available options when selecting a dataset, simplifying the evaluation setup process and ensuring the correct media sets are easily included.

Ontology | Object Storage V2

Enhanced Indexing Prioritization in Object Storage V2 | Enhanced queuing logic in Object Storage V2 now ensures more efficient handling of indexing tasks, particularly for large shards, leading to reduced wait times for metadata updates and an overall boost in system performance.

Ontology | Ontology Management

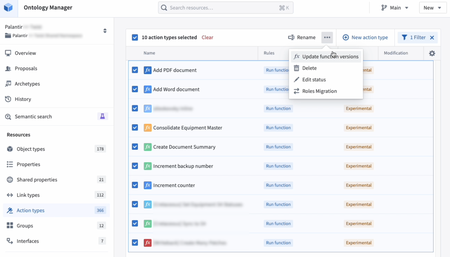

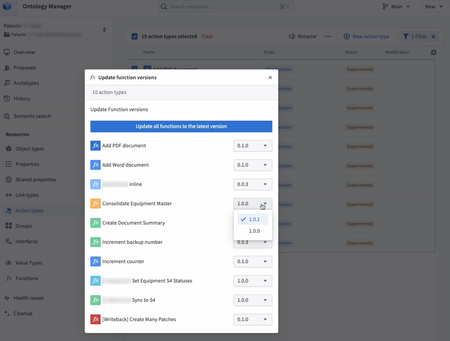

Update your action types to the latest function version in Ontology Manager | You can now bulk update your function-backed actions to use the latest version of the backing function. This feature is accessible from the Action Types tab in Ontology Manager. Filter the set of action types down to those with the Run function rule, select the action types to update, and then select Update function version.

Enhanced Bulk Function Version Management in Ontology Manager | Ontology Manager introduces enhanced capabilities for managing multiple function versions simultaneously. Users can now efficiently update function version references in bulk for a variety of action types, ensuring up-to-date functionality across the board. To optimize server performance, updates are capped at 50 action types per operation. Note, action types linked to high-scale functions are not eligible for bulk updates.
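If you have more than 50 action types to update, the cap means the work must be split across several operations. The sketch below shows one way to plan those operations. It is a planning aid only and does not call any Foundry API; `ActionType`, its `high_scale` flag, and `plan_bulk_updates` are hypothetical names used for illustration.

```python
from dataclasses import dataclass
from typing import List, Sequence

MAX_PER_OPERATION = 50  # cap stated in the announcement above

@dataclass(frozen=True)
class ActionType:
    """Hypothetical record describing a function-backed action type."""
    api_name: str
    high_scale: bool = False  # backed by a high-scale function

def plan_bulk_updates(
    action_types: Sequence[ActionType],
    batch_size: int = MAX_PER_OPERATION,
) -> List[List[ActionType]]:
    """Drop ineligible action types, then split the rest into capped batches."""
    # Action types linked to high-scale functions cannot be bulk updated.
    eligible = [a for a in action_types if not a.high_scale]
    return [
        eligible[i:i + batch_size]
        for i in range(0, len(eligible), batch_size)
    ]
```

For example, 120 selected action types of which 5 are backed by high-scale functions would yield three operations of 50, 50, and 15 action types; the 5 excluded ones would need to be updated individually.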

Improved Alias Creation in Ontology Manager | Ontology Manager now makes it easier to create aliases (alternative names) for object types, so that users can locate objects even when they do not know the precise name.

Ontology | Vertex

Streamlined Node Operations in Vertex Diagrams | Vertex diagrams now offer enhanced node management, enabling users to directly modify the backing object of a node within the diagram interface. The functionality for adjusting the backing object of edges has also been improved, now accessible via the component root editor for more intuitive use.

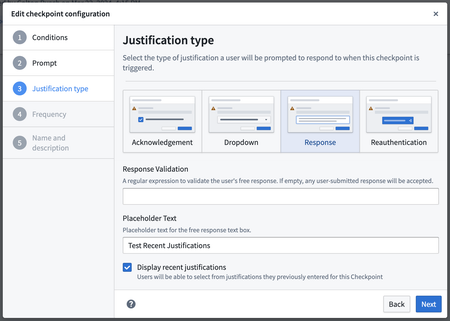

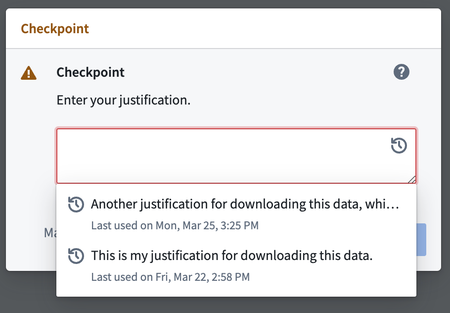

Security | Checkpoints

Streamlined Justification Retrieval for Checkpoints | Users can now swiftly access their top five most recent justifications for text-based prompts within Checkpoints, enhancing efficiency in adhering to organizational standards. This convenience is extended to both Response and Dropdown Checkpoints justification categories and remains available for 30 days post-justification creation. To activate this feature, administrators may select the corresponding setting in the Checkpoints configuration. For further details, refer to documentation on configuring recent justifications.
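The selection rule described above (the five most recent justifications, kept for 30 days) can be illustrated with a short Python sketch. This is not the Checkpoints implementation; `recent_justifications` and the `(timestamp, text)` history format are hypothetical, and whether duplicates are collapsed is not stated in the announcement, so the sketch leaves them in.

```python
from datetime import datetime, timedelta
from typing import List, Tuple

RETENTION = timedelta(days=30)  # availability window stated above
MAX_SUGGESTIONS = 5             # "top five most recent"

def recent_justifications(
    history: List[Tuple[datetime, str]],
    now: datetime,
) -> List[str]:
    """Return up to five justification texts from the last 30 days, newest first."""
    cutoff = now - RETENTION
    # Keep only entries still inside the retention window.
    fresh = [(ts, text) for ts, text in history if ts >= cutoff]
    # Newest first, then truncate to the suggestion limit.
    fresh.sort(key=lambda item: item[0], reverse=True)
    return [text for _, text in fresh[:MAX_SUGGESTIONS]]
```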

Introduction of New Checkpoint Varieties: Schedule Delete and Run | Users now have access to additional checkpoint types: Schedule, Delete, and Run. These new options enhance the flexibility and efficiency of managing checkpoint timetables, contributing to more streamlined workflows.

Security | Projects

General Availability of Project Constraints | Project constraints add advanced governance guardrails on how data can be used. With Project constraints, owners can configure which markings may or may not be applied to files within a Project. This enables the technical implementation of data protection principles such as purpose limitation.

Project constraints are typically used to prevent users from accidentally joining data that should not be joined. They are relevant in situations where users need access to multiple markings, but specific combinations of marked data should not be allowed. For example, a bank might have a compliance requirement that sensitive investment data can never be joined with research data. However, compliance officers may still need access to both investment and research data separately to do their work.
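The bank example can be made concrete with a small Python sketch. It models a Project's constraints as an optional allow-list plus a set of prohibited markings and checks a file's markings against them. This is an illustrative model, not the platform's data model or API: `ProjectConstraints`, `violations`, and the marking names are hypothetical, and the sketch assumes that a dataset produced by a join carries the markings of both inputs.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, Optional, Set

@dataclass(frozen=True)
class ProjectConstraints:
    """Hypothetical model of the markings a Project accepts on its files."""
    # None means no allow-list is configured.
    allowed: Optional[FrozenSet[str]] = None
    prohibited: FrozenSet[str] = frozenset()

def violations(file_markings: Iterable[str], constraints: ProjectConstraints) -> Set[str]:
    """Return the markings on a file that the Project's constraints reject."""
    markings = set(file_markings)
    # Any explicitly prohibited marking is a violation.
    bad = markings & constraints.prohibited
    if constraints.allowed is not None:
        # With an allow-list, anything outside it is also a violation.
        bad |= markings - constraints.allowed
    return bad

# The investment Project prohibits the (hypothetical) "research" marking.
investment_project = ProjectConstraints(prohibited=frozenset({"research"}))

# Assumption: a joined dataset carries the markings of both inputs.
joined_markings = {"investment", "research"}
```

Here `violations(joined_markings, investment_project)` returns `{"research"}`, so the joined output could not be stored in the investment Project, while a compliance officer holding both markings could still read each source dataset in its own Project.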

For more information, see the Project constraints documentation, which includes details on how to configure them.