
27 - Scheduling Writeback Dataset Builds

This content is also available at learn.palantir.com and is presented here for accessibility purposes.

📖 Task Introduction

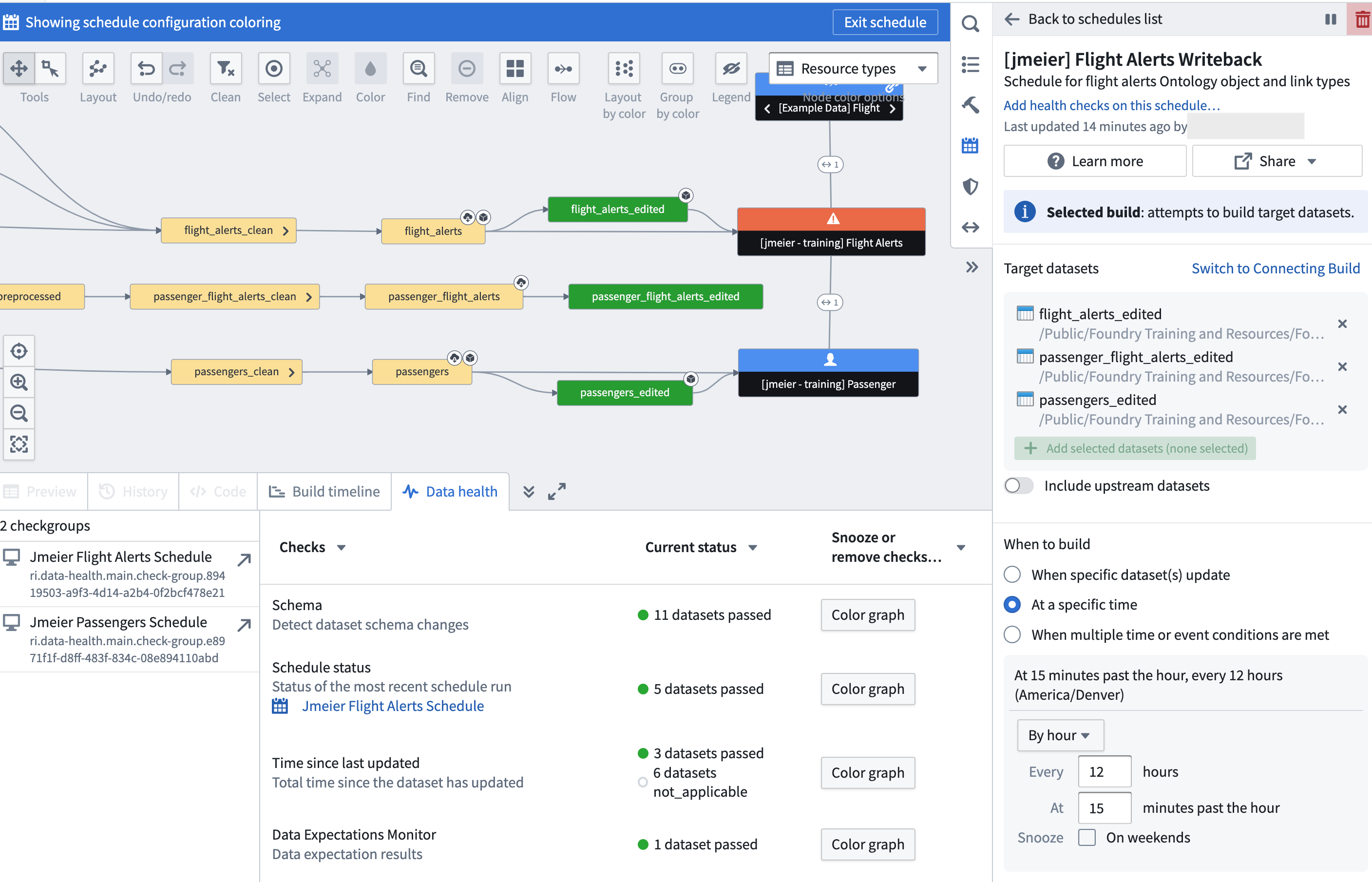

Edits entered into front-end Ontology-aware applications (e.g., apps you build in Workshop, Slate, Object Views, and Quiver) are stored in the object storage service and are written into the writeback dataset only when that dataset is built. As a data engineer, you'll therefore work with your team to decide how often the writeback dataset should be built and set up a monitored schedule to keep the data fresh.
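To make that timing concrete, here is a toy Python model of the behavior described above: an edit is recorded as soon as a user makes it, but it only appears in the writeback dataset after a build, which (in this model) copies the backing data and applies the stored edits on top. This is a conceptual sketch, not how Foundry is implemented; the class, its fields and methods (`ObjectTypeModel`, `edit`, `build_writeback`), and the sample data are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ObjectTypeModel:
    """Toy model of one object type (hypothetical, not a Foundry API).

    backing:   rows from the backing dataset, keyed by primary key
    edits:     user edits recorded by apps (stand-in for object storage)
    writeback: the most recently built writeback dataset
    """

    backing: dict
    edits: dict = field(default_factory=dict)
    writeback: dict = field(default_factory=dict)

    def edit(self, pk: str, changes: dict) -> None:
        # Edits are recorded immediately...
        self.edits.setdefault(pk, {}).update(changes)

    def build_writeback(self) -> None:
        # ...but only reach the writeback dataset when it is built:
        # copy the backing rows, then apply every stored edit on top.
        snapshot = {pk: dict(row) for pk, row in self.backing.items()}
        for pk, changes in self.edits.items():
            snapshot.setdefault(pk, {}).update(changes)
        self.writeback = snapshot


alerts = ObjectTypeModel(backing={"A1": {"status": "open"}})
alerts.edit("A1", {"status": "resolved"})
print(alerts.writeback)  # {}  (edit recorded, not yet built)
alerts.build_writeback()
print(alerts.writeback)  # {'A1': {'status': 'resolved'}}
```

Because the writeback dataset only changes when it is built, downstream consumers see edits no sooner than the next build, which is why the build schedule matters.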

🔨 Task Instructions

- Open your Data Lineage graph in /Ontology Project: Flight Alerts/documentation/.

- Mouse over your two object type nodes and click the < icon that now appears on each, indicating a new linked dataset. This will bring your writeback datasets to the graph.

- Expand the new linked node on your passenger_flight_alerts dataset node. This will bring the writeback dataset for your link type to the graph, as shown in the image.

- Let's assume your use case calls for a twice-daily build of these writeback datasets, based on the expected frequency of edits made through Ontology-aware apps and the need for up-to-date data downstream of these writeback datasets for analysis.

- Highlight all three writeback datasets on the graph, and click the Manage Schedules icon in the right-hand panel of Data Lineage.

- Click the blue Create new schedule button.

- Call your schedule [yourName] Flight Alerts Writeback and provide a brief description: "Schedule for flight alerts Ontology object and link types."

- Notice that your writeback datasets are set as targets of the build.

- In the When to build section, select At a specific time.

- Set the schedule to run By hour every 12 hours at 15 minutes past the hour (for example, to avoid an anticipated rush of builds across your organization at the top of each hour).

- In the Advanced options section, choose to Abort build on failure and set the job to retry twice with one minute between retries.

- Install a Time Since Last Updated check on each of the three writeback datasets that confirms they've been updated in the last 13 hours (your schedule runs every 12 hours, and the extra hour leaves time for each build and any retries to finish).

- Add the checks to their corresponding check group.
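The relationship between the 12-hour schedule and the 13-hour freshness threshold can be checked with a small Python sketch. It assumes the By hour schedule fires at 00:15 and 12:15 (the same times as the cron expression `15 */12 * * *`) and uses naive local datetimes with no time zone handling. `next_run`, `is_fresh`, and the constants are hypothetical helpers that only mimic the scheduler and the Time Since Last Updated check; they are not Foundry APIs.

```python
from datetime import datetime, timedelta

# Hypothetical constants mirroring the schedule and check configured above.
SCHEDULE_MINUTE = 15             # 15 minutes past the hour
SCHEDULE_HOURS = (0, 12)         # assumed: every 12 hours, starting at midnight
MAX_AGE = timedelta(hours=13)    # Time Since Last Updated threshold


def next_run(after: datetime) -> datetime:
    """Return the first scheduled run time strictly after `after`."""
    midnight = after.replace(hour=0, minute=0, second=0, microsecond=0)
    for day_offset in (0, 1):  # a run always falls within the next 24 hours
        for hour in SCHEDULE_HOURS:
            candidate = midnight + timedelta(days=day_offset, hours=hour, minutes=SCHEDULE_MINUTE)
            if candidate > after:
                return candidate
    raise AssertionError("unreachable: there are two runs per day")


def is_fresh(last_updated: datetime, now: datetime, max_age: timedelta = MAX_AGE) -> bool:
    """Mimic a freshness check: pass while the data is no older than `max_age`."""
    return now - last_updated <= max_age


run = datetime(2024, 1, 1, 0, 15)
print(next_run(run))                     # 2024-01-01 12:15:00
finished = run + timedelta(minutes=20)   # suppose this build finishes at 00:35
print(is_fresh(finished, datetime(2024, 1, 1, 13, 30)))  # True  (12h55m old)
print(is_fresh(finished, datetime(2024, 1, 1, 13, 40)))  # False (13h05m old)
```

In this sketch, if the 12:15 build fails or never runs, the check starts failing roughly an hour later. A threshold equal to the 12-hour interval would instead raise false alarms whenever a build finishes even a few minutes later than the previous one did.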
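The Advanced options chosen in these steps can be read as a simple policy: each job gets an initial attempt plus two retries, one minute apart, and if a job still fails, the rest of the build is aborted. The Python sketch below models that policy under stated assumptions: jobs run one at a time in a fixed order (a real build may run jobs in parallel), "retry twice" means three attempts in total, and `run_build`, `make_job`, and the job names are hypothetical, not Foundry APIs. The `sleep` parameter lets the example record delays instead of actually waiting.

```python
import time
from typing import Callable


def run_build(
    jobs: dict,
    retries: int = 2,
    retry_delay_s: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> dict:
    """Toy model: retry each job; if one still fails, abort the jobs not yet run."""
    status = {name: "pending" for name in jobs}
    for name, job in jobs.items():
        for attempt in range(retries + 1):  # 1 initial attempt + `retries` retries
            try:
                job()
                status[name] = "succeeded"
                break
            except Exception:
                if attempt < retries:
                    sleep(retry_delay_s)  # wait before the next retry
        else:
            # Every attempt failed: mark this job failed and abort the rest.
            status[name] = "failed"
            for other, state in status.items():
                if state == "pending":
                    status[other] = "aborted"
            break
    return status


def make_job(failures_before_success: int) -> Callable[[], None]:
    """Hypothetical job that fails a fixed number of times, then succeeds."""
    remaining = [failures_before_success]

    def job() -> None:
        if remaining[0] > 0:
            remaining[0] -= 1
            raise RuntimeError("transient failure")

    return job


delays = []
result = run_build(
    {
        "object_type_a_writeback": make_job(1),  # succeeds on the first retry
        "object_type_b_writeback": make_job(5),  # still failing after 3 attempts
        "link_type_writeback": make_job(0),      # never gets to run
    },
    sleep=delays.append,  # record delays instead of sleeping
)
print(result)
# {'object_type_a_writeback': 'succeeded', 'object_type_b_writeback': 'failed', 'link_type_writeback': 'aborted'}
print(delays)  # [60.0, 60.0, 60.0]
```

Aborting on failure keeps a partially built set of writeback datasets from looking complete; the freshness checks then flag the datasets that were not updated.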