
11 - Ontology Data Transforms

This content is also available at learn.palantir.com and is presented here for accessibility purposes.

📖 Task Introduction

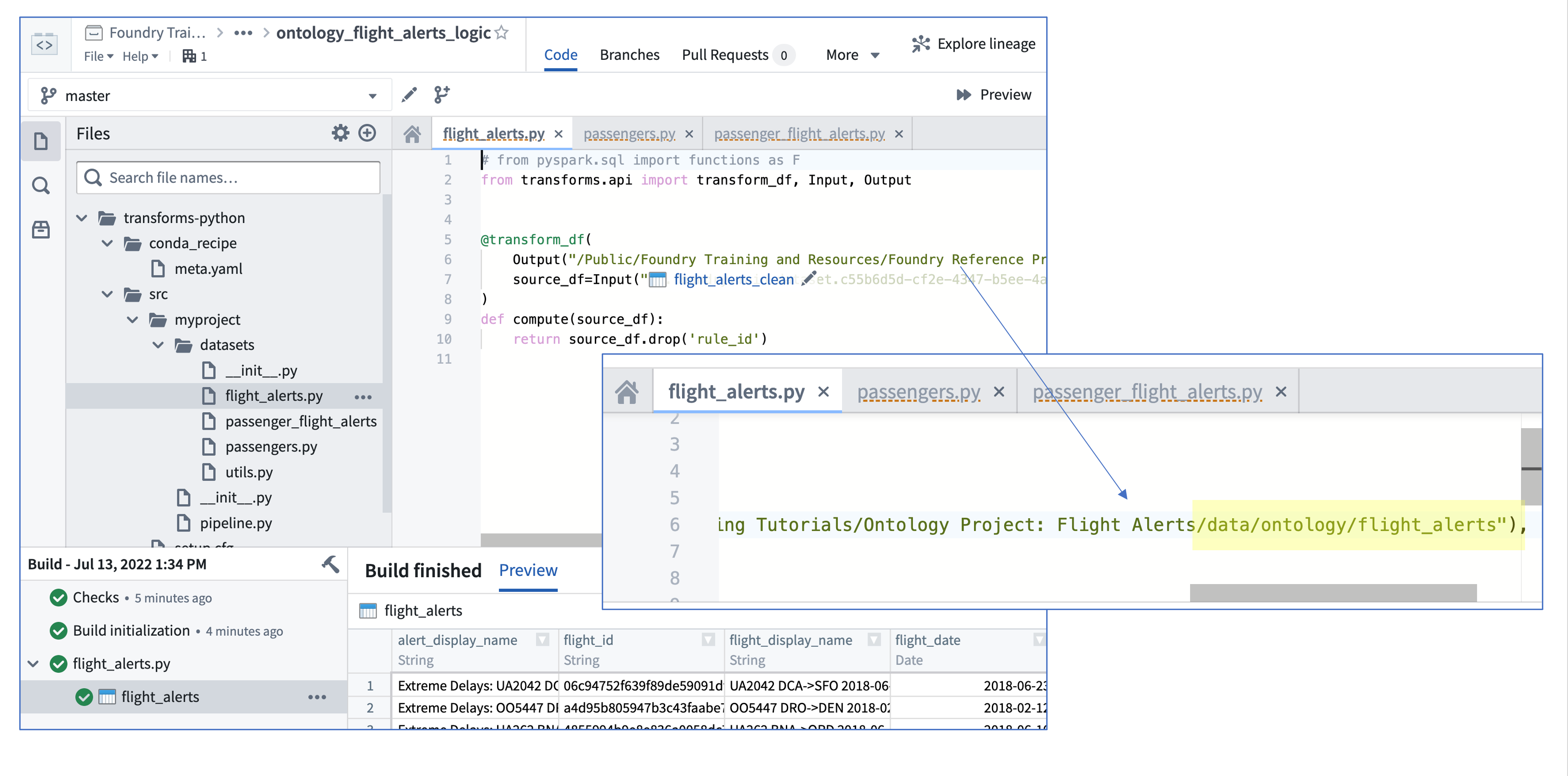

In your new repository, you'll be transforming three input datasets to prepare them to back Ontology object and link types.

- `flight_alerts_clean`: This will back our flight alert object type, but first we want to remove the `rule_id` column. It isn't needed in any anticipated workflow, and reducing the amount of data synchronized to the Ontology storage service also reduces computation load.
- `passengers_clean`: We determined that this dataset requires no updates at this point, so we'll pass it through as an identity transform.
- `passenger_flight_alerts_clean`: There is a many-to-many relationship between passengers and flight alerts. Just as many-to-many relationships in a relational database require a join table, many-to-many link types in the Ontology must be backed by one. This dataset, already part of our pipeline, serves that role, so we'll prepare it as well (we'll assume it needs no further transformation).
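Why a separate join table? One passenger can have many flight alerts and one alert can involve many passengers, so neither table can hold a single foreign-key column for the relationship. The plain-Python sketch below models the join table as (passenger, alert) pairs and indexes it in both directions, which is roughly the two-way traversal a many-to-many link offers. The `passenger_id`/`alert_id` key names, the sample IDs, and `build_links` are hypothetical illustrations, not Foundry or Ontology APIs.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical join-table rows: each (passenger_id, alert_id) pair records
# that one passenger is associated with one flight alert. IDs are made up.
JOIN_ROWS: List[Tuple[str, str]] = [
    ("P1", "A1"),
    ("P1", "A2"),
    ("P2", "A1"),
]


def build_links(
    rows: List[Tuple[str, str]],
) -> Tuple[Dict[str, Set[str]], Dict[str, Set[str]]]:
    """Index join-table rows in both directions (passenger -> alerts, alert -> passengers)."""
    alerts_by_passenger: Dict[str, Set[str]] = {}
    passengers_by_alert: Dict[str, Set[str]] = {}
    for passenger_id, alert_id in rows:
        # setdefault creates an empty set the first time a key is seen.
        alerts_by_passenger.setdefault(passenger_id, set()).add(alert_id)
        passengers_by_alert.setdefault(alert_id, set()).add(passenger_id)
    return alerts_by_passenger, passengers_by_alert


if __name__ == "__main__":
    alerts_by_passenger, passengers_by_alert = build_links(JOIN_ROWS)
    # P1 is linked to two alerts, and alert A1 is linked to two passengers:
    # neither side alone could store this with a single foreign-key column.
    print(sorted(alerts_by_passenger["P1"]))
    print(sorted(passengers_by_alert["A1"]))
```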

🔨 Task Instructions

1. In your code repository files, delete `/datasets/examples.py` by clicking the `...` next to the file name and choosing Delete from the menu.
2. Using the process you've learned in this training track, create a new file in `/data` called `flight_alerts.py`.
3. Configure the Input to be your `flight_alerts_clean` dataset. Your Foundry environment will likely contain many `flight_alerts_clean` datasets, so double-check that you've selected the one you created in your pipeline. Ensure that your Output location is `.../Ontology Project: Flight Alerts/data/ontology/...`, creating these subfolders if necessary (see image below).
4. Remove the `rule_id` column, for example by calling `.drop('rule_id')` on the returned dataframe.
5. Preview your change and commit your code with a meaningful message.
6. Create new transform files `passengers.py` and `passenger_flight_alerts.py` in `/datasets`.
7. For each, adjust the Output path per the instructions in Step 3 above.
8. Ensure that the input for `passengers.py` is set to your `passengers_clean` dataset and that `passenger_flight_alerts.py` uses your `passenger_flight_alerts_clean` dataset as input.
9. Recall that no data transformation is needed for either of these datasets, so each transform can simply return its input.
10. Preview and commit your code.
11. Build each transform file by clicking its Build button. Builds are per file, so you'll need to click Build once in each transform file you open.
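In the repository, these transforms operate on dataframes: `flight_alerts.py` returns its input with `.drop('rule_id')` applied, and the other two files return their inputs unchanged. To show that logic outside Foundry, here is a minimal plain-Python sketch that models each dataset as a list of row dictionaries. This modeling, the function names, and every column other than `rule_id` are assumptions for illustration; it is not the Foundry transforms API.

```python
from typing import Any, Dict, List

# Assumption: a dataset is modeled as a list of rows, each row a dict
# mapping column name -> value. This stands in for a dataframe.
Row = Dict[str, Any]


def flight_alerts(rows: List[Row]) -> List[Row]:
    """Mirror of flight_alerts.py: drop the rule_id column from every row."""
    return [{col: val for col, val in row.items() if col != "rule_id"} for row in rows]


def passengers(rows: List[Row]) -> List[Row]:
    """Mirror of passengers.py: identity transform, input passes through unchanged."""
    return rows


def passenger_flight_alerts(rows: List[Row]) -> List[Row]:
    """Mirror of passenger_flight_alerts.py: identity transform for the join table."""
    return rows


if __name__ == "__main__":
    # Hypothetical sample rows; only rule_id comes from the tutorial.
    alerts_in = [
        {"alert_id": "A1", "rule_id": "R7", "status": "open"},
        {"alert_id": "A2", "rule_id": "R3", "status": "closed"},
    ]
    print(flight_alerts(alerts_in))
```

The identity functions return the very object they receive rather than a copy, which matches the tutorial's "simply return the inputs": nothing about those datasets changes on the way to the Ontology folder.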