Create a model adapter

There are two primary methods for creating a model adapter. The first is through a standalone Model Adapter Library template, while the second integrates Python transforms and training code into a single repository alongside the custom adapter code.

The Model Adapter Library template should be used for the following:

- Container-backed models

- External models

- Creating a model adapter that can be shared across repositories

The Model Training template should be used for most models that are created in Foundry through Python transforms. By integrating the model adapter definition into the transforms repository, you can iterate on the adapter and training logic quicker than with the Model Adapter Library template.

Below is a comparison of the two templates, along with guidance on when to use each:

| Model Training | Model Adapter Library | |

|---|---|---|

| Supported model types | Python transforms | Python transforms, Container, External |

| Limitations | Hard to re-use across repositories | Slower iteration cycles |

Model Adapter Library template

To create a container, external, or re-usable Python transforms model adapter, open the Code Repositories application and choose Model Adapter Library.

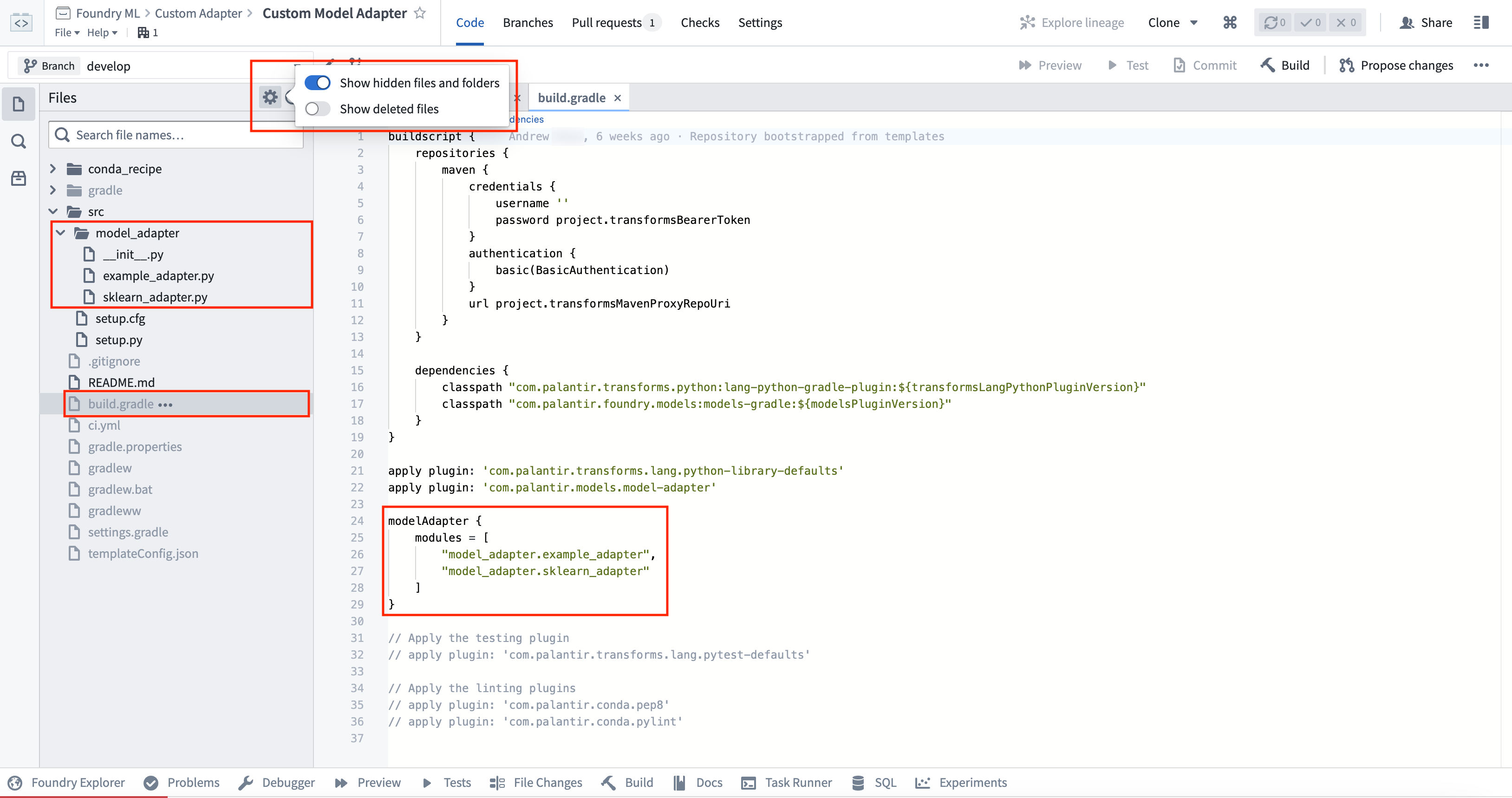

The Model Adapter Library contains some example implementation in the src/model_adapter/ directory. Model adapters produced using this template have a configurable package name, initially derived from the name of the repository. You can view and modify the condaPackageName value in the hidden gradle.properties file (note that spaces and other special characters will be replaced with - in the published library).

As with other Python library repositories, tagging commits will publish a new version of the library. These published libraries can be imported into other Python transforms repositories for both model training and inference.

A repository can publish multiple model adapters. Any additional adapter implementation files must be added as a reference to the list of model adapter modules within the build.gradle hidden file of the adapter template. By default, only the model_adapter.example_adapter will be published.

The implementation of the adapter depends on the type of model being produced. Learn more in the external model adapters documentation, or review the example container model adapter.

Model Training template

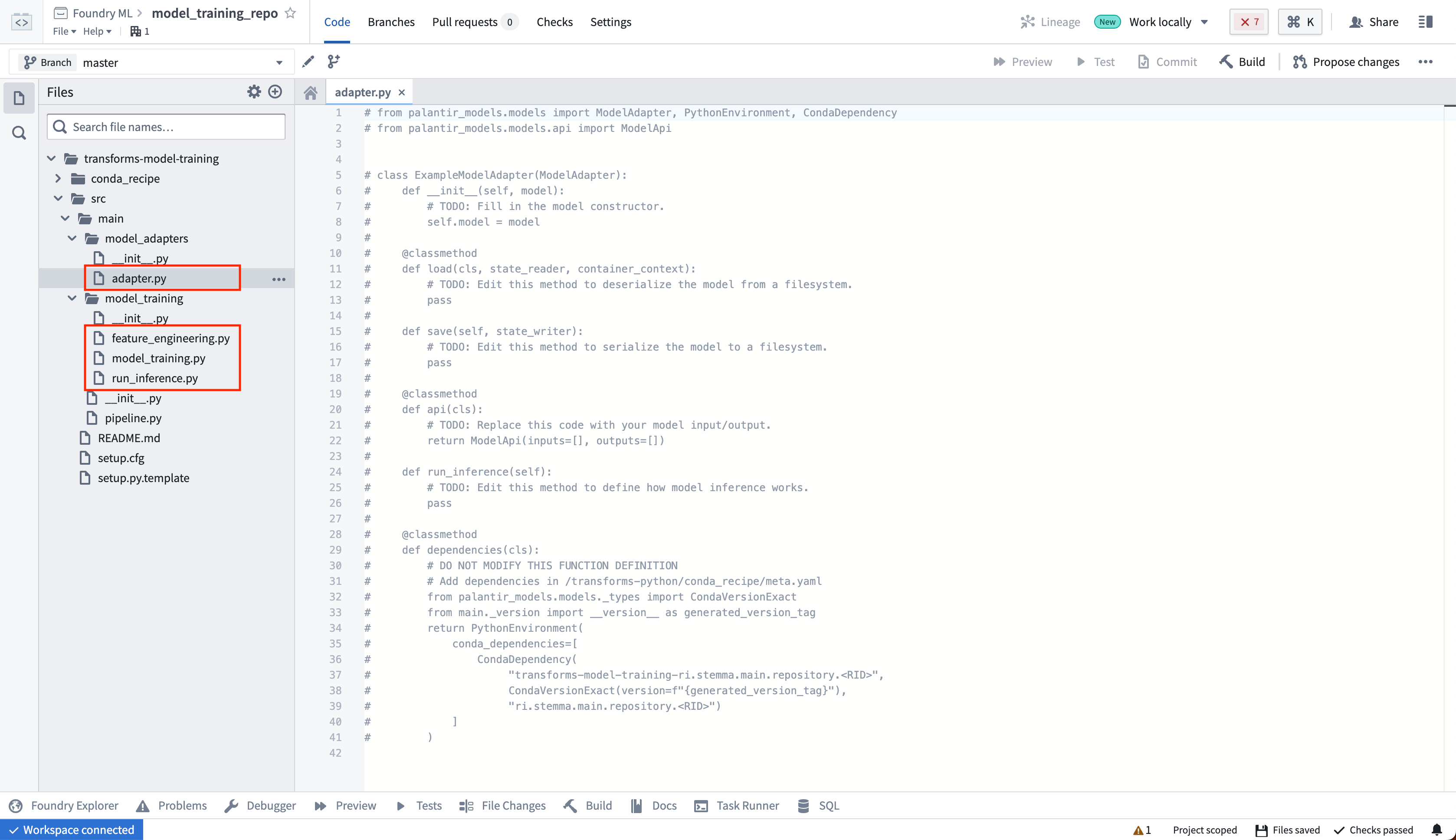

To create a model directly in Foundry, open the Code Repositories application and choose the Model Training template. This repository allows you to author Python data transforms to train and run inference on models.

Model adapters produced using this template cannot have a custom package name or be tagged. The adapter will be published in a library called transforms-model-training-<repository rid>, and the version will be derived from the Git commit. The full name and version of the package can be viewed on the Palantir model page. This library must be added to downstream repositories to be able to load the model as a ModelInput. If this is not suitable for your use case, the Model Adapter Library template allows you to configure both the version and package name.

To learn more, read the documentation on training models in Foundry with the training template.