Example: Integrate an OpenAI model

This page provides an example configuration and model adapter for a custom connection to an OpenAI model. Review the benefits of external model integration to make sure this approach fits your use case.

For a step-by-step guide, refer to our documentation on how to create a model adapter and how to create a connection to an externally hosted model.

Example OpenAI model adapter

To use this example in Foundry, publish and tag a model adapter using the model adapter library in the Code Repositories application.

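This model adapter configures a connection to our Azure-hosted instance of OpenAI. It was tested with Python 3.8.17 and pandas 1.5.3. The adapter uses the module-level interface of the `openai` package (`openai.ChatCompletion` and the `openai.error` exceptions), which was removed in `openai` 1.0.0, so it requires an `openai` release earlier than 1.0. The following five inputs are required to configure the `openai` module: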

- `base_url` - Provided as `base_url`

- `api_type` - Provided in connection configuration

- `api_version` - Provided in connection configuration

- `engine` - Provided in connection configuration

- `api_key` - Provided as `resolved_credentials`

```python
import logging

import openai
import palantir_models as pm
import models_api.models_api_executable as executable_api

logger = logging.getLogger(__name__)


class OpenAIModelAdapter(pm.ExternalModelAdapter):

    def __init__(self, base_url, api_type, api_version, engine, api_key):
        # Engine used for chat completions (for Azure, the deployment name)
        self.engine = engine
        # Configure the module-level settings of openai<1.0.
        # These are process-wide globals shared by every caller of openai.
        openai.api_type = api_type
        openai.api_key = api_key
        openai.api_base = base_url
        openai.api_version = api_version

    @classmethod
    def init_external(cls, external_context) -> "pm.ExternalModelAdapter":
        # The URL, connection configuration, and resolved credentials come
        # from the externally hosted model configured in Foundry
        base_url = external_context.base_url
        api_type = external_context.connection_config["api_type"]
        api_version = external_context.connection_config["api_version"]
        engine = external_context.connection_config["engine"]
        api_key = external_context.resolved_credentials["api_key"]
        return cls(
            base_url,
            api_type,
            api_version,
            engine,
            api_key,
        )

    @classmethod
    def api(cls):
        # Input: a DataFrame of prompts; output: the prompts plus predictions
        inputs = {"df_in": pm.Pandas(columns=[("prompt", str)])}
        outputs = {"df_out": pm.Pandas(columns=[("prompt", str), ("prediction", str)])}
        return inputs, outputs

    def predict(self, df_in):
        predictions = []
        # Send one single-message chat completion request per input row
        for _, row in df_in.iterrows():
            messages = [{"role": "user", "content": row["prompt"]}]
            # Log each openai.error failure type with context, then re-raise it
            try:
                response = openai.ChatCompletion.create(
                    engine=self.engine,
                    messages=messages,
                )
            except openai.error.Timeout as e:
                logger.error(f"OpenAI API request timed out: {e}")
                raise
            except openai.error.APIError as e:
                logger.error(f"OpenAI API returned an API Error: {e}")
                raise
            except openai.error.APIConnectionError as e:
                logger.error(f"OpenAI API request failed to connect: {e}")
                raise
            except openai.error.InvalidRequestError as e:
                logger.error(f"OpenAI API request was invalid: {e}")
                raise
            except openai.error.AuthenticationError as e:
                logger.error(f"OpenAI API request was not authorized: {e}")
                raise
            except openai.error.PermissionError as e:
                logger.error(f"OpenAI API request was not permitted: {e}")
                raise
            except openai.error.RateLimitError as e:
                logger.error(f"OpenAI API request exceeded rate limit: {e}")
                raise
            predictions.append(response.choices[0].message.content)
        df_in["prediction"] = predictions
        return df_in
```
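To exercise the row-by-row logic of `predict` without network access or Foundry, the following sketch replaces the `openai.ChatCompletion.create` call with a caller-supplied function and uses a list of dicts in place of the pandas DataFrame. `predict_rows` and `fake_complete` are hypothetical names for illustration; neither is part of `palantir_models` or `openai`.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def predict_rows(
    rows: List[Dict[str, str]],
    complete: Callable[[List[Message]], str],
) -> List[Dict[str, str]]:
    """Mirror the adapter's predict loop: one single-message chat request per row."""
    results = []
    for row in rows:
        # Same message shape the adapter builds for each prompt
        messages = [{"role": "user", "content": row["prompt"]}]
        results.append({"prompt": row["prompt"], "prediction": complete(messages)})
    return results


def fake_complete(messages: List[Message]) -> str:
    # Hypothetical stub standing in for the model: upper-cases the prompt
    return messages[0]["content"].upper()


results = predict_rows([{"prompt": "hello"}, {"prompt": "bye"}], fake_complete)
```

Unlike the adapter, which adds a `prediction` column to `df_in` in place and returns it, this sketch builds new row dicts; the prompt-to-message mapping and the one-request-per-row order are the same.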

OpenAI model configuration

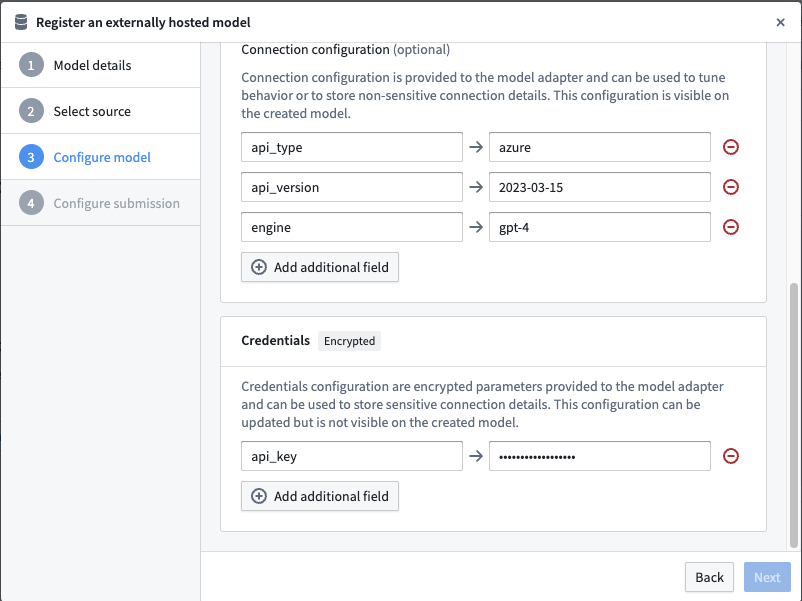

Next, configure an externally hosted model that uses this model adapter, and provide the configuration and credentials that the adapter expects.

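The URL, connection configuration, and credentials must match what the model adapter reads: the URL is passed to the adapter as `base_url`, and the connection configuration and credentials maps must use the same keys the adapter looks up (`api_type`, `api_version`, `engine`, and `api_key`).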

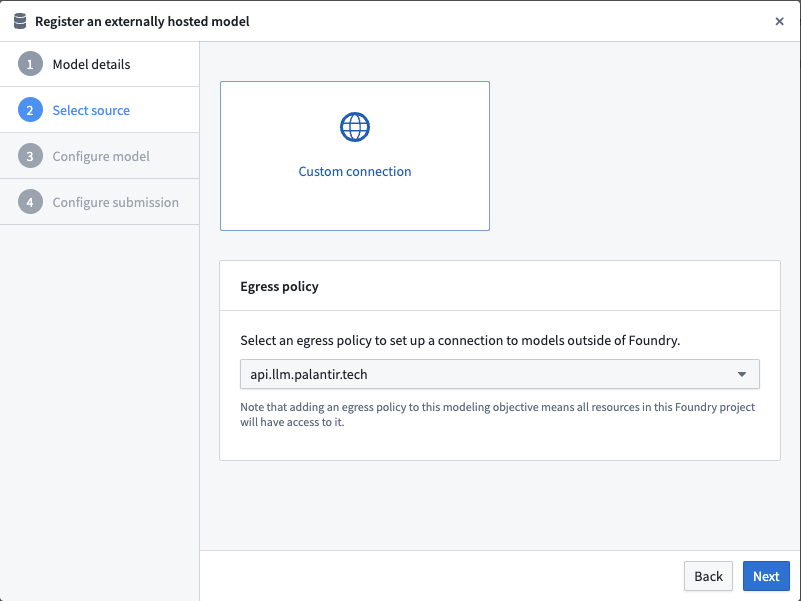
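Because `init_external` indexes the connection configuration and credentials maps directly, a missing or misspelled key surfaces as a `KeyError` when the adapter loads. The following sketch models only the three `external_context` attributes the adapter reads, reports any required keys that are absent, and extracts the five inputs in constructor order. `FakeExternalContext`, `missing_keys`, and `extract_inputs` are hypothetical names, and the configuration values (including `azure` as the `api_type`) are placeholders rather than the values used in this example.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass
class FakeExternalContext:
    """Hypothetical stand-in for the external_context passed to init_external."""
    base_url: str
    connection_config: Dict[str, str] = field(default_factory=dict)
    resolved_credentials: Dict[str, str] = field(default_factory=dict)


# Keys the example adapter reads in init_external
REQUIRED_CONFIG_KEYS = ("api_type", "api_version", "engine")
REQUIRED_CREDENTIAL_KEYS = ("api_key",)


def missing_keys(ctx: FakeExternalContext) -> Dict[str, Tuple[str, ...]]:
    """Map each configuration map to the required keys it lacks (empty if complete)."""
    gaps = {
        "connection_config": tuple(
            k for k in REQUIRED_CONFIG_KEYS if k not in ctx.connection_config
        ),
        "resolved_credentials": tuple(
            k for k in REQUIRED_CREDENTIAL_KEYS if k not in ctx.resolved_credentials
        ),
    }
    # Keep only the maps that are missing something
    return {name: keys for name, keys in gaps.items() if keys}


def extract_inputs(ctx: FakeExternalContext) -> Tuple[str, str, str, str, str]:
    """Return the five adapter inputs in constructor order, as init_external does."""
    return (
        ctx.base_url,
        ctx.connection_config["api_type"],
        ctx.connection_config["api_version"],
        ctx.connection_config["engine"],
        ctx.resolved_credentials["api_key"],
    )


# Placeholder values for illustration only
ctx = FakeExternalContext(
    base_url="https://api.llm.palantir.tech/preview",
    connection_config={"api_type": "azure", "api_version": "<api-version>", "engine": "<deployment-name>"},
    resolved_credentials={"api_key": "<secret>"},
)
print(missing_keys(ctx))  # → {}
```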

Select an egress policy

This example uses an egress policy configured for api.llm.palantir.tech on port 443.

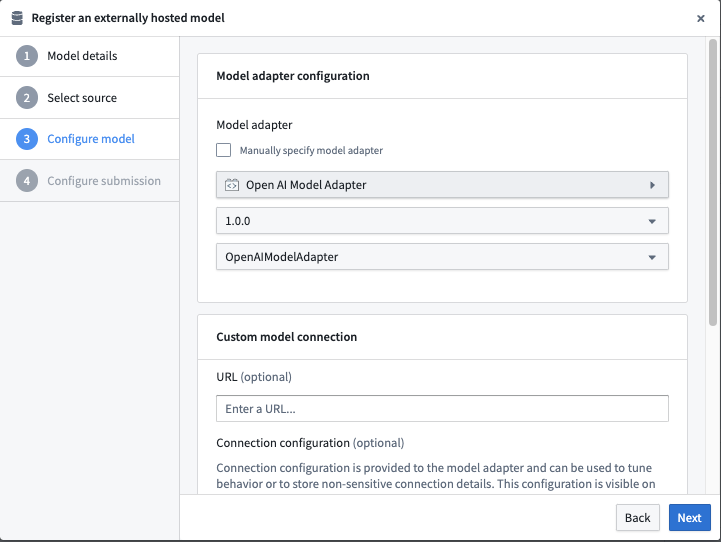

Configure model adapter

Choose the published model adapter in the Connect an externally hosted model dialog.

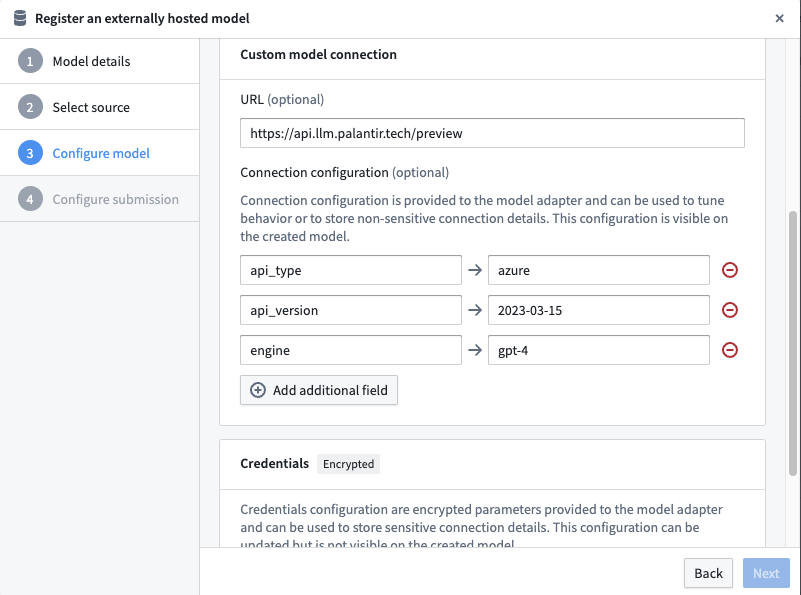

Configure URL and connection configuration

Define the connection configuration required by the example OpenAI model adapter.

This adapter requires the following URL: https://api.llm.palantir.tech/preview
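Foundry enforces the egress policy itself. As an illustrative local check only, the following sketch confirms that the adapter URL targets the host and port allowed by the egress policy in this example (api.llm.palantir.tech, port 443). `matches_egress` is a hypothetical helper, and it assumes the scheme's default port (443 for `https`) when the URL omits one.

```python
from typing import Optional
from urllib.parse import urlsplit

# Default ports for schemes whose URLs may omit an explicit port
DEFAULT_PORTS = {"https": 443, "http": 80}


def matches_egress(url: str, allowed_host: str, allowed_port: int) -> bool:
    """Return True if url targets allowed_host on allowed_port."""
    parts = urlsplit(url)
    port: Optional[int] = parts.port if parts.port is not None else DEFAULT_PORTS.get(parts.scheme)
    return parts.hostname == allowed_host and port == allowed_port


print(matches_egress("https://api.llm.palantir.tech/preview", "api.llm.palantir.tech", 443))
```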

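This adapter requires the following connection configuration keys:

- `api_type` - The API type passed to the `openai` library, for example `azure` for an Azure-hosted endpoint.

- `api_version` - The API version to use. For Azure endpoints, it is sent as the `api-version` query parameter.

- `engine` - The model engine to run inference against. For Azure endpoints, this is the deployment name.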
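To show how the three connection configuration keys are used together, the following sketch approximates the chat completions URL that the pre-1.0 `openai` package builds when `api_type` is `azure`: the deployment name goes into the path and the API version into the query string. The function name, the deployment name, and the API version shown are hypothetical placeholders, and whether this endpoint expects `azure` as its `api_type` is an assumption.

```python
from urllib.parse import quote_plus

# Placeholder connection configuration; substitute the values for your endpoint.
connection_config = {
    "api_type": "azure",           # assumption: Azure-style endpoint
    "api_version": "2023-05-15",   # example value; use a version your endpoint supports
    "engine": "my-deployment",     # hypothetical deployment name
}


def azure_chat_completions_url(base_url: str, config: dict) -> str:
    """Approximate the chat completions URL openai<1.0 builds for api_type 'azure'."""
    # The deployment name (engine) is URL-encoded and placed in the path
    deployment = quote_plus(config["engine"])
    # The API version is sent as the api-version query parameter
    return (
        f"{base_url}/openai/deployments/{deployment}/chat/completions"
        f"?api-version={config['api_version']}"
    )


print(azure_chat_completions_url("https://api.llm.palantir.tech/preview", connection_config))
```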

Configure credential configuration

Define the credential configuration required by the example OpenAI model adapter.

This adapter requires the following credential key:

- `api_key` - The secret API key used to authenticate requests to the OpenAI endpoint.

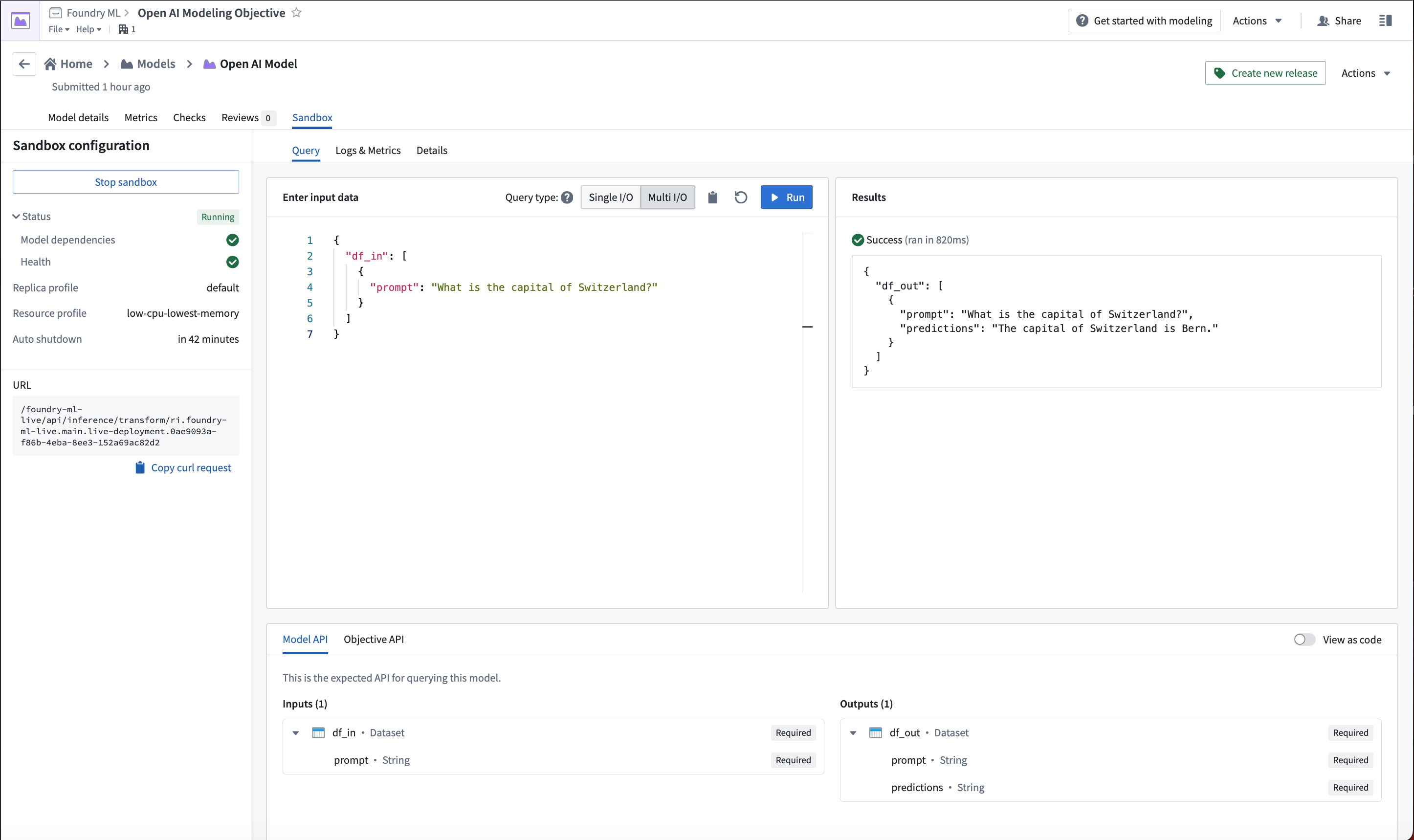
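Because `api_key` is a secret, it should not be written to logs. As a hypothetical debugging aid that is not part of the adapter above, the following sketch reports which credential keys were resolved without revealing their values. `describe_credentials` is an illustrative name only.

```python
from typing import Dict


def describe_credentials(creds: Dict[str, str]) -> Dict[str, str]:
    """Return the credential keys with values masked, so the result is safe to log."""
    # Report only whether each value is non-empty; never include the value itself
    return {key: ("<set>" if value else "<empty>") for key, value in creds.items()}


print(describe_credentials({"api_key": "not-a-real-key"}))
```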

OpenAI model usage

Now that the OpenAI model is configured, you can use it in a live deployment or a Python transform.

The following image shows an example query made to the OpenAI model in a live deployment.