Review model metrics in the evaluation dashboard

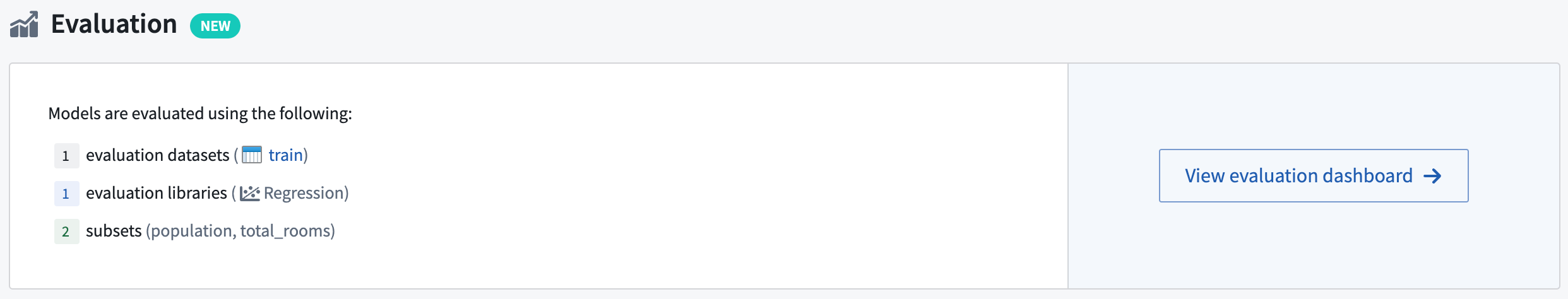

Model evaluation is critically important for operational modeling projects. Once you have generated metrics for the models submitted to your modeling objective, either automatically or in code, you can compare those models against each other in the evaluation dashboard. To open it from the Modeling Objective home page, select View evaluation dashboard.

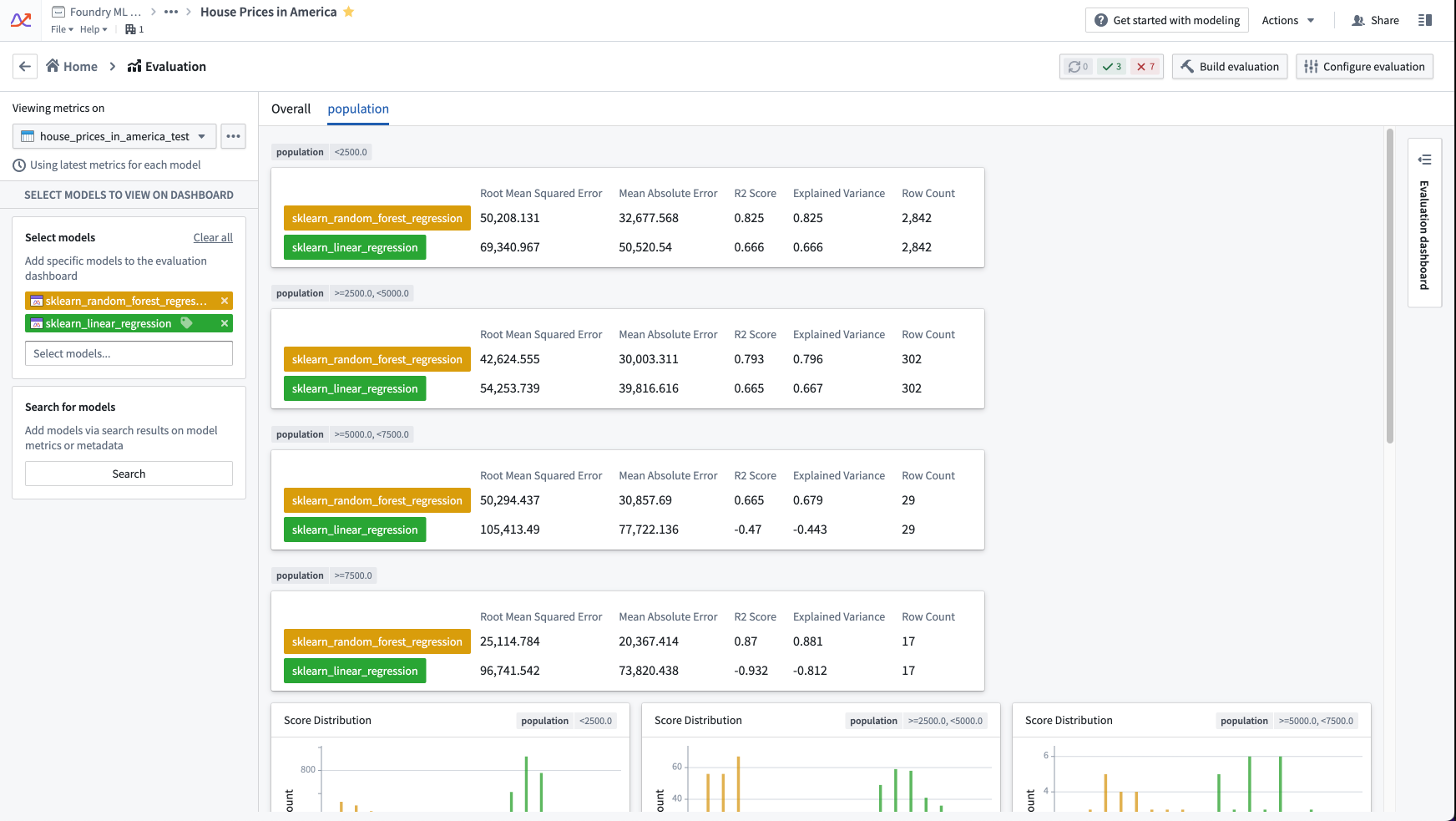

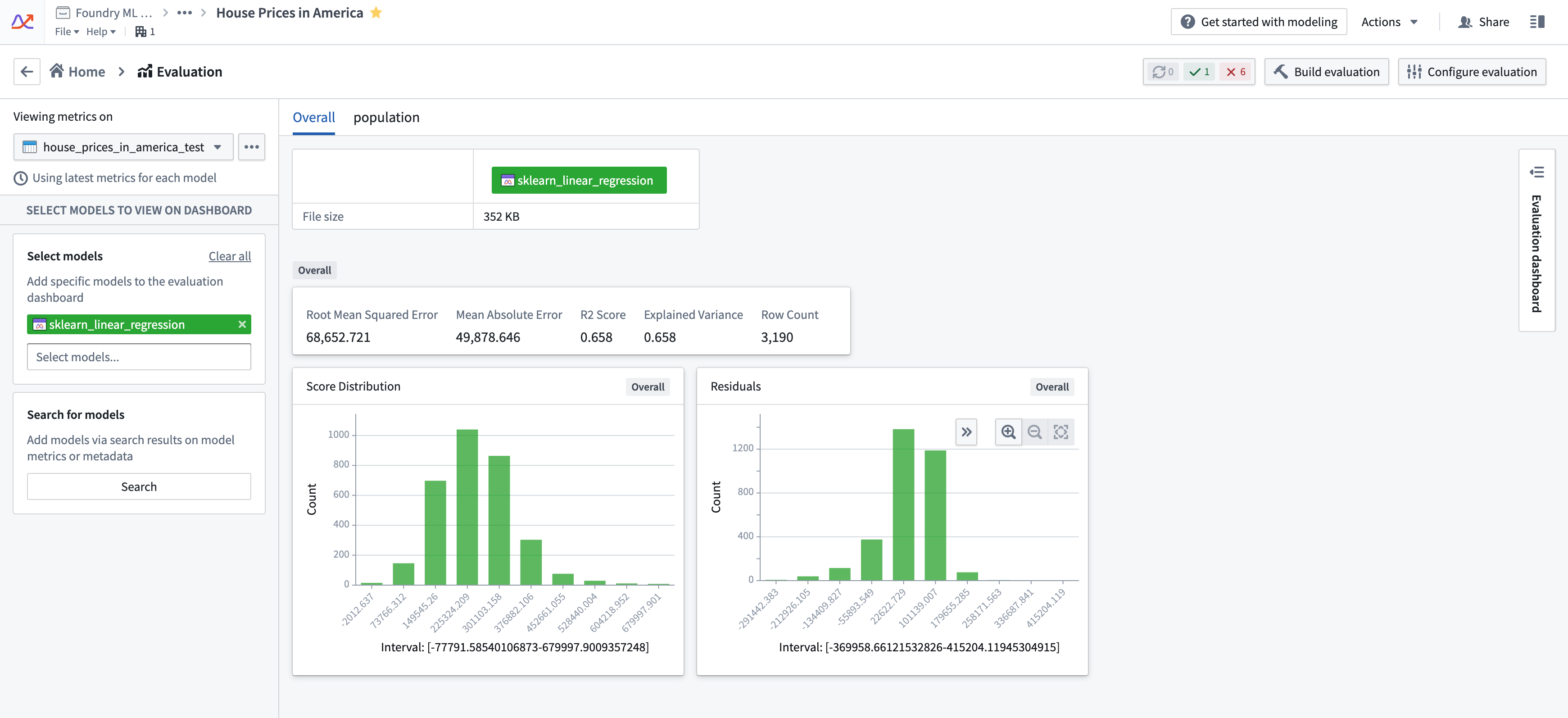

Evaluation dashboard

The evaluation dashboard provides a standard, centralized place to evaluate model metrics and performance. All metrics associated with any model submission in a modeling objective are available for review there.

To keep model metrics standardized, the evaluation dashboard displays by default only the metrics generated by the evaluation configuration. If you instead evaluate models manually in code, disable Only show metrics produced by evaluation configuration in the modeling objective settings so that those metrics appear.
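As a rough mental model of that default, the sketch below treats each metric as a record tagged with whether it came from the evaluation configuration, and filters on that flag. `Metric`, `from_evaluation_configuration`, and `visible_metrics` are hypothetical names used only for illustration; they are not part of any Foundry API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    # Hypothetical record shape, for illustration only.
    model_submission: str
    name: str
    value: float
    from_evaluation_configuration: bool  # False for metrics computed manually in code


def visible_metrics(metrics, only_show_configured=True):
    """Model of the assumed effect of the "Only show metrics produced by
    evaluation configuration" setting: when enabled (the default), drop
    metrics that did not come from the evaluation configuration."""
    if only_show_configured:
        return [m for m in metrics if m.from_evaluation_configuration]
    return list(metrics)


metrics = [
    Metric("model-a", "accuracy", 0.91, from_evaluation_configuration=True),
    Metric("model-a", "custom_lift", 1.4, from_evaluation_configuration=False),
]
print([m.name for m in visible_metrics(metrics)])
# ['accuracy']
print([m.name for m in visible_metrics(metrics, only_show_configured=False)])
# ['accuracy', 'custom_lift']
```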

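In the evaluation dashboard, the metrics shown are the intersection of the selected evaluation dataset and the selected model submissions: a metric appears only if it was computed on the selected evaluation dataset for one of the selected models.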
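The intersection rule can be expressed as a simple filter. This is a minimal sketch, assuming each metric is a record that names the model submission and evaluation dataset it was computed for; the `MetricRecord` type and `dashboard_metrics` function are hypothetical and only model the behavior described above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricRecord:
    # Hypothetical record shape, for illustration only.
    model_submission: str
    evaluation_dataset: str
    name: str
    value: float


def dashboard_metrics(records, selected_dataset, selected_models):
    """Keep metrics computed on the selected evaluation dataset
    for one of the selected model submissions."""
    models = set(selected_models)
    return [
        r
        for r in records
        if r.evaluation_dataset == selected_dataset and r.model_submission in models
    ]


records = [
    MetricRecord("model-a", "holdout", "accuracy", 0.91),
    MetricRecord("model-b", "holdout", "accuracy", 0.88),   # model not selected
    MetricRecord("model-a", "backtest", "accuracy", 0.85),  # different dataset
]
shown = dashboard_metrics(records, "holdout", ["model-a"])
print([(r.model_submission, r.evaluation_dataset) for r in shown])
# [('model-a', 'holdout')]
```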

Select an input dataset

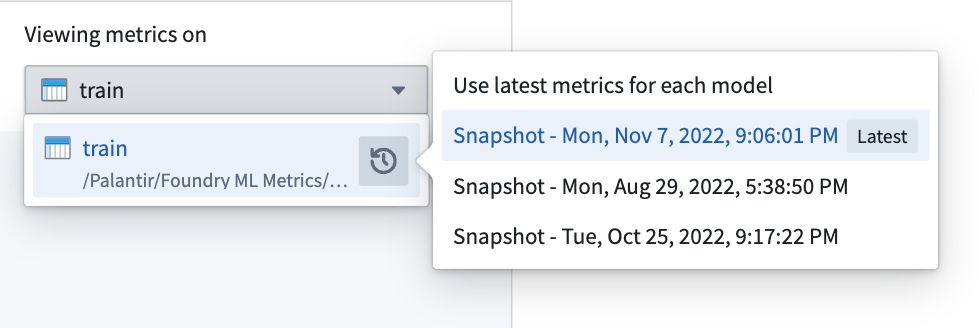

To select an input dataset, use the evaluation dataset dropdown at the top left of the evaluation dashboard. The selector lets you choose either an entire evaluation dataset or a specific transaction of one.

If you do not choose a specific transaction, you will see the most recent metric results for every selected model submission. If you do select a specific transaction, you will see only the metrics that were built using that transaction of the evaluation dataset as input.

Comparing models on a single, specific transaction of the evaluation dataset is the most statistically sound approach, because every model is then scored on identical data. However, comparing across transactions can be useful when you want to compare performance against model submissions whose model schema has changed.
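The two selector modes can be sketched as follows, assuming each metric result records the transaction it was built from and a build order. `MetricResult`, `built_at`, and `select_results` are hypothetical names chosen to model the behavior described above, not a real API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class MetricResult:
    # Hypothetical record shape, for illustration only.
    model_submission: str
    transaction: str  # evaluation dataset transaction used as input
    built_at: int     # build order; higher means more recent (assumed)
    name: str
    value: float


def select_results(results, transaction: Optional[str] = None):
    """No transaction: most recent result per (model submission, metric).
    Specific transaction: only results built from that transaction."""
    if transaction is not None:
        return [r for r in results if r.transaction == transaction]
    latest = {}
    for r in results:
        key = (r.model_submission, r.name)
        if key not in latest or r.built_at > latest[key].built_at:
            latest[key] = r
    return list(latest.values())


results = [
    MetricResult("model-a", "txn-1", 1, "accuracy", 0.88),
    MetricResult("model-a", "txn-2", 3, "accuracy", 0.90),
    MetricResult("model-b", "txn-1", 2, "accuracy", 0.86),
]
print(sorted((r.model_submission, r.transaction) for r in select_results(results)))
# [('model-a', 'txn-2'), ('model-b', 'txn-1')]
print(sorted(r.model_submission for r in select_results(results, "txn-1")))
# ['model-a', 'model-b']
```

In the default view, model-a's metric comes from txn-2 while model-b's comes from txn-1, so the two models are scored on different data. Selecting txn-1 puts both on the same input, which is why the single-transaction comparison is the statistically sounder one.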

Select models for evaluation

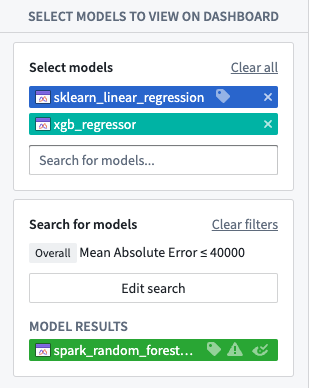

Next, select the models whose metrics you want to view. The models displayed on the evaluation dashboard are the union of the models chosen in Select models and those returned by Search for models.
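The union behavior amounts to merging two lists of submissions and dropping duplicates. This is a minimal sketch with `displayed_models` as a hypothetical helper and plain strings standing in for model submissions; whether the dashboard preserves this particular ordering is an assumption.

```python
def displayed_models(selected, search_results):
    """Union of explicitly selected submissions and search matches,
    keeping first-seen order and dropping duplicates."""
    # dict keys preserve insertion order (Python 3.7+), so this dedupes in order.
    return list(dict.fromkeys([*selected, *search_results]))


print(displayed_models(["model-a", "model-b"], ["model-b", "model-c"]))
# ['model-a', 'model-b', 'model-c']
```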

Select models

The first way to add model submissions to the evaluation dashboard is to select individual model submissions. The Select models dropdown allows you to choose one or more specific model submissions to add to the evaluation dashboard.

Search for models

If you have a large number of model submissions, it can be useful to add models that meet certain search criteria. The model submission search results will be added to the evaluation dashboard in addition to the models selected in Select models.

Model submission search supports searching for model submissions in several ways:

- Matching metrics criteria on all data in the evaluation dataset ("Overall")

- Matching metrics criteria on a defined subset of the evaluation dataset

- By a specific model owner or model submitter

- By model metadata
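The search options above can be modeled as composable predicates over model submissions. This sketch assumes a submission carries an owner, a submitter, a metadata map, and per-subset metric values; `ModelSubmission`, `metric_at_least`, `by_person`, `by_metadata`, `search`, and the subset name `region=EU` are all hypothetical. Combining criteria with AND is also an assumption about how the real search behaves.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class ModelSubmission:
    # Hypothetical shape; field names are illustrative assumptions.
    name: str
    owner: str
    submitter: str
    metadata: Dict[str, str] = field(default_factory=dict)
    # metrics[subset][metric_name] -> value; "Overall" covers the whole evaluation dataset
    metrics: Dict[str, Dict[str, float]] = field(default_factory=dict)


def metric_at_least(metric, threshold, subset="Overall"):
    """Criterion: the metric on the given subset is at least `threshold`."""
    def check(m):
        value = m.metrics.get(subset, {}).get(metric)
        return value is not None and value >= threshold
    return check


def by_person(person):
    """Criterion: `person` is the model owner or the model submitter."""
    return lambda m: person in (m.owner, m.submitter)


def by_metadata(key, value):
    """Criterion: the submission's metadata has `key` set to `value`."""
    return lambda m: m.metadata.get(key) == value


def search(models, *criteria):
    """Names of submissions matching every criterion (AND semantics assumed)."""
    return [m.name for m in models if all(c(m) for c in criteria)]


models = [
    ModelSubmission("model-a", "alice", "bob", {"algorithm": "xgboost"},
                    {"Overall": {"auc": 0.91}, "region=EU": {"auc": 0.84}}),
    ModelSubmission("model-b", "carol", "carol", {"algorithm": "logreg"},
                    {"Overall": {"auc": 0.87}, "region=EU": {"auc": 0.89}}),
]
print(search(models, metric_at_least("auc", 0.9)))                       # ['model-a']
print(search(models, metric_at_least("auc", 0.85, subset="region=EU")))  # ['model-b']
print(search(models, by_person("bob")))                                  # ['model-a']
print(search(models, by_metadata("algorithm", "logreg")))                # ['model-b']
```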

Evaluation dashboard layout

The evaluation dashboard separates model submission metrics into tabs. The tabs displayed combine the subsets configured in the evaluation configuration with, if enabled in the modeling objective settings, the custom pinned metrics views.
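The tab composition can be summarized in a few lines. This is a sketch under stated assumptions: `dashboard_tabs` is a hypothetical helper, the subset and view names are invented examples, and placing pinned views after the subsets is an assumed ordering.

```python
def dashboard_tabs(subsets, pinned_views, pinned_views_enabled):
    """Return tab names: one per configured subset, followed by the custom
    pinned metrics views when the modeling objective setting enables them.
    The ordering here is an assumption."""
    tabs = list(subsets)
    if pinned_views_enabled:
        tabs.extend(pinned_views)
    return tabs


subsets = ["region=EU", "region=US"]  # hypothetical subsets from the evaluation configuration
pinned = ["Executive summary"]        # hypothetical pinned metrics view
print(dashboard_tabs(subsets, pinned, True))   # ['region=EU', 'region=US', 'Executive summary']
print(dashboard_tabs(subsets, pinned, False))  # ['region=EU', 'region=US']
```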