Announcements

Introducing Linter: Available mid-September 2023 [Beta]

Date published: 2023-08-30

This feature is now generally available. Read the latest announcement.

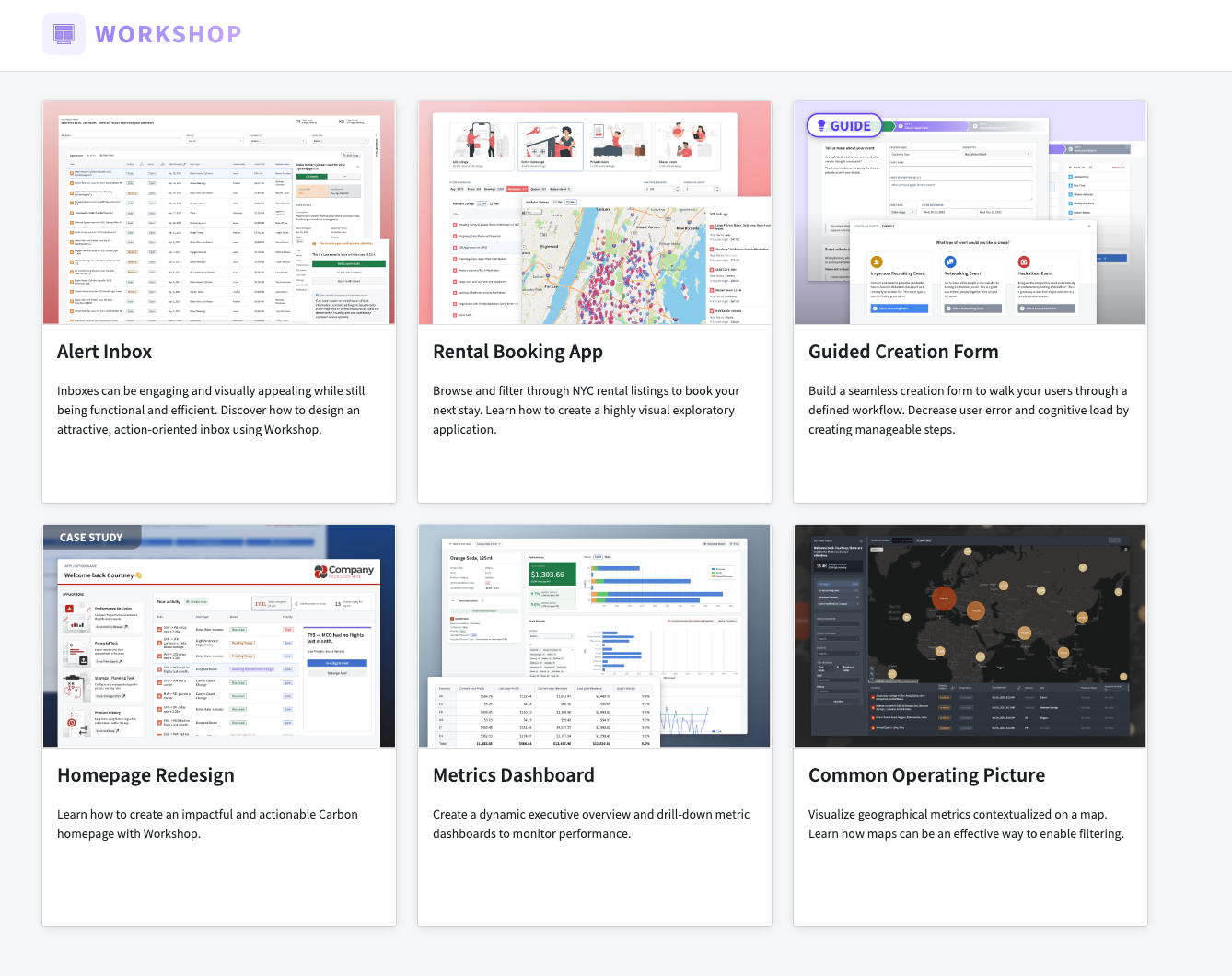

Introducing the Workshop Design Hub, a Marketplace product

Date published: 2023-08-24

The Workshop Design Hub, a set of modules designed to help inspire you in developing beautiful low-code applications, is now available. These high-quality Workshop modules are end-to-end examples that employ notional data to showcase a variety of application types and use case needs highlighting the versatility of Workshop. You can use these modules to explore Workshop functionality, reverse-engineer for your own needs, or level up your application building skills to create a tailored application from scratch.

Install and Access the Workshop Design Hub

The Workshop Design Hub is a Marketplace product. Foundry enrollment administrators must first install the Workshop Design Hub via Marketplace and make it accessible to all enrollment users. Once installed, the modules can be found in a project named "Workshop Design Hub".

For guidance on installing Workshop Design Hub, administrators can visit the Marketplace documentation.

To learn more about the Workshop Design Hub and the modules available, review its documentation.

Introducing the Retention Policies application: Available September 2023 [GA]

Date published: 2023-08-22

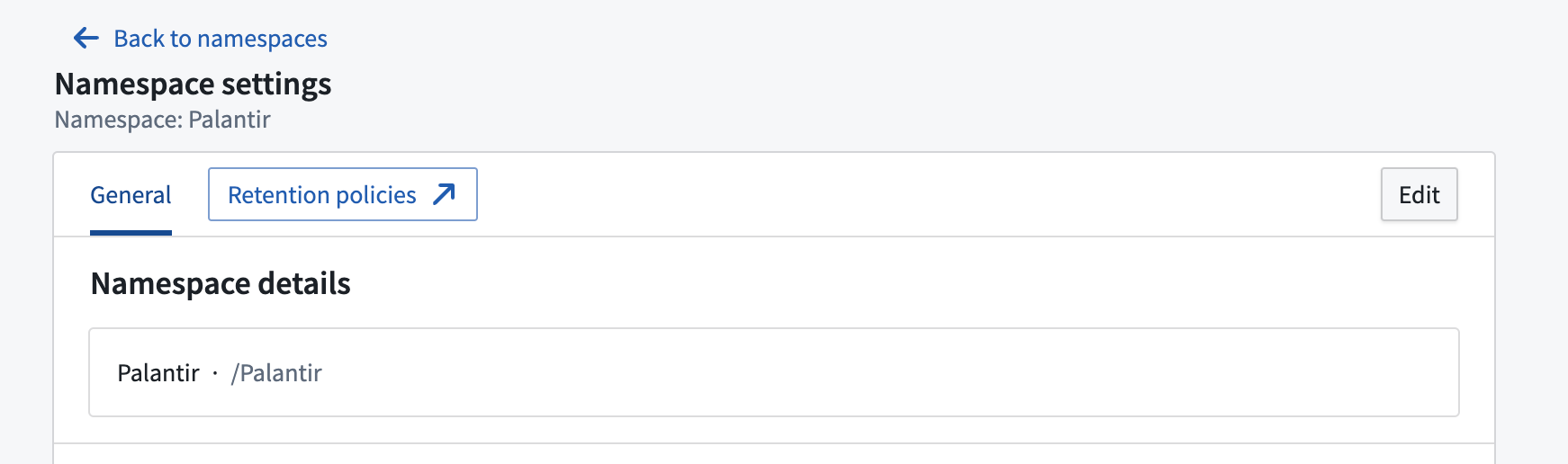

The Retention Policies application will be GA and available on all stacks by September 2023. The Retention Policies application enables namespace owners to define and manage both static retention policies and data lifetime policies. The new application replaces the use of the legacy YAML-based repository.

The Namespace settings page with a link to the Retention Policies application.

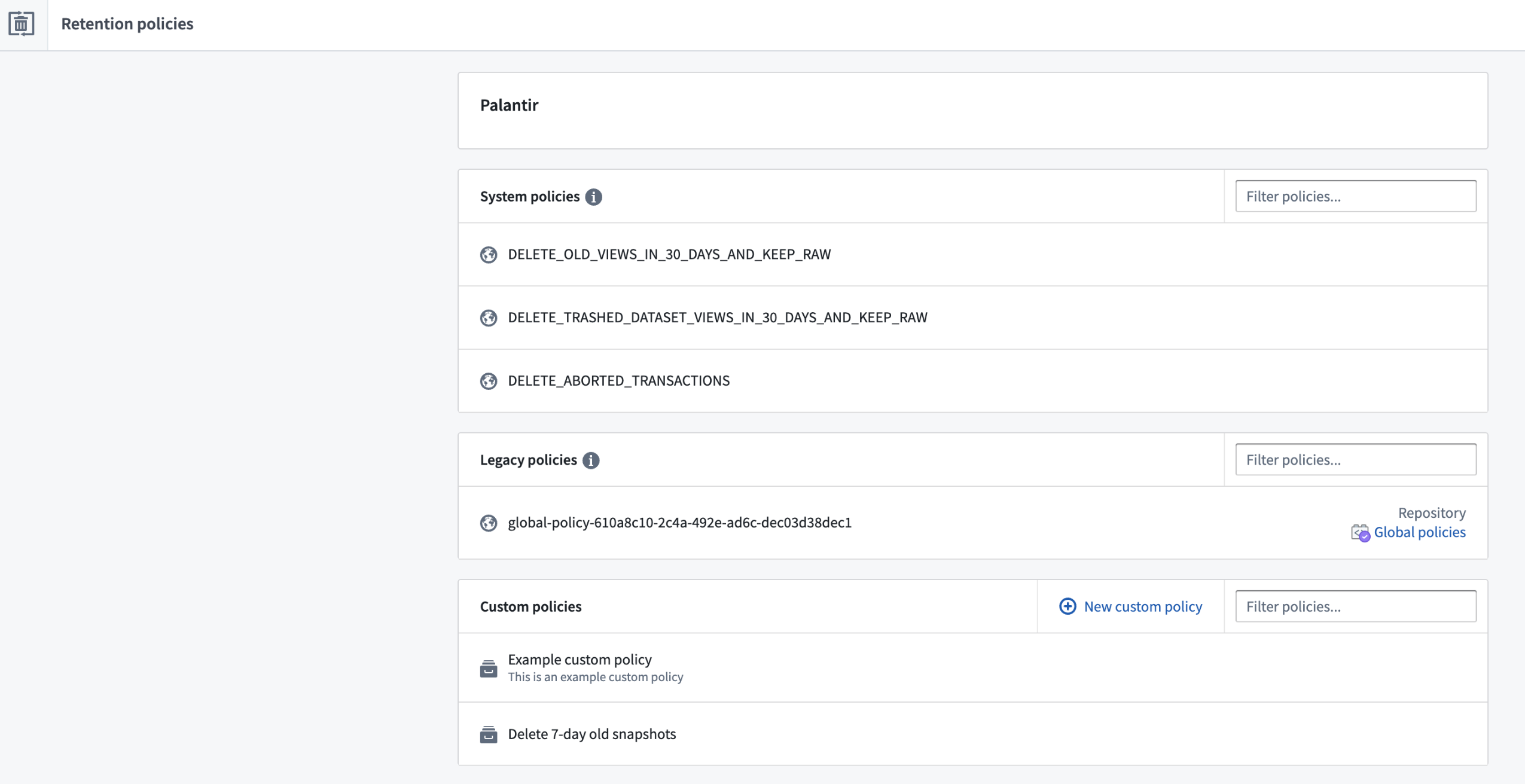

The application lists all retention policies defined on that namespace, including system, legacy, and custom policies.

- System policy: Retention policies managed by the platform. These policies are not configurable and are designed to only target old versions of data. If your use case requires these policies to be removed, contact your Palantir representative.

- Legacy policy: Retention policies managed by namespace administrators through Code Repositories. These are considered deprecated. We recommend migrating these policies to new custom policies and deleting the policy definition from the repository using the migration option on the legacy policy page.

- Custom policy: Policies managed by the namespace administrator using the Retention Policies application.

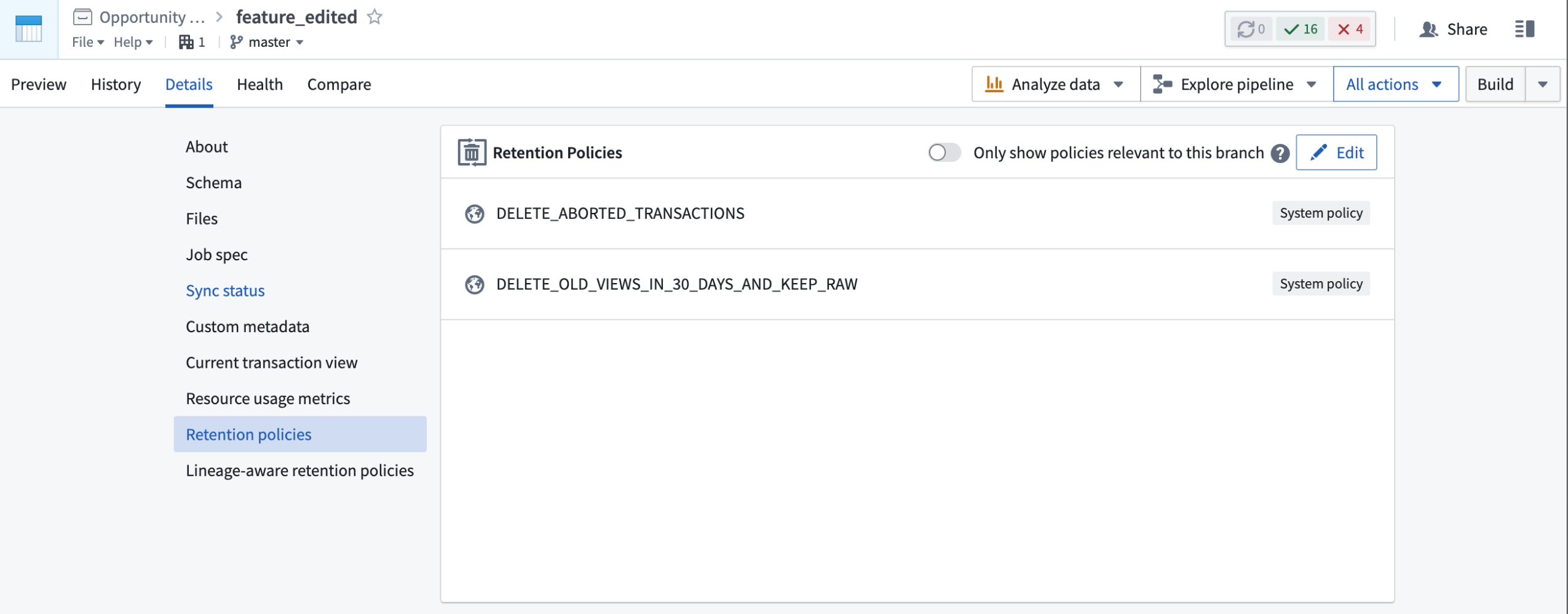

The Retention Policies application showing system, legacy, and custom policies.

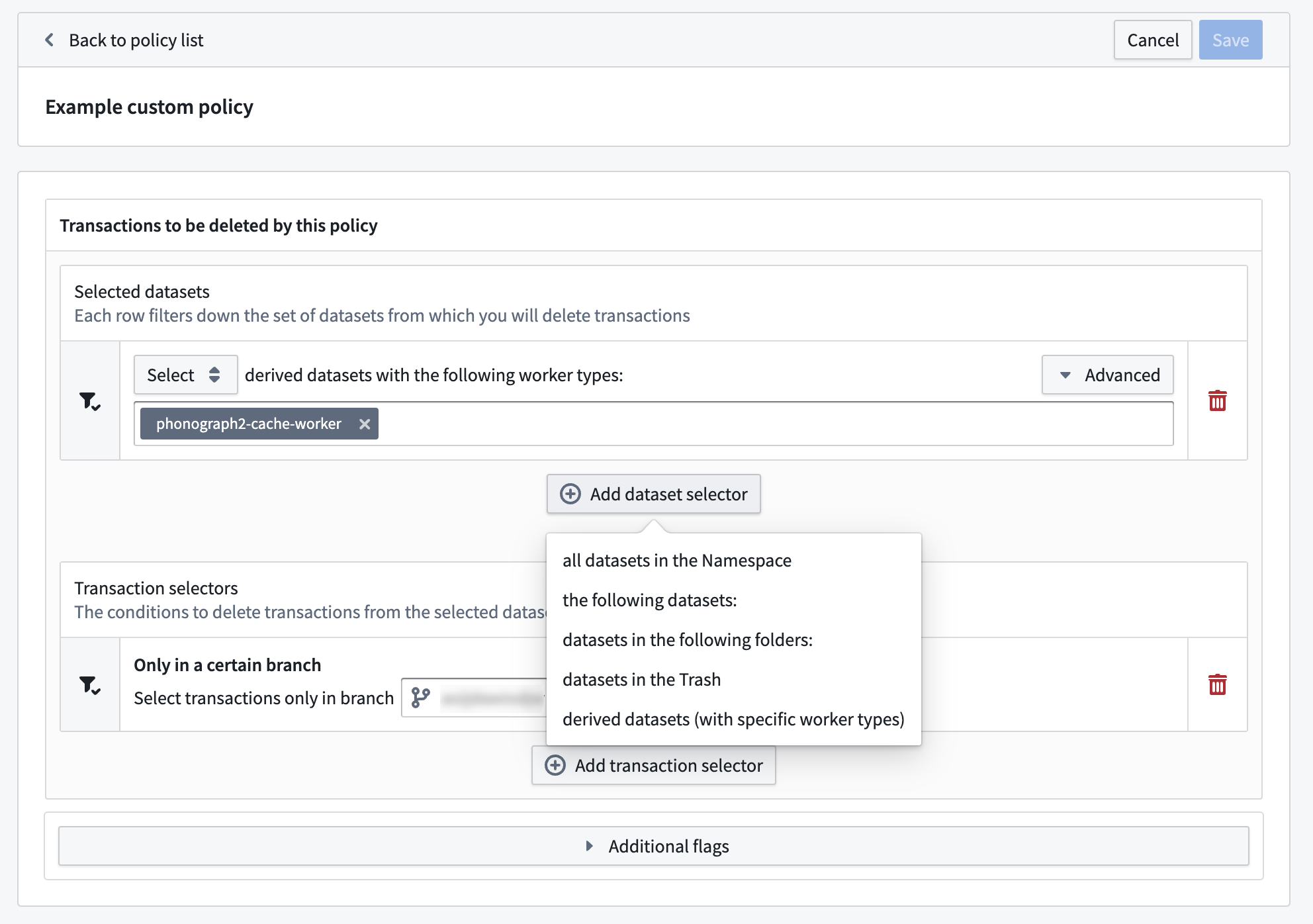

Benefits of Retention Policies

Retention Policies provides an intuitive, point-and-click interface to define and manage retention policies. These policies help administrators reduce storage costs by automating the deletion of redundant data and meeting regulatory and deletion requirements.

When managing retention policies, you can choose to add dataset selectors to identify the datasets required for the policy, and transaction selectors to narrow the scope of dataset transactions required for the policy.

A custom retention policy with an added dataset selector.

A condensed view of Retention Policies is also available through a dataset preview under the Details tab. This view will list all policies that effect the dataset and allow you to filter to the policies that affect only the current branch.

A condensed Retention Policies view listing two system policies.

What's next?

Our upcoming work includes using the Approvals application in Foundry to add approval flow support for each retention policy. This capability will allow administrators to review each policy before approving it.

Introducing HyperAuto V2 [Beta]

Date published: 2023-08-22

HyperAuto V2 will be available for installation by the end of August 2023 and will support SAP at release.

HyperAuto is Foundry’s automation suite for the integration of ERP and CRM systems, providing a point-and-click workflow to dynamically generate valuable, usable outputs (datasets or Ontologies) from the source’s raw data.

HyperAuto V2 introduces significantly updated user experience, performance and stability for automated SAP-related ingestion and data transformation.

The ability to create new SAP pipelines in HyperAuto V1 will be deprecated from August 22, 2023. Existing SAP pipelines and non-SAP systems based on HyperAuto V1 will remain available and unchanged.

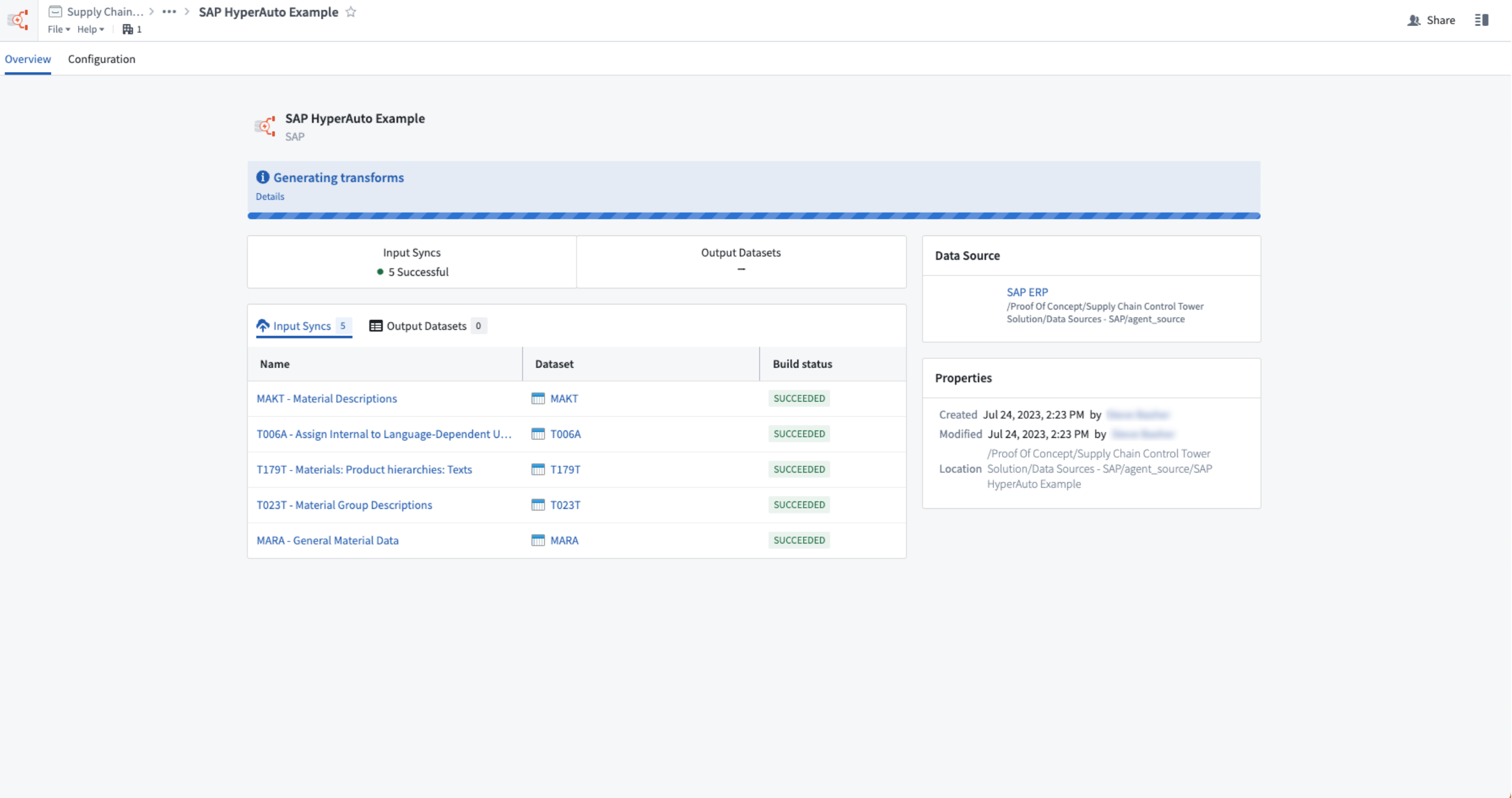

Overview of a live HyperAuto pipeline.

Point-and-click configuration

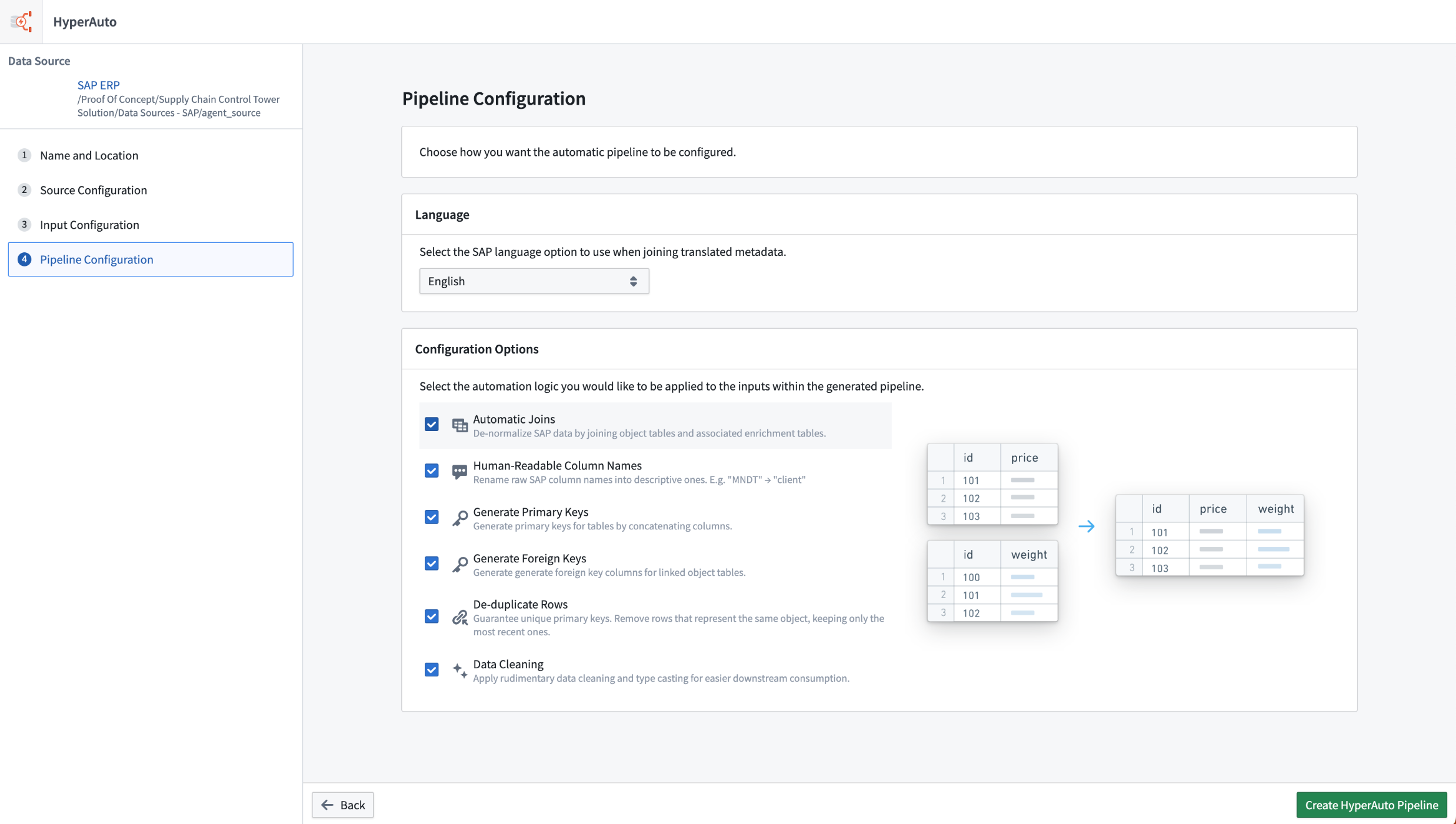

HyperAuto V2 has been rebuilt with ease-of-use in mind. To create and configure a HyperAuto pipeline, users must simply interact with an intuitive step-by-step wizard. Opinionated defaults are preconfigured to streamline the process, but users can adjust the settings as they deem fit if required.

HyperAuto’s key automation features include:

- Enrich core tables by automatically de-normalizing the data model, joining on tables containing relevant metadata and additional information.

- Format and clean the source’s data, ensuring column types are accurate, column names are intuitive and that null data is handled correctly.

- Intelligently de-duplicate change-log source data, ensuring an up-to-date, clutter-free version of each table exists for operational usage and analysis

For more, review the HyperAuto V2 documentation.

Pipeline configuration with opinionated defaults applied.

Data transformation via Pipeline Builder

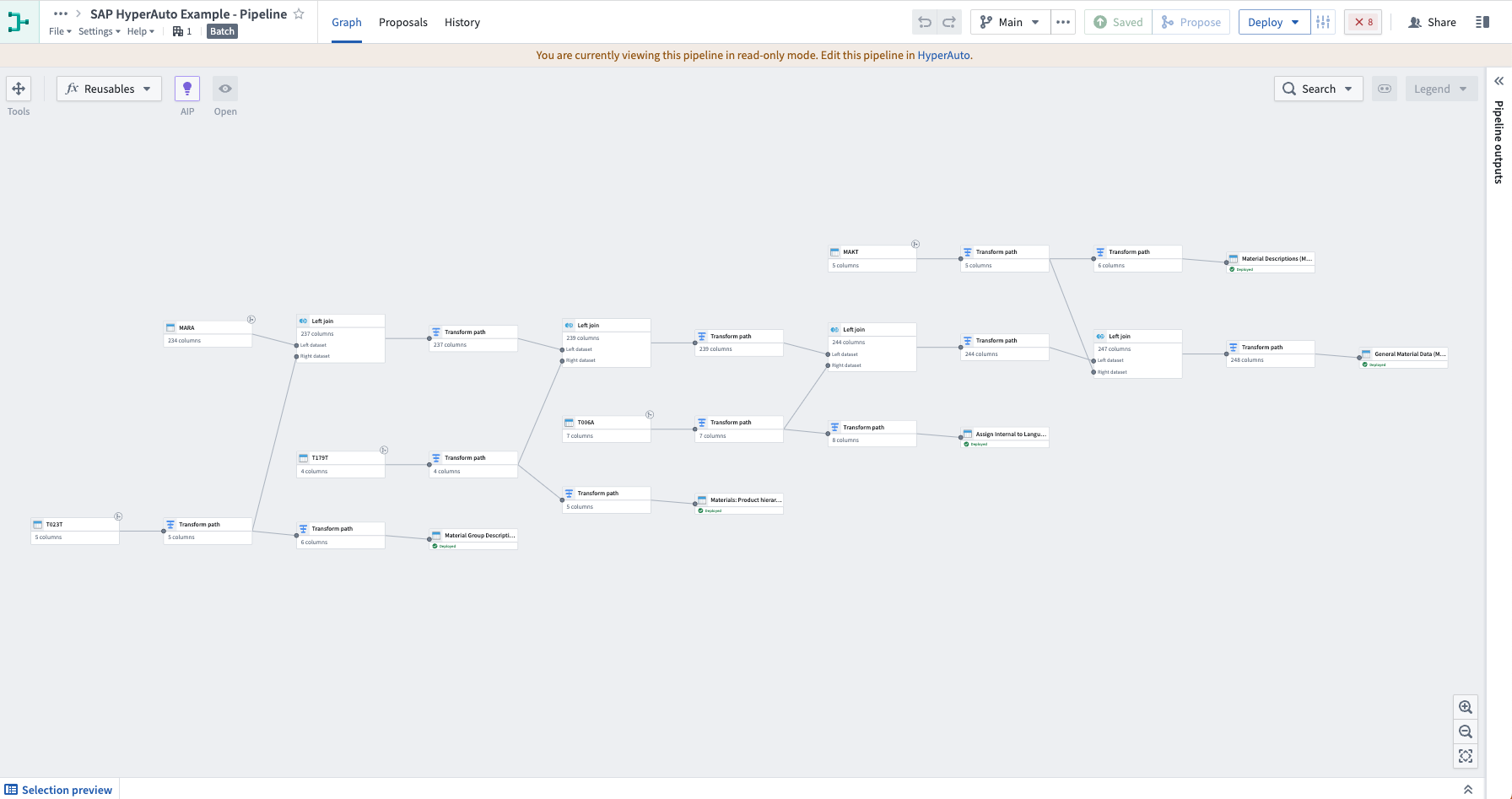

HyperAuto V2 dynamically generates Pipeline Builder pipelines to process the source data as required. This provides a familiar, easy-to-understand UI which enables full transparency on how the data is being processed and makes it easier to understand what configuration users might want to change within HyperAuto.

Any changes to an existing HyperAuto pipeline’s configuration creates a new proposal within the underlying Builder pipeline which must be approved and merged by a user before being deployed to the live pipeline. Pipeline Builder comes with a comprehensive change management workflow which HyperAuto makes use of, providing quality-assurance for critical workflows.

Dynamically generated Pipeline Builder pipeline to process source data.

Real-time data processing from SAP

HyperAuto V2 supports real-time streaming connections and data transformation for SAP sources (requires an SLT Replication Server), enabling critical operational applications that require a real-time view of the relevant SAP data. Additionally, HyperAuto V2 also brings significant performance improvements for batch pipelines.

If you would like to enable SAP streaming, contact your Palantir representative.

Time series chart now integrated with Ontology Actions

Date published: 2023-08-22

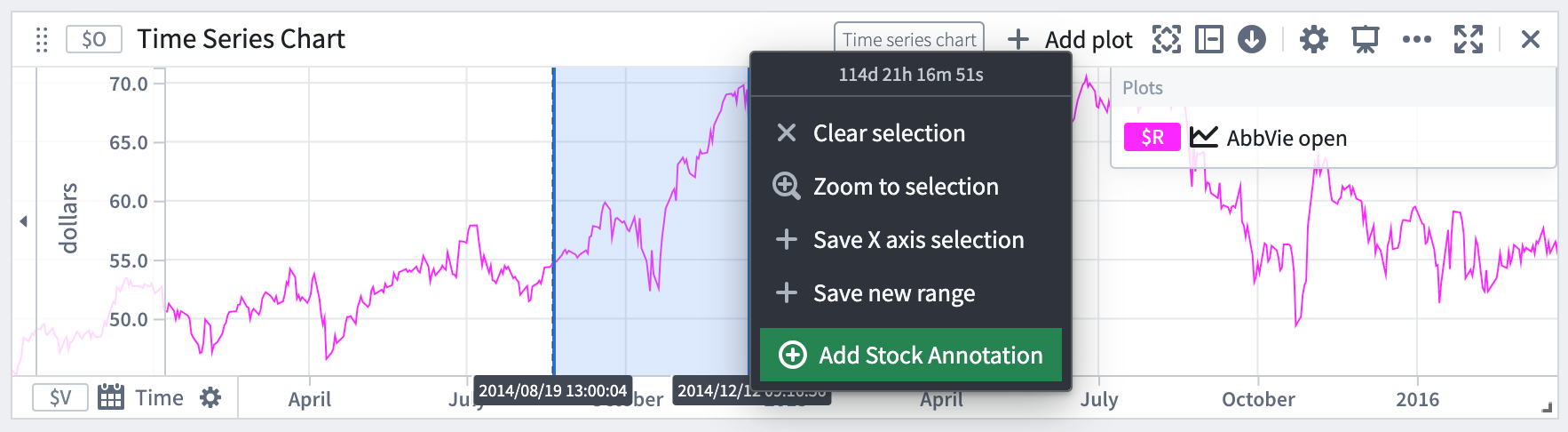

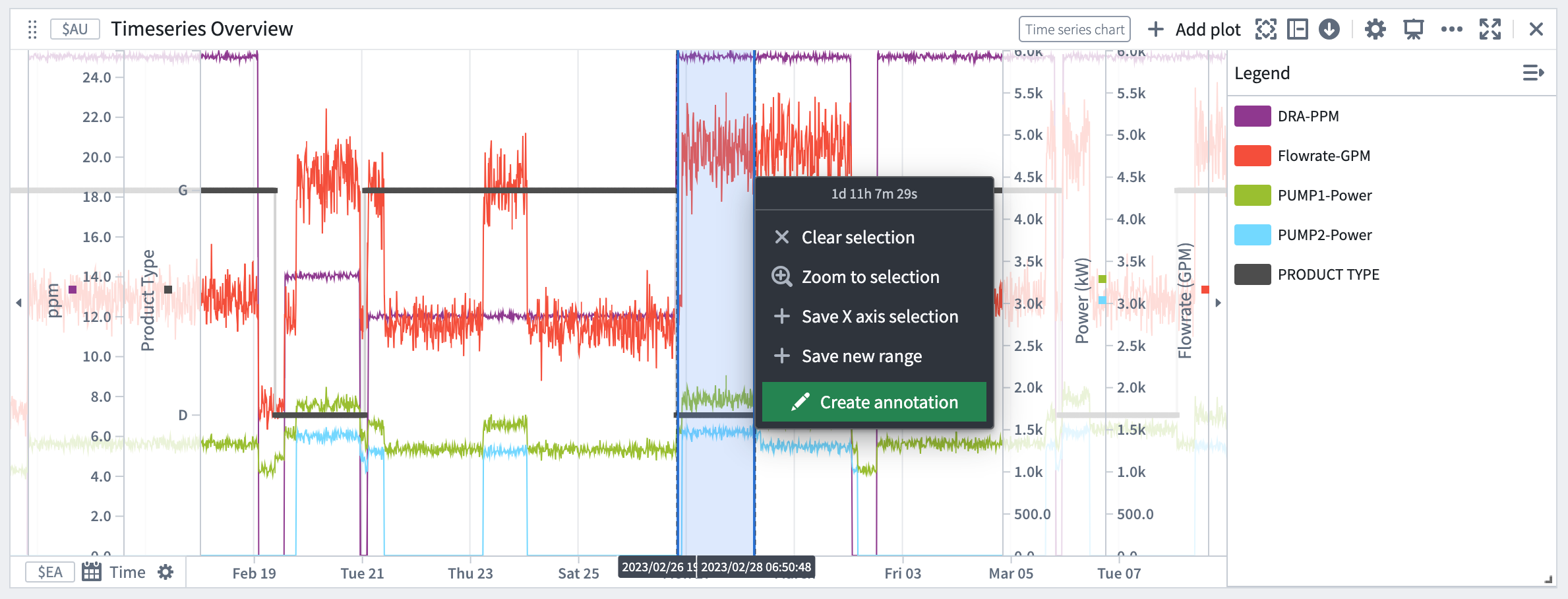

Quiver dashboard and Workshop application builders can now expose Ontology actions directly from the selection menu of the time series chart, offering a more intuitive user experience to take action in the context of the observed time series. This new integration between the Ontology action button widget and time series chart allows users to pass the chart selection X or Y boundary values as inputs to the Ontology action, enabling practical applications such as contextualizing an observed phenomenon on a time series or adjusting object property values based on a sensor time series values.

Expose Ontology actions directly from the selection menu of the time series chart.

In action

The new integration can be used to enhance your existing workflows. For instance:

Creating an "Annotation" object instance

Within a time series chart, you can now create an object that captures context on an observed phenomenon. For instance, this could include reasons for a machinery malfunction, or a market macro event that affected a stock index. The start and end temporal values of the x-axis selection are passed as inputs to the Create annotation Ontology Action, resulting in an annotation event. The ability to annotate the plot with multiple events provides greater clarity on the data visuals at hand.

The start and end temporal values of the x-axis selection pass as inputs to the "Create annotation" Ontology Action.

The start and end temporal values of the x-axis selection pass as inputs to the "Create annotation" Ontology Action.

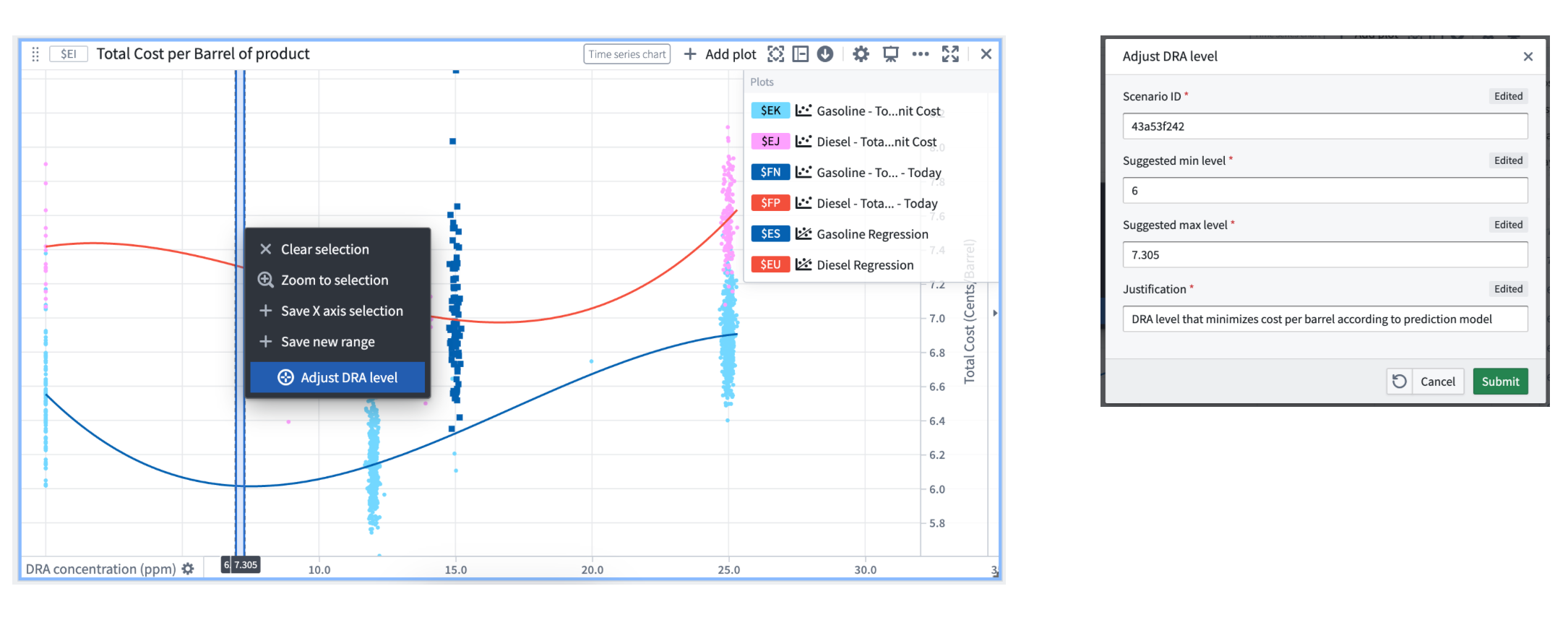

Adjust operational values

You also have the ability to invoke Ontology actions to update a property value based on an observed phenomenon. For example, the time series chart below displays two curves that show the predicted total cost per barrel for two types of products, Gasoline (in blue) and Diesel (in red). We see that today’s actual total cost per barrel (y-axis) for Gasoline (dark blue dots) ranges from 6.35 to 8.45 cents/barrel and is far from the minimal predicted total cost, which is 6.01 cents/barrel. One action we can take to lower the production cost is to adjust the DRA concentration rate from 15 ppm to a value closer to 6.5 ppm. By selecting a range around 6 on the x-axis, we can then select the Adjust DRA level Ontology action which will be set with the selection boundary values automatically.

Ontology action invoked to update a property value based on an observed phenomenon.

Streaming transforms migration to Java 17 scheduled end of September 2023 [Migration/Deprecation]

Date published: 2023-08-17

At the end of September 2023, all Foundry Streaming transforms will migrate their runtimes from Java 11 to Java 17 in order to take advantage of Java 17's improved performance and security posture. The Java 17 runtime introduces a breaking change that prevents reflective access to members of internal JDK classes. Most streaming transforms will not be affected by this change, but this announcement is being broadly published for general awareness.

While we expect that the majority of Foundry Streaming transforms will be unaffected by this migration, it is possible for breaks to occur in user-defined function (UDF) streaming transforms where implementations (or their associated external libraries) reflectively access JDK internals. Palantir will work to identify at-risk UDF implementations and contact potentially-affected pipeline owners.

If you own a Foundry UDF Definition repository that is used in any Foundry Streaming pipeline, verify that your UDF is compatible with Java 17 by completing the following steps:

-

If your UDF definition depends on any external libraries, verify that the versions of those libraries are compatible with Java 17.

- Open the hidden

versions.propertiesfile in the root of your UDF definition repository. - Identify any dependencies that were added manually from external repositories (that is, dependencies that were not added by default by the repository template).

- For each such dependency, verify that the declared minimum version in the

versions.propertiesfile is compatible with the Java 17 runtime. This may require checking the dependency’s release notes.

- Open the hidden

-

Verify that the UDF implementation does not reflectively access internal JDK classes.

- If your UDF implementation does not use reflection, no further steps are required.

- If your UDF implementation does use reflection, ensure that either:

- The accessed classes are not JDK internal classes.

- Your implementation can properly handle an

IllegalAccessExceptionand will not break if certain reflective operations begin throwing this exception.

If you encounter any issues remediating UDF definition repositories, or if you have any questions or concerns related to this notification, contact your Palantir administrator.

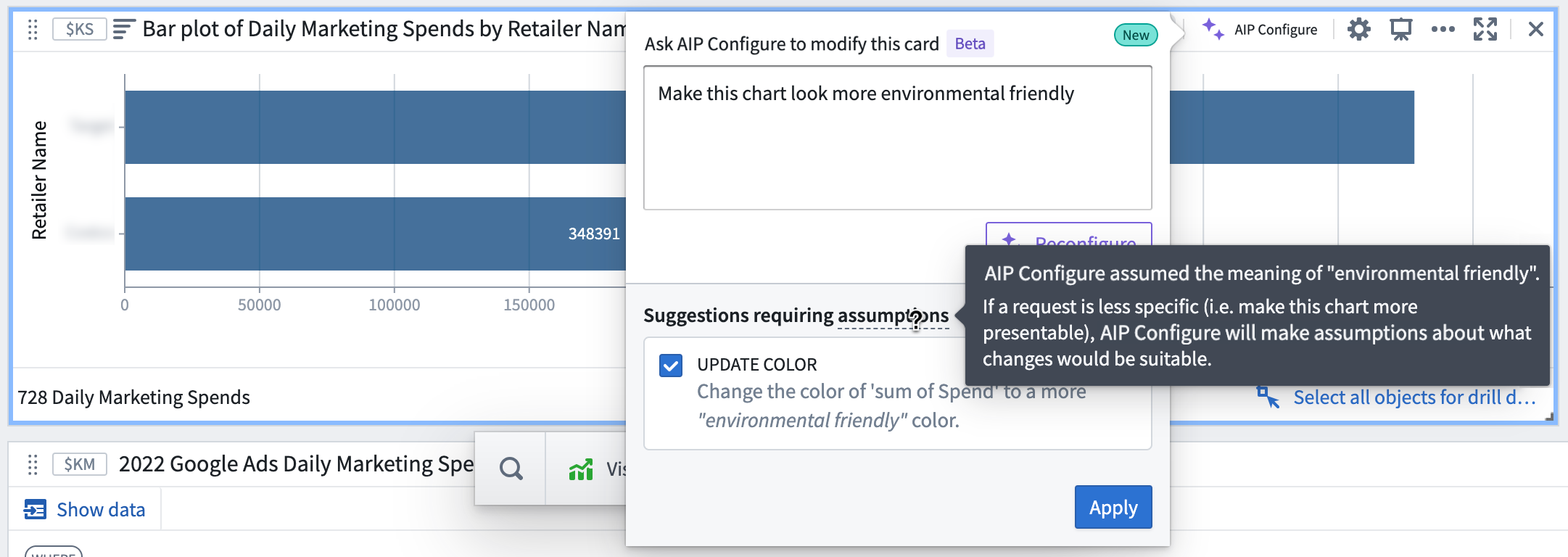

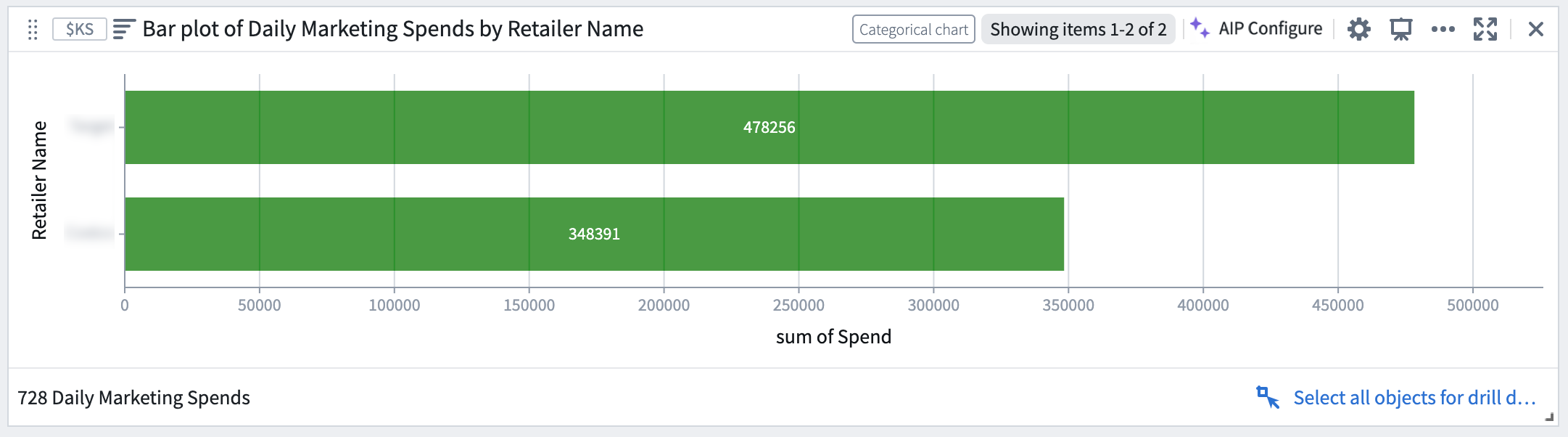

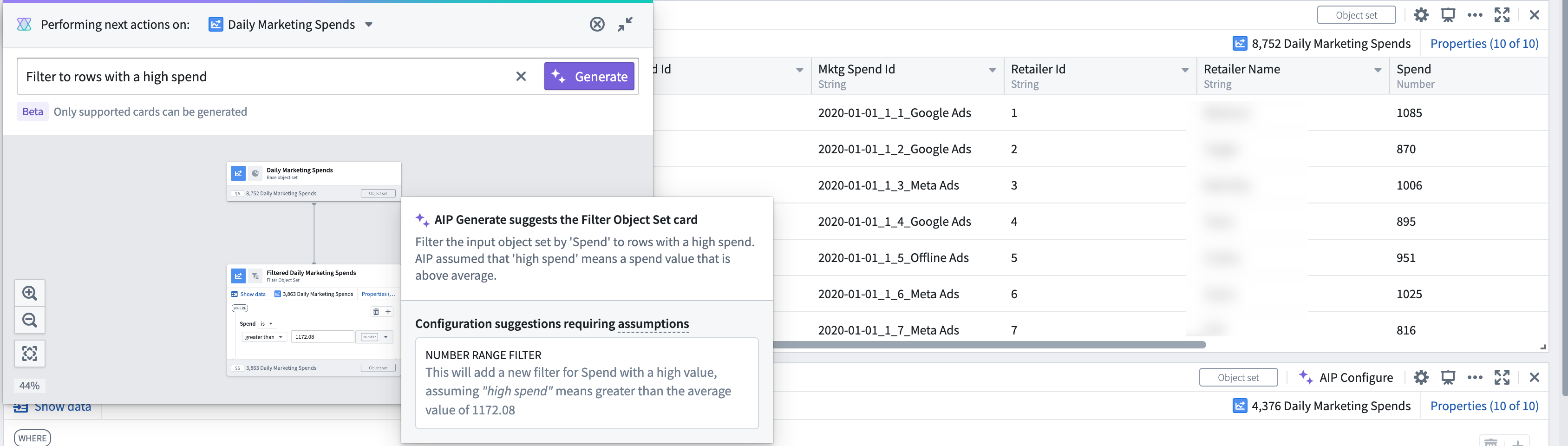

AIP in Quiver: Transparent assumptions and enhanced ambiguity handling

Date published: 2023-08-09

AIP in Quiver can now answer prompts that are ambiguous, subjective or that use relative terms, such as “Filter transactions to high spend values” or “Group by the most important customers”. Additionally, with increased contextual awareness, AIP can now handle spelling mistakes in column names and data values by inferring the correct values from the object set schema and data.

Transparent assumptions made by AIP Configure

Suggested AIP Configure card configurations have been divided into two categories: configuration suggestions and configuration suggestions requiring assumptions. If a request is less specific, such as "Make this chart more presentable", AIP will make assumptions about the ambiguous parts of the prompt (in this example, ambiguity is inherent in the meaning of "presentable") to infer what changes might be suitable. Users are able to see the parts of their prompt where AIP made assumptions and what those assumptions were before they accept the suggested change.

AIP Configure-suggested configuration changes showing assumptions made to answer the prompt

State of the bar plot after accepting the suggested configuration changes

How AIP uses Ontology data

Along with the user prompt, Quiver now sends AIP a summarized version of the object set data, or “property value hints” for processing. Property value hints are only computed for properties that are found to be relevant to answering the user prompt, as follows:

- String property types: Up to 100 unique values are computed

- Numeric property types: The min, max, and average are computed

Previously, without having a sample of valid values, the LLM-filtered object sets were using values that were not in the set. This often resulted in an empty filtered object set, which would then affect the downstream cards in the produced graph. With these improvements, AIP can now use property value hints to correct misspellings of column names and property values and to determine threshold values when making quantitative descriptions such as high, low, and maximum.

State of the bar plot after accepting the suggested configuration changes

To better understand how AIP works in Quiver, review AIP features in Quiver documentation.

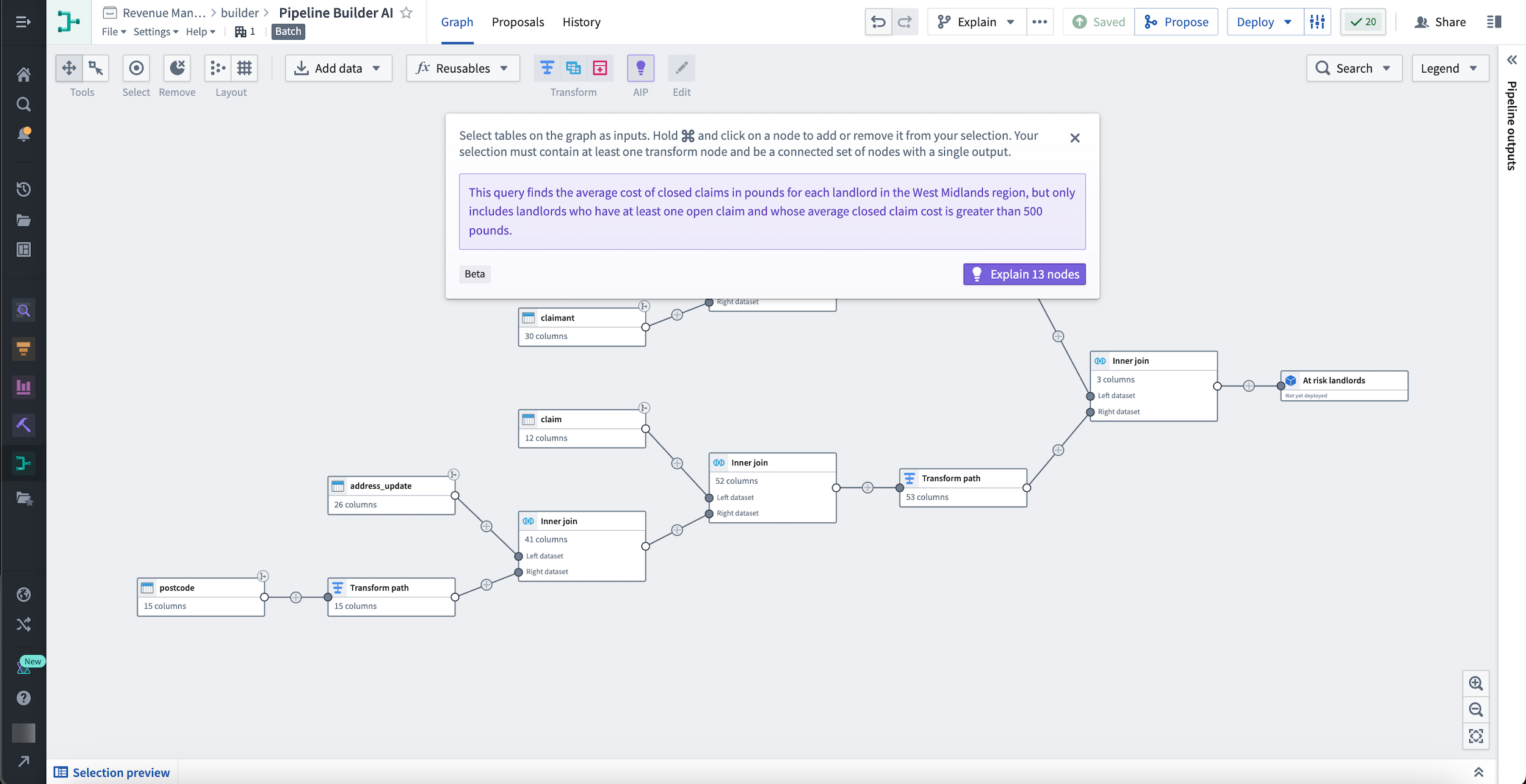

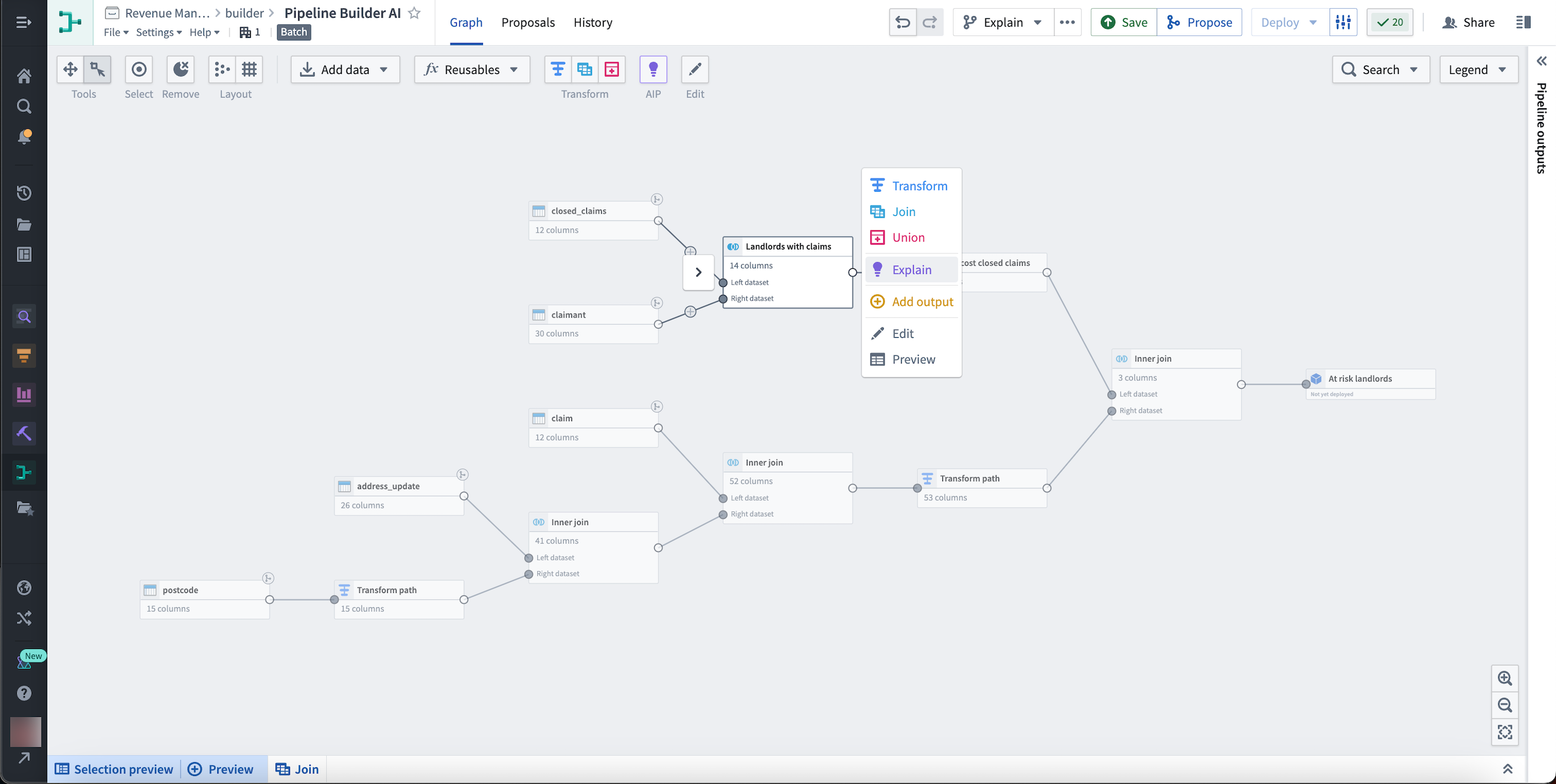

Introducing AIP in Pipeline Builder

Date published: 2023-08-09

AIP in Pipeline Builder helps you harness the power of AI to build production pipelines with greater ease and transparency in your result. With AIP in Pipeline Builder, you can benefit from many capabilities:

- Understand complex pipelines with the Explain feature.

- Generate suggestions for useful transform path names and descriptions.

- Quickly create and modify regular expressions.

- Easily cast strings to set timestamp formats.

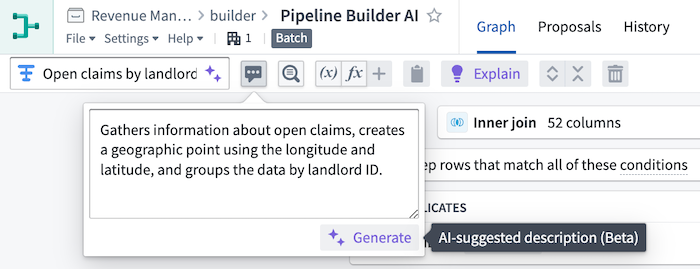

Explain pipelines with a single click

Dynamically obtain descriptions for your pipelines through every step of the development process and keep your collaborators in sync on the current state at all times. Use the Explain feature to provide valuable context for new approvers or facilitate knowledge transfer between new team members with minimal maintenance effort.

You can use Explain to learn more about multiple nodes, as shown below:

Alternatively, learn more about an individual node by selecting it and choosing the Explain option from the menu that appears to the right.

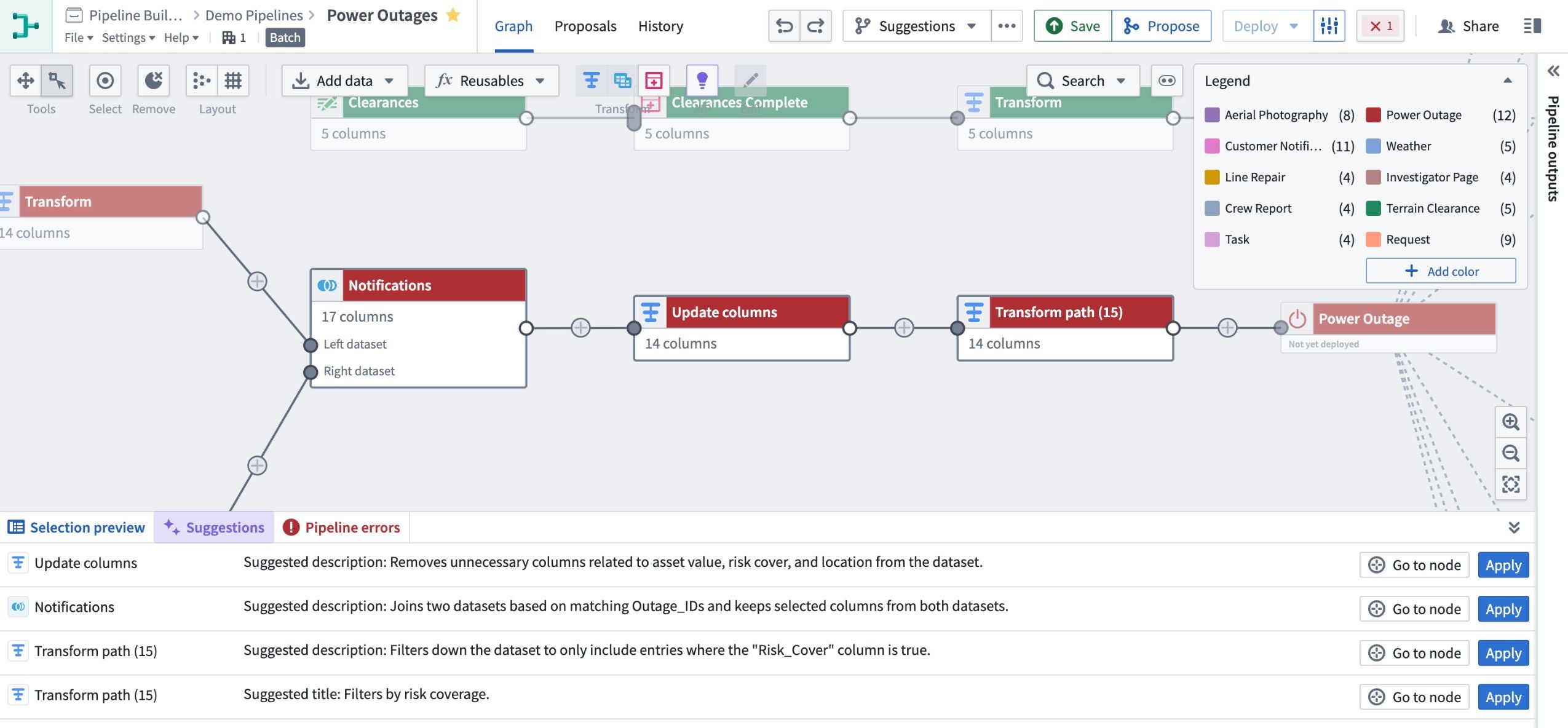

Generate better path names and descriptions

You can also use Explain to suggest and apply helpful transform path names and descriptions to facilitate collaboration and transparency throughout your pipeline. This can be especially useful when understanding complex pipelines and transformations in a given use case.

You can even generate new names and descriptions in bulk using the Suggestions tab in the bottom of the graph view.

Easily configure and apply transforms

Use Pipeline Builder's Transform Assist features to help build complex paths in your pipeline.

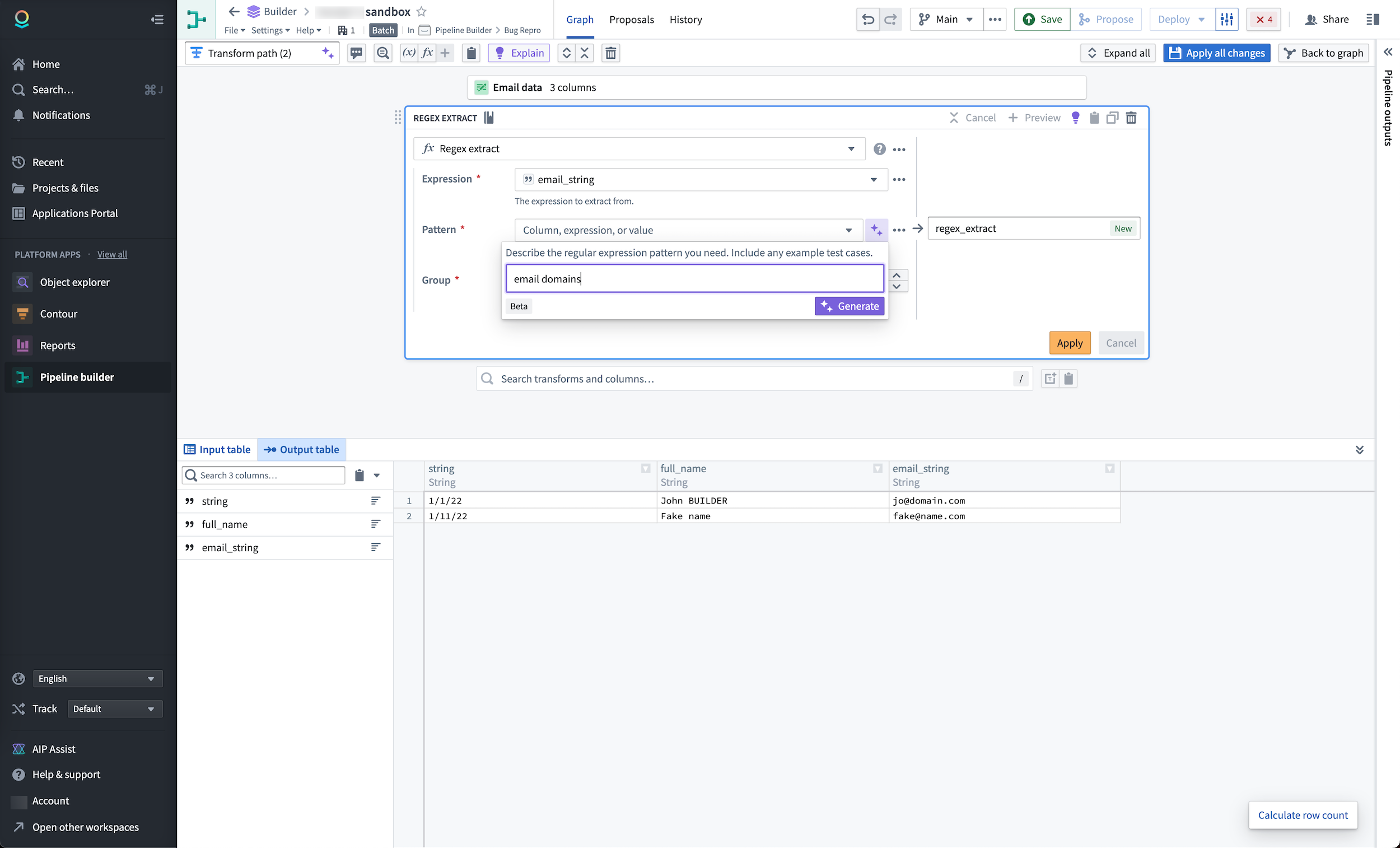

Modify and create regular expressions

With Pipeline Builder's new Transform Assist features, you can easily create and modify complex regular expressions by entering a description of the information you want to find into the regex helper. In our example below, we want to apply a regex to return email domains in a dataset.

AIP will generate a regex that will return the results defined when applied.

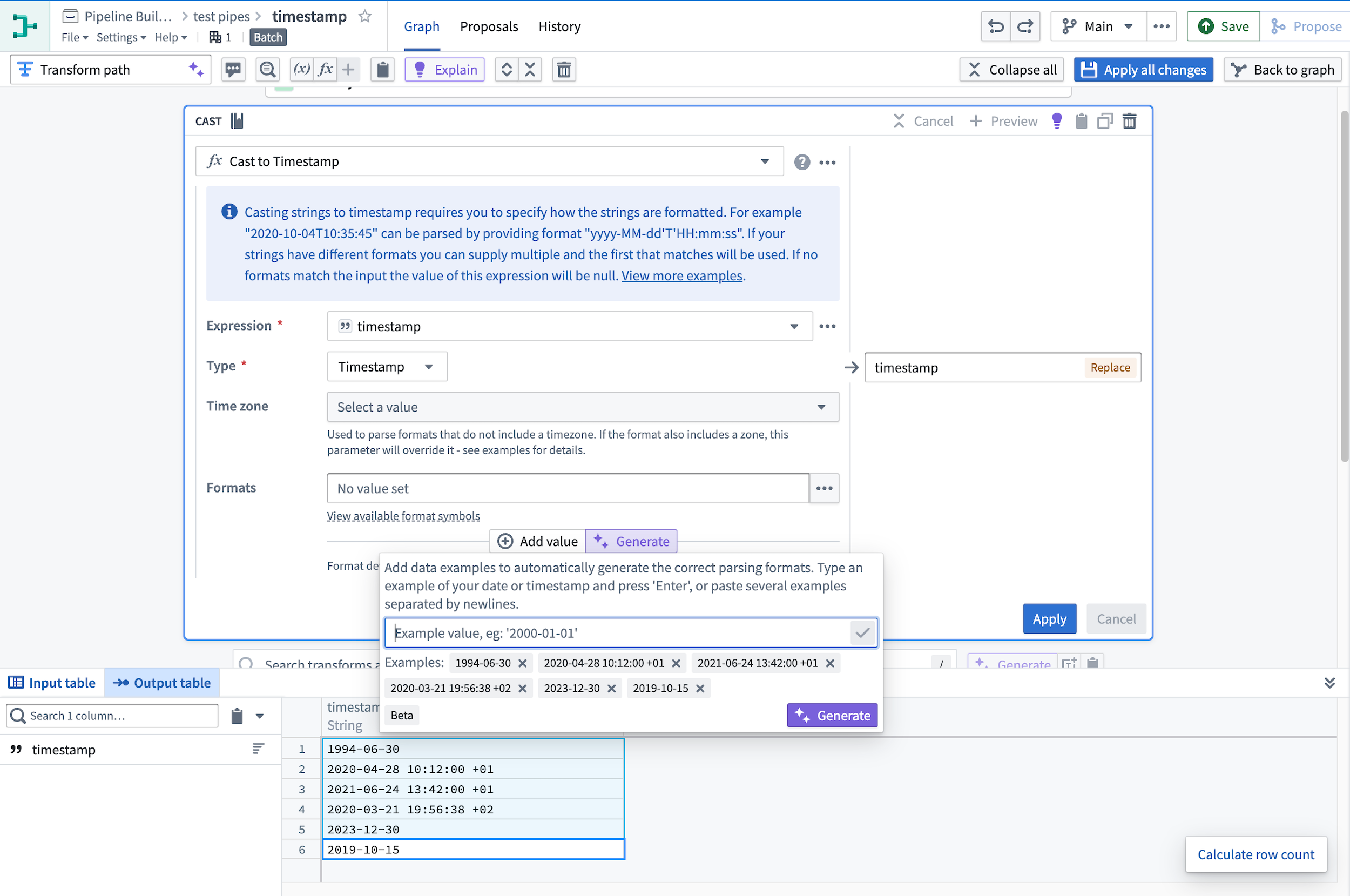

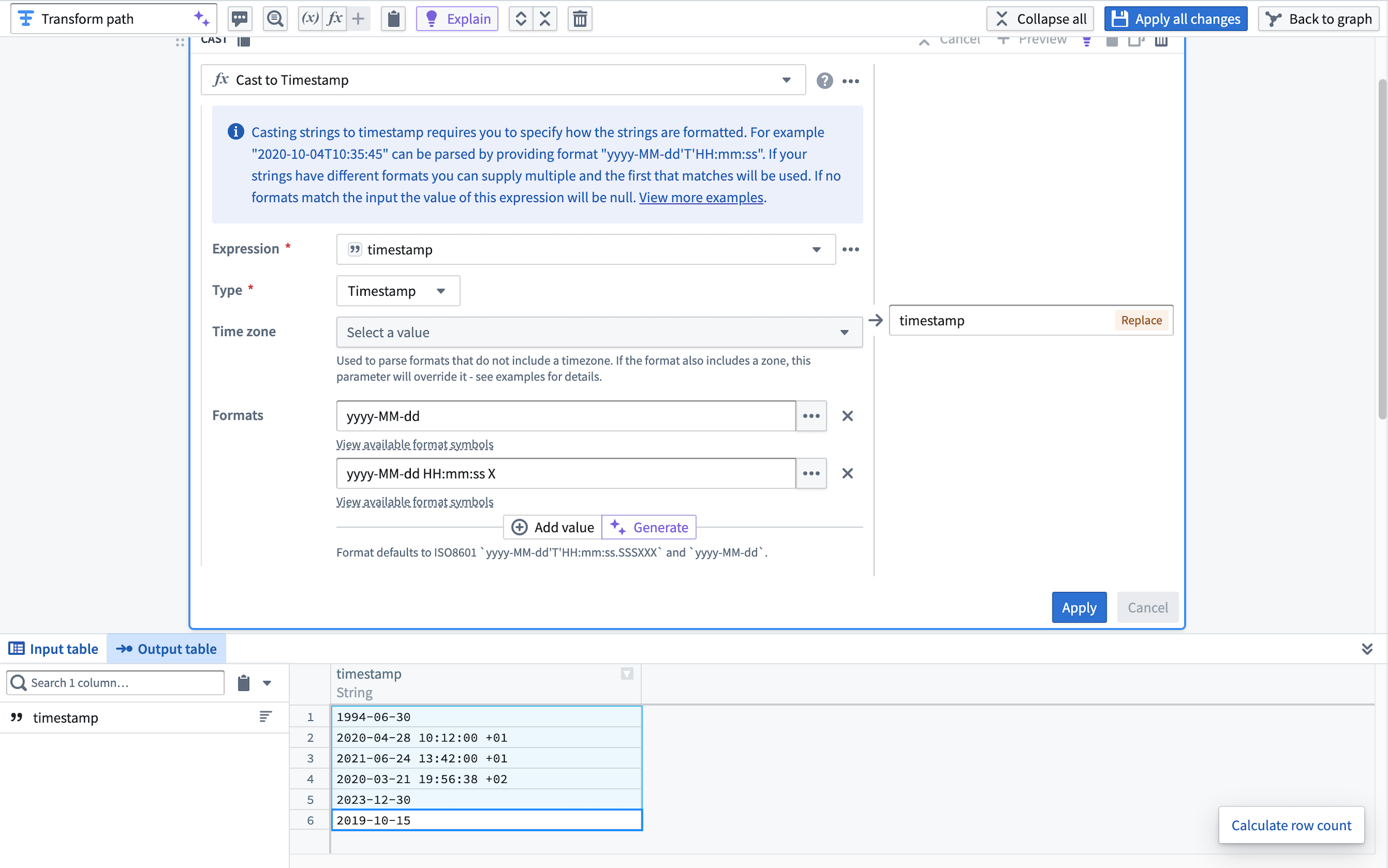

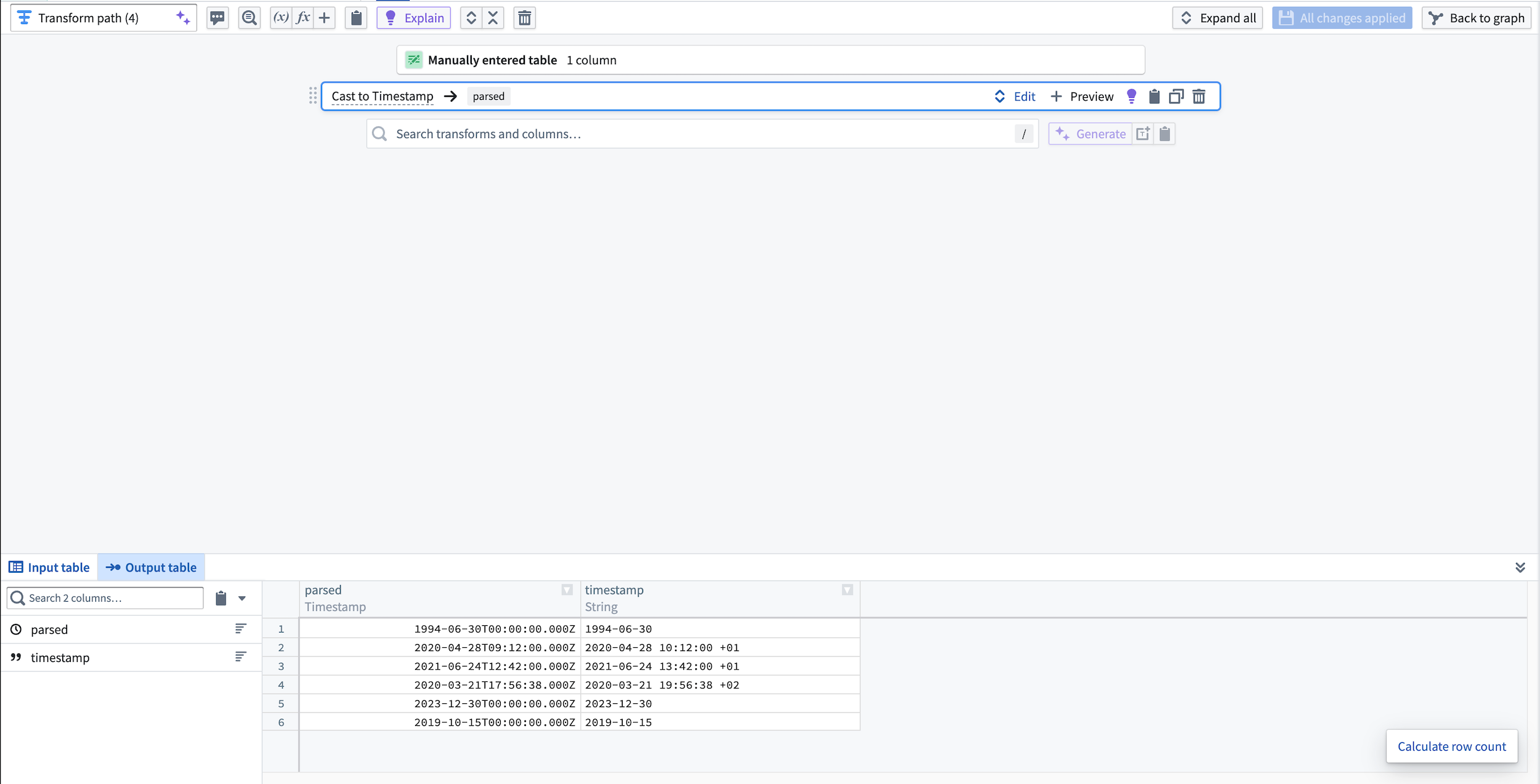

Quickly cast strings to timestamps

With Transform Assist, you can also use the timestamp formatter tool to efficiently cast string columns to specified timestamps. Within a cast board, define the parsing format and select Generate to quickly add your format configurations to the board.

Select Apply to cast the string column into the specified timestamp format.

View the new parsed timestamp column in the output dataset preview.

Learn how to get started using AIP features in Pipeline Builder.

Legacy data connection agents to be deprecated in favor of optimized direct connections August 2023 [Sunset]

Date published: 2023-08-07

Palantir Foundry is deprecating its legacy data connection agents in favor of a data connection migration to Rubix ↗, a secure, more reliable, and more efficient cloud offering that runs on Kubernetes. This update means data connection agents are no longer required as they were a provisional solution that allowed Palantir to connect with outside customer networks.

As such, all agents installed on Palantir-provisioned AWS hosts must be decommissioned by the end of August 2023 and all affected workflows need to be moved to direct connection. Palantir engineers are currently working to complete the migration for customers on stacks that have Rubix installations that can run cloud ingests.

Benefits of moving workflows to direct connection include:

- High reliability: Direct connection is more reliable and easier to manage than an agent. There is no requirement of a weekly maintenance window, thus removing downtime.

- Better parallelism: Direct connection is better able to handle multiple parallel syncs and takes advantage of auto-scaling directly in Foundry since each sync runs as an independent job.

- Better networking management: Jobs only have access to the endpoints they need to run, as opposed to the agent-based connection where a single agent host must satisfy networking requirements for all jobs that need to run via that agent.

- Self-service: Direct connection removes reliance on Palantir support, since its administration can be managed directly in Foundry's UI.

If you prefer to continue using agent-based workflow, you can create a new agent hosted on your own hardware, and assign your agent(s) to the affected source.

To complete the migration, follow the instructions provided in the documentation for switching a source from an agent worker runtime to a direct connection.

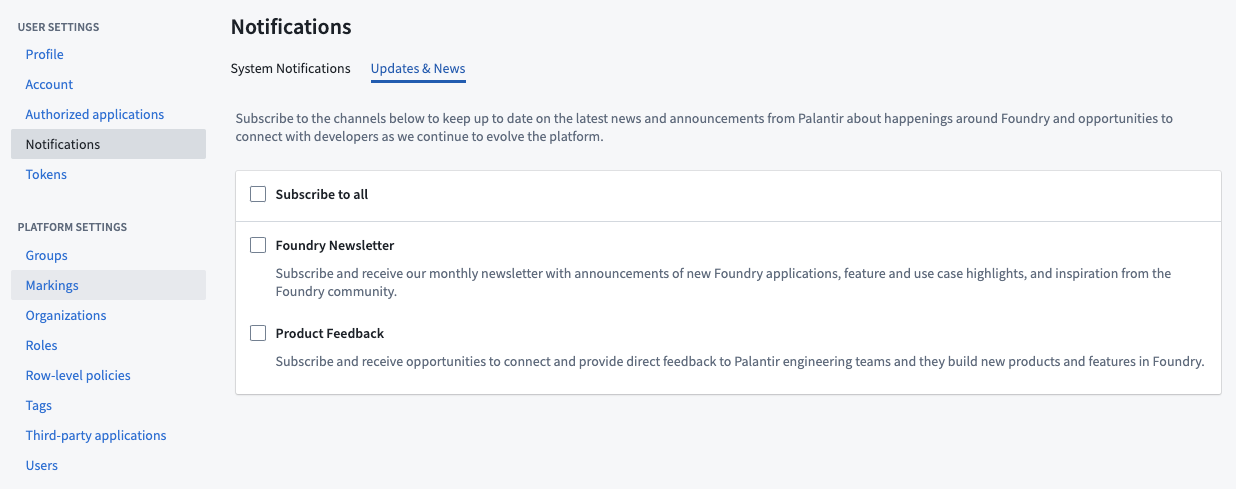

Introducing the Foundry Newsletter [Beta]

Date published: 2023-08-01

Keeping up to date of all the exciting changes going on across Foundry can be challenging. While we strive to diligently collect our announcements and release notes, we know you've been waiting to receive these updates directly. We're excited to announce the beta release of the Foundry Newsletter subscription, available soon under the Notifications preferences within user Settings.

In addition to the monthly Foundry Newsletter, which summarizes new products within Foundry, new features and improvements across the platform, and general news and updates, users can also opt-in to the Product Feedback channel. This channel will share opportunities to connect with Palantir engineers as they seek targeted user input for ongoing development across the Foundry ecosystem.

Newsletters and other content shared through these opt-in subscriptions will be sent to the email address associated with the Foundry user account. Note that subscription information, as with all other account details, is stored solely within the boundaries of the Foundry enrollment. No email addresses or other user identifying information are collected centrally or sent outside the enrollment.

How to subscribe to Foundry Newsletter and Product Feedback

Additional highlights

App Building | Workshop

Cut, copy, and paste widgets and sections | Workshop builders can now cut, copy, and paste entire sections and individual widgets within a module for a faster building experience.

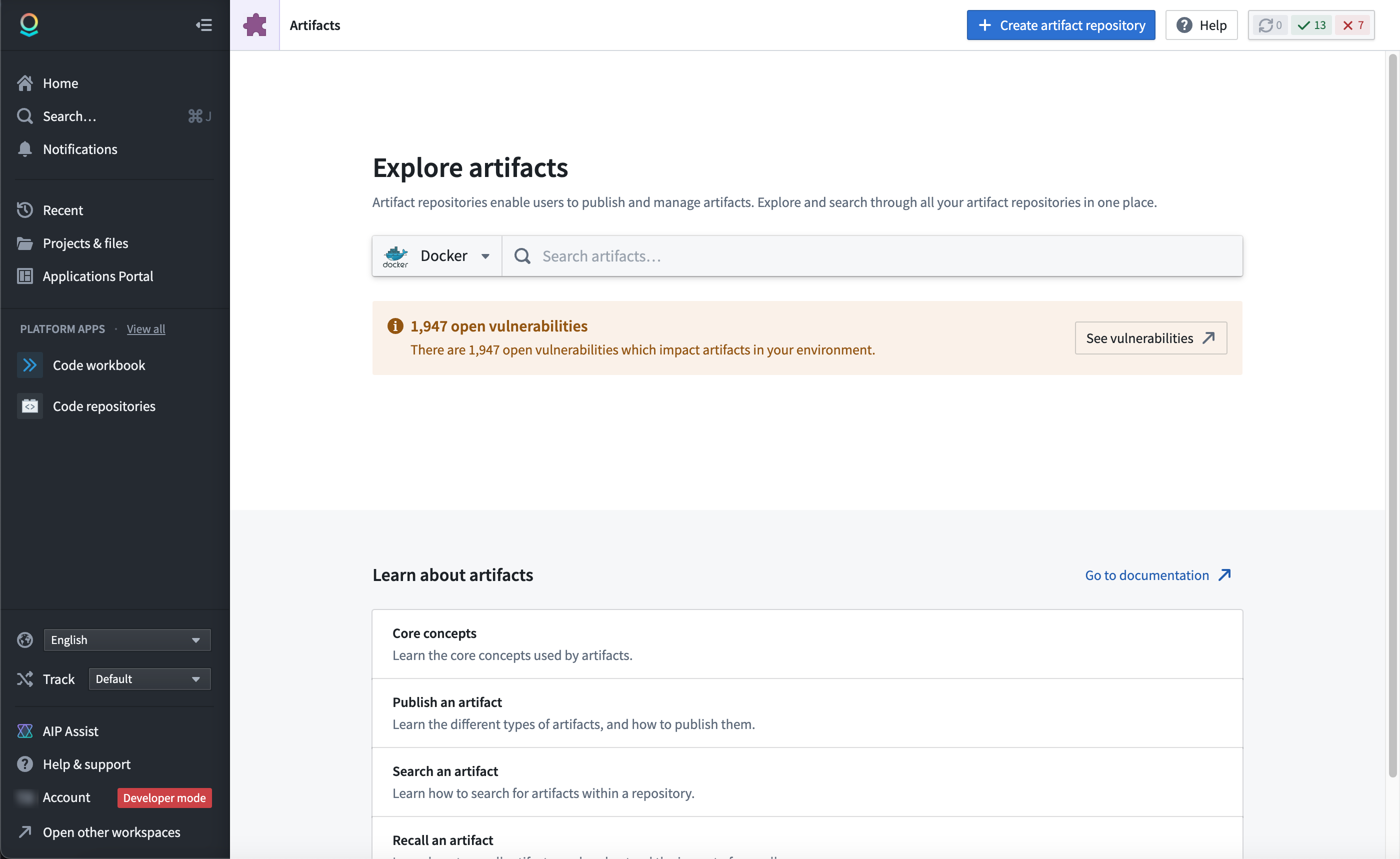

Artifact Repositories

Release Artifacts home page | Use the Release Artifacts home page to explore and search artifacts in Foundry.

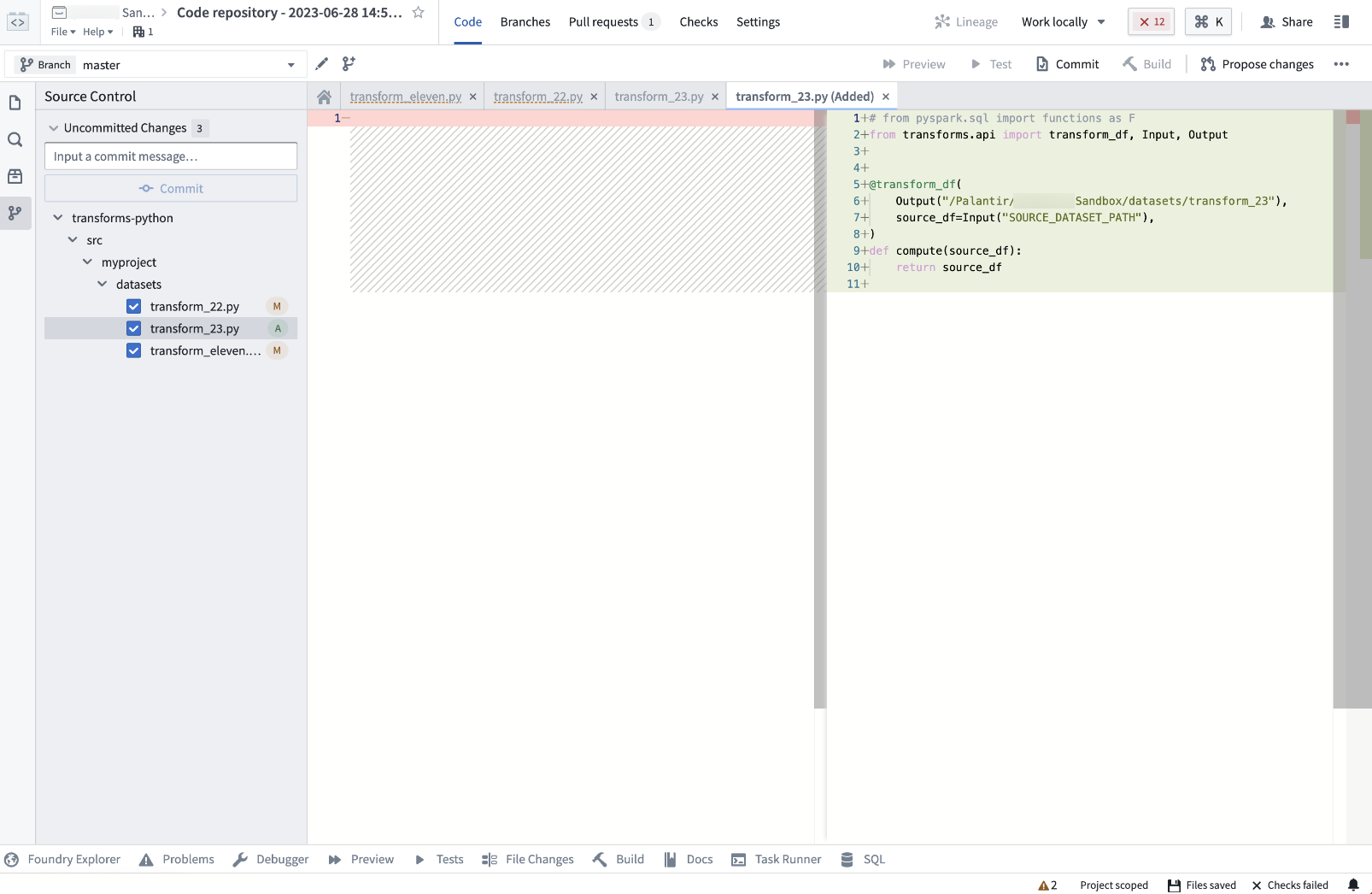

Data Integration | Code Repositories

Source Control enabled, new feature flag, and minor changes | Code Repositories users now have access to the Source Control feature, a new panel that displays file changes and allows users to commit them to a remote repository. In addition, we have made improvements to the user experience. The Commit option now opens the Source Control panel to make it easier for users to access and use the feature. How it works:

- When you open Code Repositories, you will now see a new panel for Source Control.

- This panel displays any changes made to the files in the project.

- You can select the files you wish to commit, and provide a commit message to describe the changes.

- Once you are satisfied with your changes, select Commit to commit the changes to the remote repository.

Administration | Control Panel

Announcing Foundry in Ukrainian [Beta] | Foundry's Ukrainian translation is now available in beta. Review the documentation on configuring available languages to enable the Ukrainian language translation.

Data Integration | Pipeline Builder

Simplify copy/paste and add hotkeys | The local node copy and paste functionality has been replaced with a duplicate function that can be accessed using the hotkey d. To copy a selected node to the clipboard, use hotkey Cmd+c (MacOS) or Ctrl+c (Windows), and to paste, use Cmd+v (MacOS) or Ctrl+v (Windows).

App Building | Workshop

iframe widget: Embed eternal applications | The new iframe widget in Workshop allows users to embed external, full-page applications within the platform. This feature supports rendering an external URL and is specially designed to enable iframed Slate embeds with support for input and output parameters, providing a seamless integration experience.

App Building | Workshop

Cut, copy, and paste widgets and sections | Workshop builders can now cut, copy, and paste entire sections and individual widgets within a module for a faster building experience.

Ontology | Ontology Management

Notification preferences for action types | Users can now configure notifications for action types to only notify users in the recipient list with the correct permissions; those without proper access will be ignored. Previously, the notification would fail for all users in the recipients list if any single user did not have proper permissions.

Analytics | Quiver

Open card in new analysis | You now have the option to open a card in a new Quiver analysis through the More actions (...) menu icon at the top right of Quiver cards. This action will copy the selected card over to a new analysis along with any upstream cards that this card depends on.

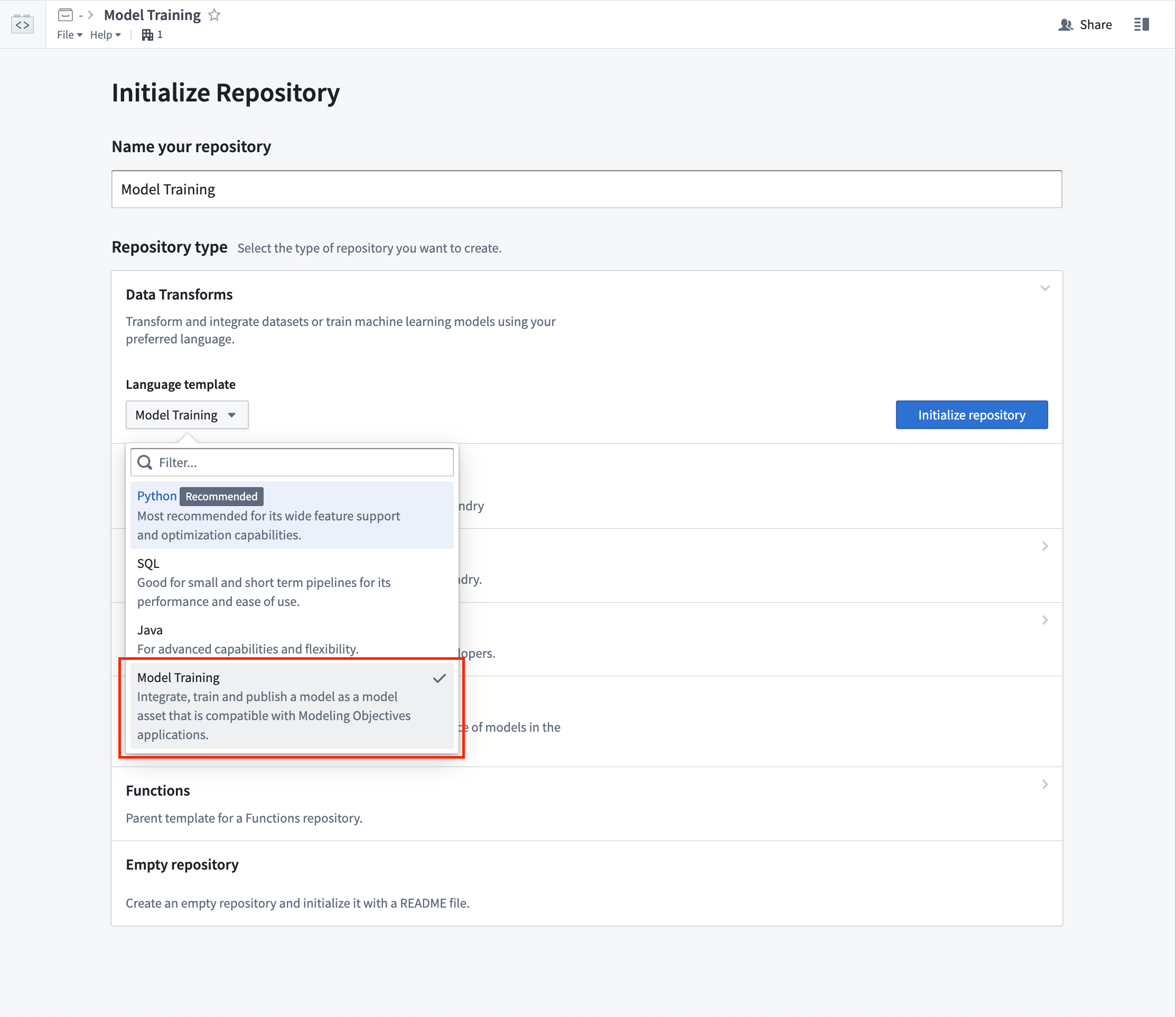

Model Integration | Modeling

Model Training Template now available in Code Repositories | The Code Repository application now provides a model training template. The new model training template allows you to write Model adapters in the same repository as your model training logic, resulting in faster iteration and a more straightforward development process in Foundry for ML and AI models.

This model training template automatically adds the correct model dependencies to all models produced inside the repository. This simplifies dependency management when models are deployed in the Modeling Objectives application in Foundry by automatically ensuring that all build-time dependencies are made available at inference time.

To get started developing ML and AI models with the new training template, refer to the documentation.

Screenshot of selecting the Model Training Template in Authoring